fab13

I am confused about two uses of Maximum Likelihood (ML): recovering (approximately) the original signal from the observed data, and estimating the parameters of the PSF.

1) Recovering (up to a point) the original signal:

I start from this general (discretized) model: ##y=H\,x+w\quad(1)## (with ##w## white noise)

How can I demonstrate this relation? (It seems we should start from a discrete convolution product, shouldn't we? Then the correct expression would rather be ##y=H*x+w##, with ##*## the convolution product.)
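A quick numerical check of how the two notations relate, assuming a 1D signal and a "full" convolution (no boundary truncation): the discrete convolution ##y_i=\sum_j h_{i-j}\,x_j## is linear in ##x##, so it can be written as a matrix-vector product with a Toeplitz matrix whose columns are shifted copies of the PSF samples. The helper name `convolution_matrix` and the numbers are hypothetical, and NumPy is used instead of MATLAB.

```python
import numpy as np

def convolution_matrix(h, n):
    """Build the (len(h)+n-1) x n Toeplitz matrix H such that
    H @ x == np.convolve(h, x) for any x of length n ('full' convolution)."""
    m = len(h) + n - 1
    H = np.zeros((m, n))
    for j in range(n):
        # column j holds the PSF shifted down by j samples: H[i, j] = h[i - j]
        H[j:j + len(h), j] = h
    return H

h = np.array([0.25, 0.5, 0.25])      # hypothetical 3-tap PSF
x = np.array([1.0, 0.0, 2.0, -1.0])  # hypothetical original signal
H = convolution_matrix(h, len(x))

print(np.allclose(H @ x, np.convolve(h, x)))  # True
```

In 2D the same idea holds once the image is flattened into a vector (the matrix becomes block-Toeplitz), so in this sketch (1) is simply the matrix form of the convolution model.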

For the estimation, I have to maximize the likelihood, which for Gaussian noise amounts to minimizing the criterion:

##\phi(x) = (H \cdot x - y)^{T} \cdot W \cdot (H \cdot x - y)##

where ##H## is the PSF (in matrix form), ##W## the inverse of the covariance matrix of the data ##y##, and ##y## the observed data; the goal is to find the original signal ##x##.

So the estimator is given by: ##x^{\text{(ML)}} = (H^{T}\cdot W\cdot H)^{-1}\cdot H^{T}\cdot W \cdot y\quad(2)##
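A short derivation of (2), assuming ##w## is zero-mean Gaussian with covariance ##C=W^{-1}## (##W## symmetric positive definite). The likelihood of ##y## given ##x## is

$$p(y\mid x)\;\propto\;\exp\!\left(-\tfrac12\,(H x-y)^{T}\,W\,(H x-y)\right)=\exp\!\left(-\tfrac12\,\phi(x)\right),$$

so maximizing the likelihood is the same as minimizing ##\phi##. Since ##W## is symmetric,

$$\nabla\phi(x)=2\,H^{T}W\,(H x-y),$$

and setting the gradient to zero gives the normal equations

$$H^{T}W H\,x=H^{T}W\,y,$$

which yield (2) whenever ##H^{T}WH## is invertible (for example when ##H## has full column rank).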

For this task, I don't know how to compute this estimator ##x^{\text{(ML)}}## in practice. Is equation (2) correct?
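A minimal sketch of computing (2) in practice, assuming NumPy, a known noise covariance and a small 1D problem: the normal equations ##H^{T}WH\,x=H^{T}W\,y## are solved with a linear solver rather than by forming ##(H^{T}WH)^{-1}## explicitly. The function name `ml_estimate`, the PSF, the signal and the noise level are all hypothetical.

```python
import numpy as np

def ml_estimate(H, W, y):
    """Gaussian ML (weighted least-squares) estimate: solve (H^T W H) x = H^T W y."""
    A = H.T @ W @ H
    b = H.T @ W @ y
    # Solving the linear system is cheaper and more stable than forming the inverse
    return np.linalg.solve(A, b)

rng = np.random.default_rng(0)
h = np.array([0.25, 0.5, 0.25])           # hypothetical PSF samples
x_true = np.array([1.0, 0.0, 2.0, -1.0])  # hypothetical original signal
n = len(x_true)
m = len(h) + n - 1

# Matrix of the 'full' 1D convolution: column j is h shifted down by j samples
H = np.zeros((m, n))
for j in range(n):
    H[j:j + len(h), j] = h

sigma = 1e-3                              # hypothetical noise standard deviation
W = np.eye(m) / sigma**2                  # inverse covariance of white noise
y = H @ x_true + sigma * rng.standard_normal(m)

x_ml = ml_estimate(H, W, y)
print(x_ml)  # close to x_true for this small noise level
```

With white noise, ##W## is a multiple of the identity and cancels out of (2), which then reduces to ordinary least squares (MATLAB's `H\y`). In larger deconvolution problems ##H^{T}WH## can be badly conditioned, so this plain solve may amplify the noise.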

2) Second task: next, I have to find the parameters of the function, with ##\theta=[a,b]## the parameter vector. This should give the best PSF parameters, i.e. the best fit to the data ##y##.

Is this step well formulated?

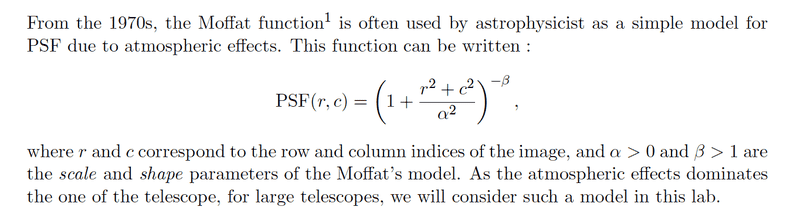

I am working with the following PSF:

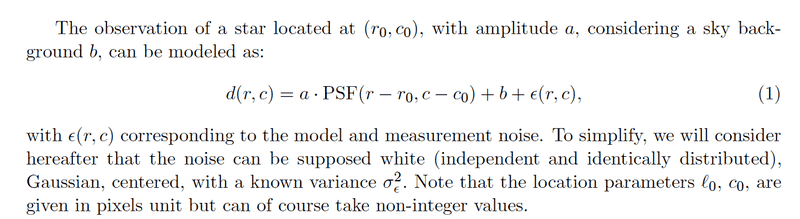

For this second task, I have to find the parameters ##a## and ##b## of:

knowing ##(r_0,c_0)##.

In practice, I used MATLAB's backslash operator `\` to perform a least-squares fit between the noise-free PSF model and the raw data (the noisy PSF).

In this way, I can find the two affine parameters ##a## and ##b## (I think this is actually called linear regression).
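A minimal NumPy sketch of such a fit, under loudly stated assumptions: the model is taken to be affine in the parameters, ##y\approx a\,g+b##, where ##g## is a known noise-free PSF shape centred at ##(r_0,c_0)##. The actual model equation is not reproduced in the post, so the Gaussian shape, its width `s`, the grid size and all numbers below are hypothetical. `np.linalg.lstsq` plays the role of MATLAB's `H\y` for an overdetermined system.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical known PSF shape g(r, c) centred at (r0, c0); a Gaussian profile
# is assumed only for illustration, it is not the model from the question.
r0, c0, s = 8.0, 8.0, 2.0
r, c = np.mgrid[0:16, 0:16]
g = np.exp(-((r - r0)**2 + (c - c0)**2) / (2 * s**2)).ravel()

a_true, b_true = 3.0, 0.5
y = a_true * g + b_true + 0.05 * rng.standard_normal(g.size)  # noisy raw data

# Design matrix H = [g, 1], so that y = H @ theta + w with theta = [a, b]
H = np.column_stack([g, np.ones_like(g)])
theta, *_ = np.linalg.lstsq(H, y, rcond=None)  # least-squares solution, like H\y
a_hat, b_hat = theta
print(a_hat, b_hat)  # estimates close to a_true and b_true
```

Because the model is linear in ##a## and ##b##, no iterative optimizer is needed here; a parameter entering nonlinearly (for instance the width `s`) would require a nonlinear least-squares fit instead.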

I saw that we could take the parameter vector ##\theta=[a,b]## and use the following relation:

##y=H\theta + w\quad (3)##

(with ##H## the response of the system, ##\theta## the vector of parameters to estimate and ##w## white noise). How can I demonstrate this important relation? And what is the difference between ##(1)## and ##(3)##?
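One way to see where (3) comes from, assuming the model is affine in the parameters, ##y_k=a\,g_k+b+w_k## for each pixel ##k=1,\dots,N##, with ##g_k## the known noise-free PSF shape evaluated at pixel ##k## for the given ##(r_0,c_0)## (this form is an assumption, since the model equation is not shown in the post). Stacking all pixels gives

$$\begin{pmatrix} y_1\\ \vdots\\ y_N\end{pmatrix}=\begin{pmatrix} g_1 & 1\\ \vdots & \vdots\\ g_N & 1\end{pmatrix}\begin{pmatrix} a\\ b\end{pmatrix}+\begin{pmatrix} w_1\\ \vdots\\ w_N\end{pmatrix},\qquad\text{i.e.}\qquad y=H\theta+w.$$

Under this assumption, (1) and (3) are the same linear-Gaussian model, so (2) applies to both with ##x## replaced by ##\theta##. What changes is the meaning and size of ##H##: in (1) it is the convolution matrix built from the PSF and the unknown is the whole signal, while in (3) it is an ##N\times 2## design matrix and there are only two unknowns.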

Finally, I would welcome remarks or help on the differences between the two tasks and on the right method to apply to each.

Regards
