Math Amateur


I am reading Gerard Walschap's book: "Multivariable Calculus and Differential Geometry" and am focused on Chapter 1: Euclidean Space ... ...

I need help with an aspect of the proof of Theorem 1.3.1 ...

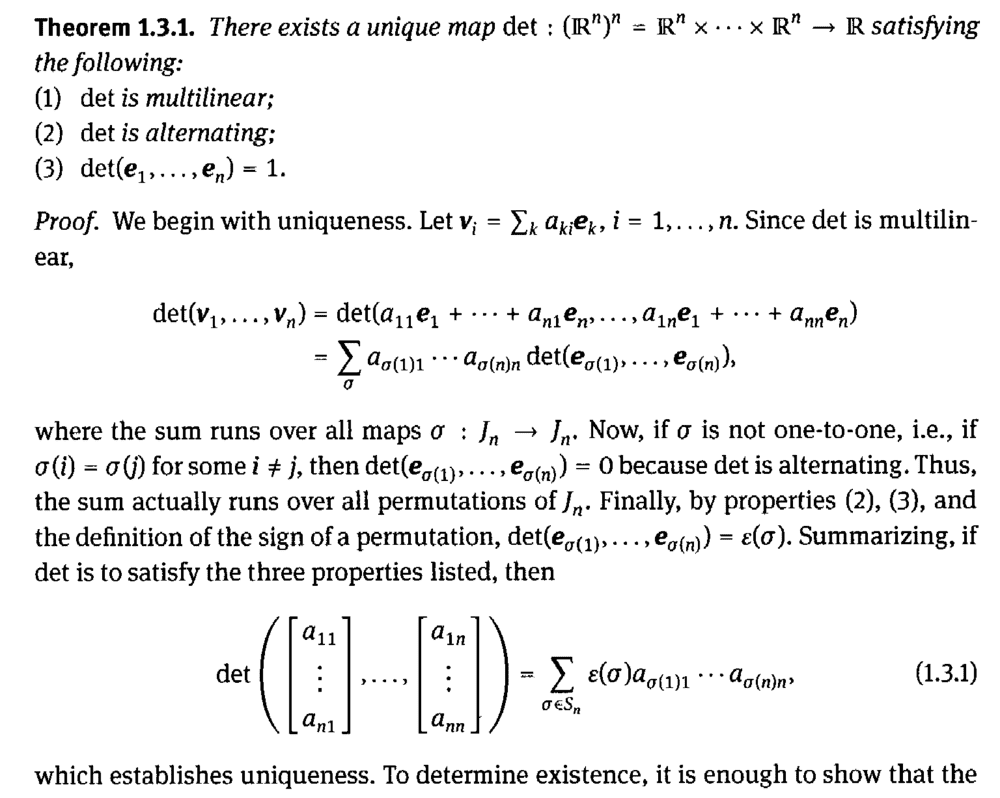

The start of Theorem 1.3.1 and its proof read as follows:

I tried to understand how/why

##\text{det} ( v_1, \ldots , v_n ) = \sum_{ \sigma } a_{ \sigma (1) 1 } \cdots a_{ \sigma (n) n } \ \text{det} ( e_{ \sigma (1) } , \ldots , e_{ \sigma (n) } )##

where the sum runs over all maps ##\sigma : J_n \to J_n##, with ##J_n = \{ 1 , \ldots , n \}##.
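As a numerical sanity check of this expansion, here is a minimal Python sketch. It assumes the coefficients are stored as a list of rows `a`, with `a[k][j]` standing for ##a_{kj}## (indices shifted to start at 0), so that ##v_j## is column ##j## of the matrix. It sums over all ##n^n## maps ##\sigma : J_n \to J_n## produced by `itertools.product`, and evaluates ##\text{det}(e_{\sigma(1)}, \ldots, e_{\sigma(n)})## with the hypothetical helper `det_of_basis`. That helper relies on two standard properties of det rather than on anything specific to the book: the value is ##0## when ##\sigma## repeats an index (det is alternating), and ##\operatorname{sgn} \sigma## when ##\sigma## is a permutation (normalization ##\text{det}(e_1, \ldots, e_n) = 1##). The result is compared with an ordinary cofactor expansion.

```python
from itertools import product


def det_of_basis(sigma):
    """Value of det(e_sigma(1), ..., e_sigma(n)) for a map sigma given as a tuple.

    Assumes the standard facts: 0 if sigma is not injective (a repeated
    basis vector makes the alternating form vanish), otherwise the sign
    of the permutation, computed by counting inversions.
    """
    if len(set(sigma)) < len(sigma):
        return 0
    inversions = sum(
        1
        for i in range(len(sigma))
        for j in range(i + 1, len(sigma))
        if sigma[i] > sigma[j]
    )
    return -1 if inversions % 2 else 1


def det_by_all_maps(a):
    """Sum over ALL maps sigma of a_{sigma(1)1} ... a_{sigma(n)n} * det(e_sigma(1), ..., e_sigma(n))."""
    n = len(a)
    total = 0
    for sigma in product(range(n), repeat=n):  # n**n maps, not just the n! permutations
        coeff = 1
        for j in range(n):
            coeff *= a[sigma[j]][j]  # a_{sigma(j) j}: row sigma(j), column j
        total += coeff * det_of_basis(sigma)
    return total


def det_by_cofactors(a):
    """Reference value: cofactor expansion along the first row."""
    n = len(a)
    if n == 1:
        return a[0][0]
    total = 0
    for j in range(n):
        minor = [row[:j] + row[j + 1:] for row in a[1:]]
        total += (-1) ** j * a[0][j] * det_by_cofactors(minor)
    return total


a = [[2, -1, 0], [1, 3, 4], [5, 0, 6]]
print(det_by_all_maps(a), det_by_cofactors(a))  # prints: 22 22
```

Enumerating all ##n^n## maps is deliberately naive (27 maps for ##n = 3##, of which only ##3! = 6## are permutations); the point is to mirror the formula term by term, not to be efficient.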

... so ...

... I tried an example with ##n = 2##, that is, with ##\text{det} : (\mathbb{R}^2)^2 \to \mathbb{R}## ...

so we have ##v_1 = \sum_k a_{ k1 } e_k = a_{11} e_1 + a_{21} e_2##

and

##v_2 = \sum_k a_{ k2 } e_k = a_{12} e_1 + a_{22} e_2##

and then we have

##\text{det} ( v_1, v_2 )##

##= \text{det} ( a_{11} e_1 + a_{21} e_2 , \ a_{12} e_1 + a_{22} e_2 )##

##= a_{11} a_{12} \ \text{det} ( e_1, e_1 ) + a_{11} a_{22} \ \text{det} ( e_1, e_2 ) + a_{21} a_{12} \ \text{det} ( e_2, e_1 ) + a_{21} a_{22} \ \text{det} ( e_2, e_2 )##

##= \sum_{ \sigma } a_{ \sigma (1) 1 } a_{ \sigma (2) 2 } \ \text{det} ( e_{ \sigma (1) }, e_{ \sigma (2) } )##

where the sum runs over all maps

##\sigma : J_2 = \{ 1, 2 \} \to J_2 = \{ 1 , 2 \}## ... that is, the sum runs over the two permutations

##\sigma_1 = \begin{bmatrix} 1 & 2 \\ 1 & 2 \end{bmatrix}## and ##\sigma_2 = \begin{bmatrix} 1 & 2 \\ 2 & 1 \end{bmatrix}##.

BUT how does the formula ##\sum_{ \sigma } a_{ \sigma (1) 1 } a_{ \sigma (2) 2 } \ \text{det} ( e_{ \sigma (1) }, e_{ \sigma (2) } )## incorporate or deal with the terms involving ##\text{det} ( e_1, e_1 )## and ##\text{det} ( e_2, e_2 )##?

Hope someone can help ...
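To see exactly which terms the formula produces when ##n = 2##, the maps ##\sigma : J_2 \to J_2## can simply be listed. The following Python sketch (the helper name `expansion_terms` is hypothetical, chosen for illustration) enumerates every such map, not only the bijections, and builds the term ##a_{\sigma(1)1} a_{\sigma(2)2} \, \text{det}(e_{\sigma(1)}, e_{\sigma(2)})## that each one contributes, as a string.

```python
from itertools import product


def expansion_terms(n):
    """Return (sigma, term, injective) for every map sigma: {1, ..., n} -> {1, ..., n}.

    The term is the string a_{sigma(1)1} ... a_{sigma(n)n} det(e_sigma(1), ..., e_sigma(n)).
    Index labels are plain digit concatenations, so this is only meant for n <= 9.
    """
    terms = []
    for sigma in product(range(1, n + 1), repeat=n):  # all n**n maps, in lexicographic order
        coeffs = " ".join(f"a_{sigma[j]}{j + 1}" for j in range(n))
        vectors = ", ".join(f"e_{s}" for s in sigma)
        injective = len(set(sigma)) == n  # True exactly when sigma is a permutation
        terms.append((sigma, f"{coeffs} det({vectors})", injective))
    return terms


for sigma, term, injective in expansion_terms(2):
    label = "permutation" if injective else "repeated index"
    print(sigma, term, f"[{label}]")
```

It prints one line per map ##(\sigma(1), \sigma(2))##, four in all, and these reproduce the four terms of the expansion of ##\text{det}(v_1, v_2)## in the order written above. The two maps labelled as repeating an index are the constant maps, and they are the ones that produce ##\text{det}(e_1, e_1)## and ##\text{det}(e_2, e_2)##; since det is alternating, both of those determinants are ##0##.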

Peter
