EddiePhys

I've always thought of integration as a way to solve differential equations. I'd solve physics problems involving calculus by finding the change df in the function f(x) when I increment the independent variable x by an infinitesimal amount dx, attaching some physical significance to df = f(x+dx) - f(x), and writing a differential equation relating df and dx. This would give me f'(x), which I would integrate with respect to x to get the function I was looking for.

I want to know why I can also look at integration as a summing up of infinitesimal elements.

For example, if I want to find the mass of a ring of mass M (redundant, I know), I can do that by "summing up" infinitesimal elements of mass dm, i.e. \int_0^M dm, or by summing up \rho R d\theta from 0 to 2\pi, i.e. \int_0^{2\pi} \rho R d\theta.

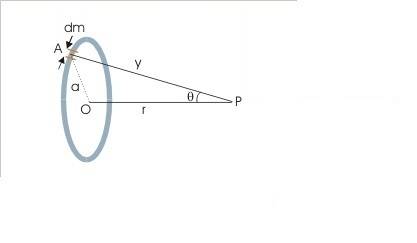
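To make the "summing up" picture concrete, here is a minimal numerical sketch of the ring-mass sum. It assumes the ring is cut into n equal angular pieces, each of mass dm = \rho R d\theta; the function name `ring_mass_by_sum`, the numerical values, and the non-uniform density `varying` are all hypothetical choices made only for illustration.

```python
import math

def ring_mass_by_sum(density, R, n):
    """Add up n small masses dm = density(theta) * R * dtheta around the ring."""
    dtheta = 2 * math.pi / n
    total = 0.0
    for k in range(n):
        theta = k * dtheta  # angle at the start of the k-th piece
        total += density(theta) * R * dtheta
    return total

R = 1.0     # ring radius (illustrative value)
rho0 = 1.0  # density scale (illustrative value)

# Uniform ring: every piece has the same mass, so the sum is 2*pi*rho0*R.
uniform_mass = ring_mass_by_sum(lambda theta: rho0, R, 1000)

# Hypothetical non-uniform ring: density grows linearly with angle.
# The exact integral of rho0 * (1 + theta / (2*pi)) * R over [0, 2*pi]
# is 3*pi*rho0*R.
def varying(theta):
    return rho0 * (1 + theta / (2 * math.pi))

exact = 3 * math.pi * rho0 * R
for n in (10, 100, 1000):
    approx = ring_mass_by_sum(varying, R, n)
    print(n, approx, abs(approx - exact))
```

In this sketch the finite sum approaches the exact integral as n grows, which is one way to read the integral as a limit of sums. It also suggests an answer to "which curve": the sum is the area of thin rectangles under the graph of \rho(\theta) R plotted against \theta.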

Or if I want to find the total gravitational potential due to a ring:

I could write the small potential dV for an element dm and sum up these dV's using integration to give me the total V due to the ring.
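The same idea can be sketched numerically for the potential. This is only an illustration under stated assumptions: the ring is uniform, lies in the xy-plane centred on the origin, and each element contributes dV = -G dm / r, where r is its distance to the field point. The function name `ring_potential` and the numerical values are hypothetical.

```python
import math

G = 6.674e-11  # gravitational constant, SI units

def ring_potential(M, R, point, n):
    """Sum dV = -G * dm / r over n equal mass elements of a uniform ring."""
    px, py, pz = point
    dm = M / n
    dtheta = 2 * math.pi / n
    V = 0.0
    for k in range(n):
        theta = (k + 0.5) * dtheta  # angle at the middle of the k-th element
        ex, ey = R * math.cos(theta), R * math.sin(theta)
        r = math.sqrt((px - ex) ** 2 + (py - ey) ** 2 + pz ** 2)
        V += -G * dm / r
    return V

# Check against the known on-axis result V = -G*M / sqrt(R^2 + z^2).
M, R, z = 5.0, 2.0, 1.5  # illustrative values
V_sum = ring_potential(M, R, (0.0, 0.0, z), 1000)
V_exact = -G * M / math.sqrt(R ** 2 + z ** 2)
print(V_sum, V_exact)
```

For a point on the axis every element is at the same distance, so the sum reproduces the closed-form value for any n; for an off-axis point the same loop gives an approximation that should improve as n increases.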

But I want to know why this works/makes sense, mathematically.

I think of integration either as a way to solve differential equations or as the area under a curve, and I don't understand why the area under a curve (and which curve?) would represent the total potential V or the mass M.
