sophiecentaur said:

Where would you expect to find "linear data"?

All astrophotos start as linear. The analog-to-digital unit (ADU) value of a pixel is a linear function of the number of photons striking that pixel, up to the point of saturation at the high end, and down to the quantization and noise at the low end. That means when you plot ADU vs. photon count, there's a long section in the middle that's a straight line, i.e., linear.
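
To make that concrete, here's a minimal sketch of an idealized sensor response. The `gain`, `offset`, and `bit_depth` values are made-up numbers for illustration, not the specs of any real camera, and noise is left out entirely.

```python
def photons_to_adu(photons, gain=0.5, offset=100, bit_depth=16):
    """Idealized sensor: linear in photon count, quantized, clipped at saturation.

    gain (ADU per photon) and offset (bias level) are hypothetical values.
    """
    max_adu = 2 ** bit_depth - 1
    adu = round(gain * photons) + offset   # linear response plus a fixed bias
    return min(max(adu, 0), max_adu)       # saturation clips the top end

# Equal steps in photon count give equal steps in ADU until saturation.
for n in (0, 1_000, 2_000, 4_000, 200_000):
    print(n, photons_to_adu(n))
```

Below saturation, doubling the photons above the bias level doubles the ADU above the bias level. The last value is far past saturation, so it just pins at the maximum.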

This linear relationship is sacrificed in processing, when the nonlinear "stretch" is applied, not to mention other nonlinear processing such as curves and contrast adjustments. Pretty much every JWST deep-sky image published to the media has a nonlinear stretch applied (in addition to other nonlinear operations); otherwise it wouldn't look like much of anything.

The characteristics of this stretch and curves are chosen subjectively, usually decided by the artistic whims of the person processing the data. And it is these artistic choices that cause dimmer stars to appear point-like and brighter stars to have spikes. Had the person made different artistic choices when processing the data, it would change how many stars have visible artifacts and how many do not.
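
Here's a rough sketch of that effect. It assumes, purely for illustration, that a spike is 1/1000 as bright as the star's peak (`SPIKE_FRACTION` is a made-up number) and uses an asinh stretch as a stand-in for whatever stretch the processor actually chose. The `softening` parameter is likewise hypothetical.

```python
import math

def linear_display(adu, white=65535):
    """Map ADU to an 8-bit display value with no stretch."""
    return round(255 * min(adu, white) / white)

def asinh_stretch(adu, white=65535, softening=50.0):
    """One possible nonlinear stretch: asinh lifts faint values far more than bright ones."""
    x = min(adu, white)
    return round(255 * math.asinh(x / softening) / math.asinh(white / softening))

SPIKE_FRACTION = 1e-3  # assumed spike brightness relative to the peak, same for every star

for peak in (60_000, 600):            # a bright star and a star 100x dimmer
    spike = peak * SPIKE_FRACTION     # the spike scales linearly with the star
    print(peak, linear_display(spike), asinh_stretch(spike))
```

In the linear data the spike-to-peak ratio is identical for both stars. Whether a given spike ends up above the display's black level depends on the stretch: change `softening` and a different set of stars will show spikes.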

But it all starts as linear (before the artistic, nonlinear stretch). Even at the dimmer end of the curve, things can still be treated as having a linear relationship with noise added on top. I.e., the relationship between actual signal and ADU is still linear even in the dimmer regions, in that sense.

sophiecentaur said:

As you point out, using half power radius is a useful tool because half power allows guiding with dim stars but that's not the same as claiming that spikes (which actually go on for ever, theoretically) will all be visible for all stars. Your data near the peak can be considered to be linear but it eventually ends up being non-linear

No. Even if the way that is worded isn't incorrect (which it might be), it's at least misleading.

The characteristics of the diffraction patterns, including the spikes, are not a nonlinear function of intensity. The relationship is linear, all the way down. (And even if we take sensor imperfections into consideration, it's still mostly linear, all the way down.)

Yes, of course there are limitations of the sensor. But that's not the point. We're talking about characteristics of the diffraction pattern (including the spikes) before they even strike the sensor.

The diffraction pattern itself is not a function of intensity (including the spikes). The shape and size of the pattern are independent of the number of photons reaching the detector. The only thing that changes as you increase the number of photons is the number of photons. That's it.

-----------

Let me explain it another way.

I'm sure you are familiar with the double slit experiment. That's the experiment where light passes through a pair of slits and an interference pattern is formed on the backdrop.

As I'm sure you know, the interference pattern is the same regardless of the photon rate. A moderately bright light source will produce the same pattern as the pattern produced if the photons were sent one at a time. Sending more photons only has the effect of more photons; the pattern itself doesn't change, the only difference is the number of photons.

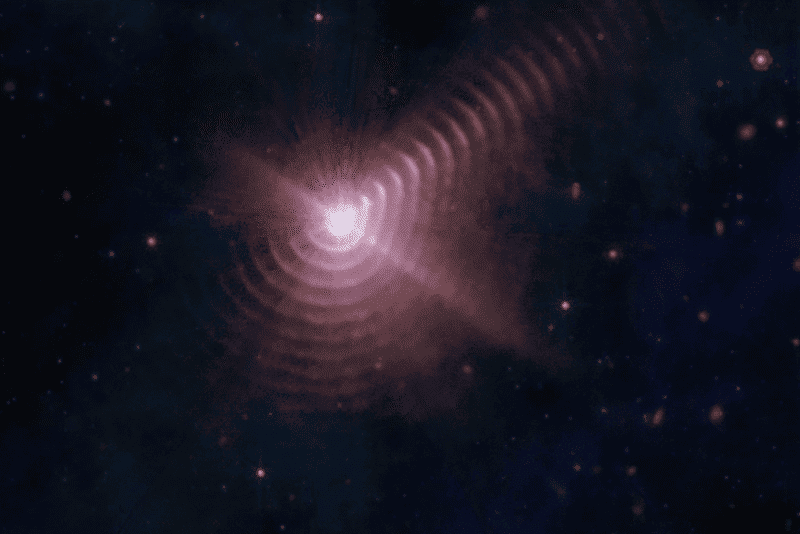

JWST's optics (or the optics of any telescope, for that matter) work the same way. But instead of just having a pair of slits, you have mirrors, struts, hexagonal patterns in the primary mirror, and other obstructions. Taken together, when photons from a light source pass through these optics they too will form an interference pattern when striking the sensor.

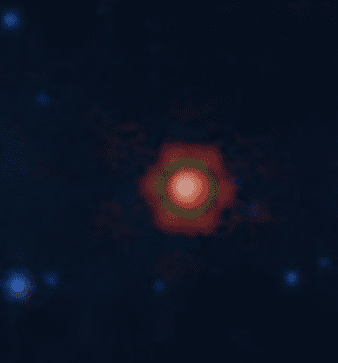

And that interference pattern is the pattern we see, with the comparatively bright central spot, the somewhat dimmer hexagonal pattern surrounding it, and even the "diffraction spikes." These are all part of the same pattern that every last photon is subject to as it passes through JWST's optics.

One difference from the double slit experiment that should be noted is that in the case of JWST, photons can come from slightly different directions (not all light sources are along the central axis), which shifts the corresponding interference pattern to different parts of the sensor, depending on the direction of the photon source.

But identical to the double slit experiment, photons from a given light source have the same interference pattern regardless of the photon rate. It doesn't matter if many photons pass through in quick succession or pass through slowly, one at a time. The pattern on the sensor is the same.

Claiming that characteristics of the diffraction pattern, including spikes, other than mere photon count, are a function of the star's brightness is simply false. It's akin to saying the interference pattern of the double slit experiment is a function of the photon rate. It's not. The claim is false.
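
If you'd like to check this numerically, here's a minimal sketch using the textbook Fraunhofer formula for a double slit. The wavelength, slit separation, and slit width are arbitrary illustrative values, and `rate` stands in for the source brightness. This is the simple two-slit case, not a model of JWST's actual aperture.

```python
import math

def double_slit_intensity(theta, rate, wavelength=500e-9,
                          slit_sep=50e-6, slit_width=10e-6):
    """Expected photon arrival rate at angle theta for an ideal double slit.

    All geometric parameters are hypothetical example values.
    """
    s = math.sin(theta)
    beta = math.pi * slit_width * s / wavelength   # single-slit envelope phase
    delta = math.pi * slit_sep * s / wavelength    # two-slit interference phase
    envelope = 1.0 if beta == 0 else (math.sin(beta) / beta) ** 2
    return rate * envelope * math.cos(delta) ** 2

def normalized_pattern(rate, angles):
    """Pattern shape with the overall brightness divided out."""
    values = [double_slit_intensity(t, rate) for t in angles]
    peak = max(values)
    return [v / peak for v in values]

angles = [i * 1e-4 for i in range(-300, 301)]      # radians, centered on the axis
dim = normalized_pattern(1.0, angles)              # faint source
bright = normalized_pattern(1e6, angles)           # a million times brighter

# Largest difference between the two shapes: zero up to floating-point rounding.
print(max(abs(a - b) for a, b in zip(dim, bright)))
```

The source brightness only multiplies the whole curve. Once you divide it out, the dim and bright patterns are the same shape, fringes and all.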

sophiecentaur said:

The bit depth of the cameras appears to be 16, which corresponds to a maximum relative magnitude of about 12, at which point the artefacts will disappear. That implies a star that is exposed at the limit will only display artefacts down to a magnitude of 12, relative to the correctly exposed star.

'Seeing' is excellent at a Lagrange point but scatter (artefacts, if you like) from nearby bright stars can limit how far down you can go and I suspect that that figure of 12 for relative magnitude could be optimistic. Signal to noise ratio can be eaten into by 'interference' / cross talk.

Signal processing can be a big help but that will take us away from a linear situation and could suppress nearby faint stars further.

You lost me here.

- Seeing is caused by the atmosphere, and JWST is not subject to seeing since it is outside the atmosphere.

- Scattering? Are you suggesting light from far away stars is bouncing off nearby stars? I'm not sure what you mean here.

- Interference and crosstalk? You really lost me on that. In conventional communication systems interference and crosstalk can be caused by nonlinear components in the system, but for JWST, the signal is completely linear all the way to the sensor, and then still mostly linear (saturation being an exception) up until the point of the artistic "stretch" -- the stretch that's performed before the image is posted to the media. But before that, everything is linear.