Greg Bernhardt submitted a new PF Insights post

How to Better Define Information in Physics

Continue reading the Original PF Insights Post.

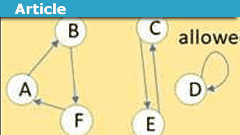
