Angella

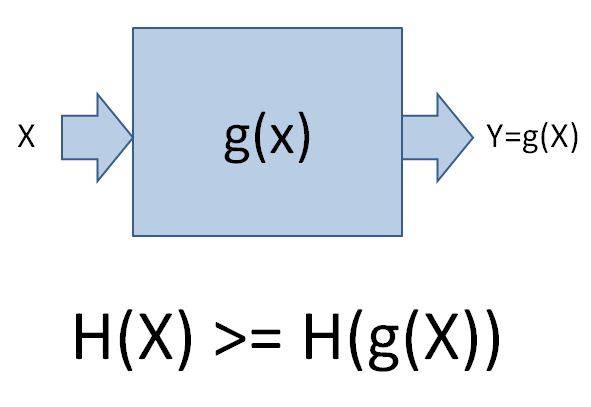

We know from information theory that the entropy of a function of a random variable X is less than or equal to the entropy of X, i.e. H(f(X)) ≤ H(X).
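For reference, the inequality follows from the chain rule: since f(X) is determined by X, H(X) = H(X, f(X)) = H(f(X)) + H(X | f(X)) ≥ H(f(X)), with equality when f is injective on the support of X. The following is a minimal numerical sketch of that statement, assuming a discrete X given as a dict of outcome probabilities; the helper names `entropy` and `pushforward` and the example distribution are hypothetical, chosen only for illustration.

```python
from collections import Counter
from math import log2


def entropy(dist):
    """Shannon entropy in bits of a dict mapping outcome -> probability."""
    return -sum(p * log2(p) for p in dist.values() if p > 0)


def pushforward(dist, f):
    """Distribution of f(X), obtained by summing the probabilities of all x with the same f(x)."""
    out = Counter()
    for x, p in dist.items():
        out[f(x)] += p
    return dict(out)


# Hypothetical example: X uniform on {0, 1, 2, 3}
px = {x: 0.25 for x in range(4)}

# A non-injective f merges outcomes (0 and 2 -> 0, 1 and 3 -> 1), so entropy drops
f = lambda x: x % 2

h_x = entropy(px)                   # 2 bits
h_fx = entropy(pushforward(px, f))  # 1 bit
print(h_x, h_fx)
```

Replacing `f` with an injective map such as `lambda x: x + 10` leaves the distribution's shape unchanged, so H(f(X)) equals H(X) in that case.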

Does this break the second law of thermodynamics?