kubaanglin

Hello Physics Forums,

After about one year of research and construction, I have nearly finished building a functioning inertial electrostatic confinement fusion reactor. Just to be clear, I do not wish to discuss the dangerous activities that are involved with my project as I know such topics are prohibited on this forum. My question is purely related to a recent leak test I recorded.

For reference, here is my reactor generating a 7 kV plasma at around 60 microns of mercury:

Here is the plasma at a lower pressure of 10 microns of mercury:

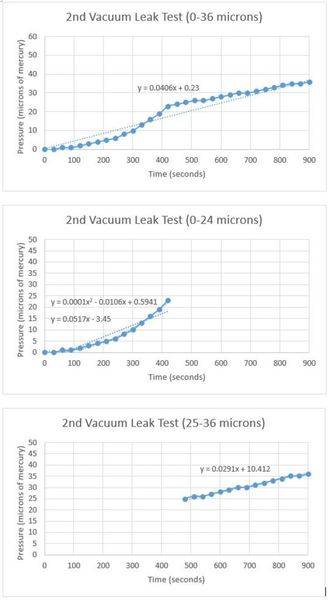

For the leak test, I first evacuated the entire vacuum system using only the mechanical pump until the gauge read 0 microns of mercury. The reading came from an electronic gauge that is accurate to within one micron. Then I closed the gate valve and started my stopwatch. As air leaked back into the main chamber, I recorded the pressure every 30 seconds. Here is the data I recorded:

I have repeated this test multiple times, and the results are consistent. When the pressure is below 25 microns, the leak rate follows a quadratic curve. When the pressure in the chamber is above 25 microns, the leak rate suddenly slows and becomes constant. Could 25 microns be the transition point between free molecular flow and viscous flow within my reactor? I asked my AP Physics teacher, but he was not certain this was the cause. I don't think outgassing is responsible, although I can't rule it out.
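To make the flow-regime question easier to check, here is a minimal Python sketch that estimates the mean free path of air at a given pressure, the resulting Knudsen number, and the pressure rise rate between successive 30-second readings. Everything in it is an assumption for illustration and not a measurement from my system: the function names, the effective molecular diameter for air (about 3.7e-10 m), room temperature (293 K), and the characteristic length, which should be the narrowest dimension the gas actually flows through.

```python
import math

K_B = 1.380649e-23        # Boltzmann constant, J/K
PA_PER_MICRON = 0.133322  # 1 micron of mercury (1 mTorr) expressed in pascals


def mean_free_path_m(pressure_microns, temp_k=293.0, molecule_diameter_m=3.7e-10):
    """Kinetic-theory mean free path: lambda = k_B*T / (sqrt(2)*pi*d^2*P).

    The default diameter is an assumed effective value for air.
    """
    pressure_pa = pressure_microns * PA_PER_MICRON
    return K_B * temp_k / (
        math.sqrt(2) * math.pi * molecule_diameter_m ** 2 * pressure_pa
    )


def knudsen_number(pressure_microns, char_length_m, temp_k=293.0):
    """Kn = mean free path / characteristic length of the flow path.

    Rough textbook guide (exact cutoffs vary by source): Kn well below
    0.01 is viscous flow, Kn above roughly 0.5 to 1 is molecular flow,
    and anything in between is transitional.
    """
    return mean_free_path_m(pressure_microns, temp_k) / char_length_m


def rate_of_rise(pressures_microns, dt_s=30.0):
    """Finite-difference pressure rise rate (microns per second)
    between consecutive readings taken dt_s seconds apart."""
    return [
        (later - earlier) / dt_s
        for earlier, later in zip(pressures_microns, pressures_microns[1:])
    ]


if __name__ == "__main__":
    # 0.01 m is a hypothetical characteristic length, not a measured one.
    print(mean_free_path_m(25.0))
    print(knudsen_number(25.0, char_length_m=0.01))
```

With these assumed values, the mean free path at 25 microns works out to roughly 2 mm, so whether that pressure sits near a regime change depends heavily on which length scale is used. Passing the recorded readings to `rate_of_rise` would show directly where the rise rate stops changing and becomes constant.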

I would greatly appreciate any ideas that might explain this data.

I wasn't sure which "thread level" to tag this thread with, so I chose "high school" since I am a high school student. If this is wrong, please let me know.

Thanks,

Kuba
