Simon666

Hi, I've gotten the conjugate gradient method to work for solving my matrix equation:

http://en.wikipedia.org/wiki/Conjugate_gradient_method
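For reference, here is a minimal sketch of the unpreconditioned CG iteration as written on the linked Wikipedia page. It is useful as a baseline when comparing against a preconditioned run. It assumes A is symmetric positive definite and stored as a dense list of lists. The names `dot`, `matvec` and `conjugate_gradient`, and the default tolerance and iteration cap, are illustrative choices, not taken from the original post.

```python
def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def matvec(A, x):
    """Dense matrix-vector product A @ x for a list-of-lists matrix."""
    return [dot(row, x) for row in A]


def conjugate_gradient(A, b, tol=1e-10, max_iter=None):
    """Plain CG for symmetric positive definite A, starting from x0 = 0."""
    n = len(b)
    x = [0.0] * n
    r = [bi - axi for bi, axi in zip(b, matvec(A, x))]  # r0 = b - A x0
    p = r[:]                                            # p0 = r0
    rs_old = dot(r, r)
    for _ in range(max_iter or 10 * n):
        if rs_old ** 0.5 < tol:                         # residual small enough
            break
        Ap = matvec(A, p)
        alpha = rs_old / dot(p, Ap)                     # step length along p
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        rs_new = dot(r, r)
        beta = rs_new / rs_old                          # keeps p A-conjugate
        p = [ri + beta * pi for ri, pi in zip(r, p)]
        rs_old = rs_new
    return x


A = [[4.0, 1.0], [1.0, 3.0]]
print(conjugate_gradient(A, [1.0, 2.0]))  # approximately [1/11, 7/11]
```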

Right now I'm experimenting with the preconditioned version of it. For a certain preconditioner, however, I'm finding that

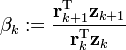

is zero, so no proper update happens and the residual is no longer reduced. Any idea what this means, and what the best search direction (the new p(k+1)) would then be?
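Below is a minimal sketch of the preconditioned iteration from the same Wikipedia page, with an explicit check for the stall described above. It assumes the vanishing quantity is r_k^T z_k with z_k = M^{-1} r_k, the numerator of alpha_k; that is a guess, since only the image shows the actual expression. If M is symmetric positive definite, then r^T M^{-1} r > 0 for every nonzero r. So r^T z = 0 while r is still nonzero means the chosen M^{-1} is not positive definite, and the assumptions behind the PCG update no longer hold. The names `preconditioned_cg` and `apply_minv`, the relative breakdown threshold, and raising `ArithmeticError` are hypothetical illustrative choices.

```python
def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def matvec(A, x):
    """Dense matrix-vector product A @ x for a list-of-lists matrix."""
    return [dot(row, x) for row in A]


def preconditioned_cg(A, b, apply_minv, tol=1e-10, max_iter=None):
    """PCG from x0 = 0. apply_minv(r) must return z = M^{-1} r.

    The method assumes both A and M are symmetric positive definite.
    """
    n = len(b)
    x = [0.0] * n
    r = [bi - axi for bi, axi in zip(b, matvec(A, x))]  # r0 = b - A x0
    z = apply_minv(r)                                   # z0 = M^{-1} r0
    p = z[:]                                            # p0 = z0
    rz_old = dot(r, z)
    for _ in range(max_iter or 10 * n):
        rr = dot(r, r)
        if rr ** 0.5 < tol:                             # converged
            break
        # Hypothetical breakdown test: r != 0 but r^T M^{-1} r is (numerically) 0.
        if abs(rz_old) <= 1e-14 * rr:
            raise ArithmeticError(
                "PCG breakdown: r^T z = 0 with r != 0, so M^{-1} is not "
                "positive definite")
        Ap = matvec(A, p)
        alpha = rz_old / dot(p, Ap)                     # alpha_k = r^T z / p^T A p
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        z = apply_minv(r)                               # z_{k+1} = M^{-1} r_{k+1}
        rz_new = dot(r, z)
        beta = rz_new / rz_old                          # beta_k
        p = [zi + beta * pi for zi, pi in zip(z, p)]    # p_{k+1} = z_{k+1} + beta_k p_k
        rz_old = rz_new
    return x


A = [[4.0, 1.0], [1.0, 3.0]]
jacobi = lambda r: [ri / A[i][i] for i, ri in enumerate(r)]  # M = diag(A), SPD here
print(preconditioned_cg(A, [1.0, 2.0], jacobi))  # approximately [1/11, 7/11]
```

A Jacobi (diagonal) preconditioner keeps M symmetric positive definite whenever A has a positive diagonal. By contrast, a symmetric but indefinite choice such as `lambda r: [r[0], -r[1]]` gives r^T z = 1 - 1 = 0 on the very first step for b = [1, 1], which is exactly the kind of stall in the question.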
