weezy

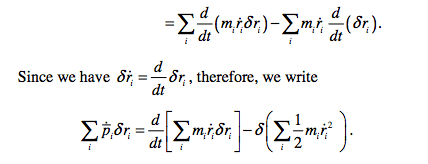

This link shows how to derive Hamilton's generalised principle starting from D'Alembert's principle. While I had no trouble following the rest of the derivation, I am stuck on one particular step.

I can't justify why ##\frac{d}{dt} \delta r_i = \delta \left[\frac{d}{dt} r_i\right]##. If I interpret ##\delta \dot r_i## as the spatial variation in a particle's velocity when I shift my origin while keeping time fixed, doesn't the velocity stay the same, i.e. isn't ##\delta \dot r_i = 0##?
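A worked version of the step, as a sketch under an assumption the linked derivation may phrase differently: the varied path is taken to be the one-parameter family ##r_i(t) + \epsilon\,\eta_i(t)##, where ##\eta_i## is an arbitrary smooth function vanishing at the endpoints and ##\delta## compares the varied and actual paths at the same instant ##t##. Then

$$\delta r_i(t) = \epsilon\,\eta_i(t), \qquad \delta \dot r_i(t) = \frac{d}{dt}\big(r_i + \epsilon\,\eta_i\big) - \frac{d r_i}{dt} = \epsilon\,\dot\eta_i(t) = \frac{d}{dt}\big(\epsilon\,\eta_i(t)\big) = \frac{d}{dt}\,\delta r_i(t)$$

On this reading ##\delta## compares two different paths at the same time rather than shifting the origin, so ##\delta \dot r_i## is generally non-zero; it vanishes only where ##\eta_i## is constant in time.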

Also, if I assume that ##\delta r_i## is constant throughout a large section of the path, don't I get ##\frac{d}{dt} \delta r_i = 0##?

Is this quantity supposed to be non-zero, or are we simply playing with zeros here?
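A quick numerical sanity check of ##\frac{d}{dt}\delta r = \delta \dot r##. This is a sketch under assumptions not in the original: a hypothetical 1-D free-fall path ##r(t)##, a variation ##\delta r(t) = \epsilon\,\eta(t)## taken at fixed ##t##, and derivatives approximated by central finite differences. It compares the two sides for a constant ##\eta## (the constant-##\delta r## case asked about above), where both come out zero, and for ##\eta(t) = \sin(\pi t)##, which vanishes at ##t = 0## and ##t = 1##, where both come out equal and non-zero.

```python
import math


def central_diff(f, t, h=1e-5):
    """Approximate df/dt at t with a central finite difference."""
    return (f(t + h) - f(t - h)) / (2 * h)


def check_commutation(r, eta, eps, t):
    """Return (d/dt of delta r, delta of dr/dt) at time t.

    Assumption: the varied path is r(s) + eps*eta(s), compared with
    the actual path r(s) at the same instant s (time is not varied).
    """
    def varied(s):
        return r(s) + eps * eta(s)

    def delta_r(s):
        # delta r(s) = varied(s) - r(s), i.e. eps*eta(s)
        return varied(s) - r(s)

    d_dt_delta_r = central_diff(delta_r, t)                  # d/dt (delta r)
    delta_v = central_diff(varied, t) - central_diff(r, t)   # delta (dr/dt)
    return d_dt_delta_r, delta_v


def r(t):
    # Hypothetical actual path: free fall from rest, g = 9.81
    return 0.5 * 9.81 * t ** 2


def eta_const(t):
    # Constant variation shape (a rigid shift)
    return 1.0


def eta_sin(t):
    # Variation shape that vanishes at t = 0 and t = 1
    return math.sin(math.pi * t)


eps = 1e-3

# Case 1: constant eta, both sides should be (numerically) zero.
lhs_c, rhs_c = check_commutation(r, eta_const, eps, t=0.7)

# Case 2: time-dependent eta, both sides should equal eps * eta'(t).
lhs_s, rhs_s = check_commutation(r, eta_sin, eps, t=0.3)
expected_s = eps * math.pi * math.cos(math.pi * 0.3)

print(lhs_c, rhs_c)
print(lhs_s, rhs_s, expected_s)
```

In both cases the two orders of operation agree; whether the common value is zero depends only on whether ##\eta## changes with time.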

Edit: If I treat ##\delta## as an operator acting on ##r##, I don't see a problem, since in quantum mechanics we freely interchange the order of commuting operators. Can we say the same here?