kostoglotov

The exercise is: (b) describe all the subspaces of D, the space of all 2x2 diagonal matrices.

Initially I would have said just I and Z, since you can't do much more to simplify a diagonal matrix.

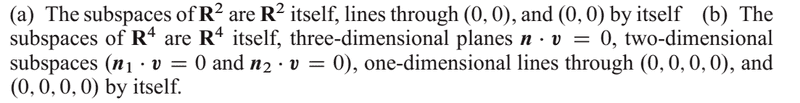

The given answer is here; the relevant part is (b):

Imgur link: http://i.imgur.com/DKwt8cN.png

I cannot understand how D is R^4, let alone the rest of the answer. I kind of get why there'd be orthogonal subspaces in that case, since it's diagonal...but that's just grasping at straws.

I can see how we might take the columns of D and form linear combinations of them, but those column vectors are in R^2.
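To make the "entries as a vector" idea concrete, here is a minimal sketch. It is not taken from the book's answer. It assumes each 2x2 matrix is treated as a single vector made of its four entries, rather than as a pair of columns in R^2. The helper names (`flatten`, `diag`, `add`, `scale`, `is_diagonal`) are made up for illustration.

```python
# Matrices are plain nested lists [[a, b], [c, d]]; all helper names are hypothetical.

def flatten(m):
    """Read a 2x2 matrix as a vector of its four entries."""
    return [m[0][0], m[0][1], m[1][0], m[1][1]]

def diag(a, d):
    """Build the diagonal matrix with entries a and d."""
    return [[a, 0], [0, d]]

def add(m, n):
    """Entrywise sum of two 2x2 matrices."""
    return [[m[i][j] + n[i][j] for j in range(2)] for i in range(2)]

def scale(c, m):
    """Multiply every entry of a 2x2 matrix by the scalar c."""
    return [[c * m[i][j] for j in range(2)] for i in range(2)]

def is_diagonal(m):
    """True when both off-diagonal entries are zero."""
    return m[0][1] == 0 and m[1][0] == 0

A = diag(1, 0)
B = diag(0, 1)

# A linear combination of whole matrices (not of their columns).
combo = add(scale(3, A), scale(-2, B))

print(flatten(combo))      # the four entries, read as one vector
print(is_diagonal(combo))  # the combination is still diagonal
```

Here the linear combinations are taken of the matrices themselves, so the "vectors" are the matrices. Any diagonal matrix is fixed by its two diagonal entries, and the two off-diagonal slots of the flattened vector are always zero.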
