TL;DR Summary: There has been a fundamental improvement in voice recognition over the past couple of decades. What was the key to these breakthroughs?

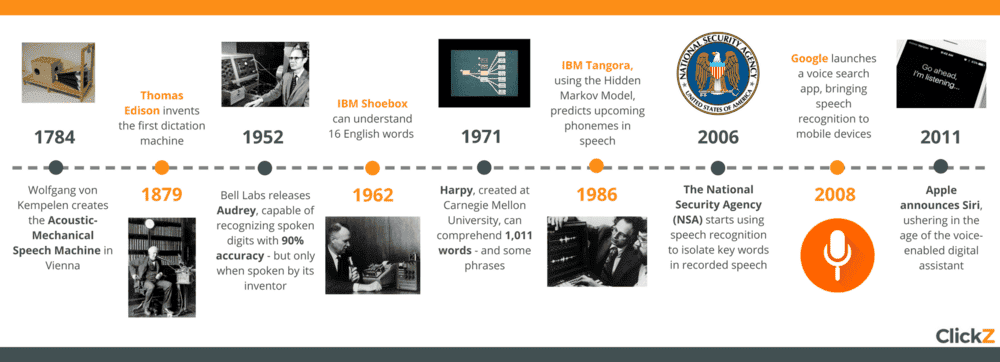

I remember that about 20 years ago, a colleague had to start using the Dragon voice recognition software on his EE design PC because he had developed severe carpal tunnel pain, and he had to train the software to recognize his voice and a limited set of phrases. That was the state of the art not too long ago.

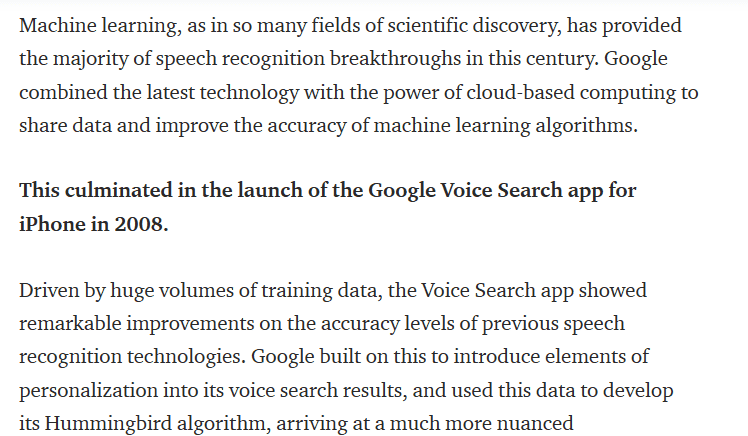

But now, voice recognition software has advanced to the point where multiple speakers can talk at normal speed, and devices and assistants like cellphones, Alexa, and Siri usually get the interpretation right. What led to this huge step? I remember studying voice recognition algorithms back in the early Dragon days and marvelling at how complex tasks like phoneme recognition were. Were the big advances due mostly to increased computing power, or to some other adaptive learning techniques?

This article seems to address part of my question, but I'm still not understanding the fundamental leap that got us from Dragon to Alexa... Thanks for your insights.
