I figured out the problem. I'm going to write the resolution for the benefits of many others who have the same confusion as me and for my own future reference too.

Happiness said:

They did not show how the theorem (5.49) or (4.198) could be applied to a linear combination of eigenfunctions. One eigenfunction one at a time, yes. But otherwise, no. I don't see how. And I made clear where my confusion is: ##\lambda_1\psi_1+\lambda_2\psi_2\neq k(\psi_1+\psi_2)##, for any constant ##k##.

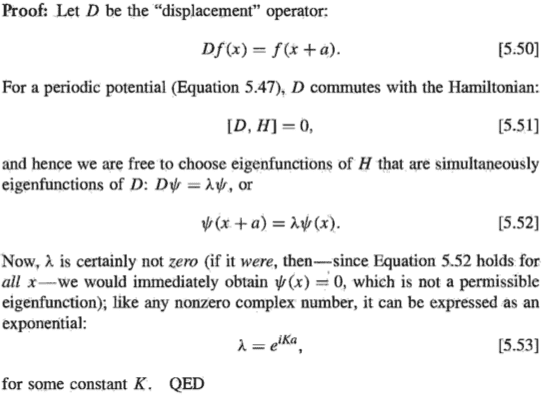

This is the reason for confusion. Suppose ##\psi_1## and ##\psi_2## are separately a solution to the Schrondinger equation [5.48]. Then any linear combination ##\psi=c_1\psi_1+c_2\psi_2## will also be a solution to the Schrondinger equation [5.48].

Then by Bloch's theorem, ##\psi=c_1\psi_1+c_2\psi_2## will also satisfy [5.49]: $$\psi(x+a)=e^{iKa}\psi(x)$$ But in general, ##\psi_1## and ##\psi_2## may have different ##K##. In other words, ##\psi_1(x+a)=e^{iK_1a}\psi_1## and ##\psi_2(x+a)=e^{iK_2a}\psi_2##, but ##K_1\neq K_2## (mod ##\frac{2\pi}{a}##). Then, there won't be a common factor to factorise. Instead, we will end up with $$\psi(x+a)=e^{iK_1a}c_1\psi_1(x)+e^{iK_2a}c_2\psi_2(x)\neq e^{iKa}\psi(x)$$

As a result, [5.49] cannot be satisfied, contradicting Bloch's theorem.

So it seems that Bloch's theorem only works for ##\psi_1## alone and ##\psi_2## alone but not a linear combination of them. This has been the main message in all my replies, so far.

And it turns out I was right in this all along. Bloch's theorem indeed doesn't work for any arbitrary linear combination: it works for some linear combinations but not for all linear combinations.

You may revisit Griffiths' words again:

You could blame it on his phrasing. What Bloch's theorem is saying is that

there exist some solutions to the Schrondinger equation [5.48] that satisfy the condition [5.49]. It is not saying all solutions to the Schrondinger equation [5.48] will satisfy the condition [5.49].

I have spent a lot of time thinking about the resolution to this problem, only to realize that I had the wrong interpretation of Bloch's theorem. I wish Griffiths could have phrased this better, like how the mathematicians do it clearly, with key words like "for every", "there exists one", etc. This would have communicated the right idea across to the readers the first time, and avoid ambiguities and confusion and wasting time trying to figure out what went wrong.

It's also important to introduce the notations ##\psi_{\lambda_1}## and ##\psi_{\lambda_2}##, and differentiate them with ##\psi_1## and ##\psi_2##, because usually ##\psi_1## and ##\psi_2## are reserved to denote orthonormal eigenfunctions of H. One question you may be pondering is "why do B&J express ##\psi## only as a sum of 2 linearly independent solutions (4.186), and why not as a sum of 3 or more terms?" Certainly, H generally have more than 2 orthonormal eigenfunctions. So why stop at 2?

It's because we are solving the Schrodinger equation at a certain value of E. And for a fixed value of E, the solution space is spanned by at most two linearly independent functions, since the Schrodinger equation is a second-order differential equation and would hence introduce two constants to the general solution. These two constants can be determined from ##\psi(x, 0)## and ##\frac{\partial\psi}{\partial t}(x, 0)##, giving us the solution to a particular scenario.

But why must we fix the value of E? It's because the K in Bloch's condition [5.49] may depend on E. So to make K truly constant, we should fix the value of E. (Of course, one needs to spend some time thinking over it to understand it for himself.)

Going back to the notations ##\psi_{\lambda_1}## and ##\psi_{\lambda_2}##. They are the eigenfunctions of D, with eigenvalues ##\lambda_1## and ##\lambda_2## respectively.

When you look at Griffiths' proof:

You may think that since D and H commute, an eigenfunction of H, such as ##\psi_1##, is also an eigenfunction of D. But this is not true. Commutativity only tells us that there exist functions that are eigenfunctions of both D and H. So if ##\psi_1## are ##\psi_2## linearly independent eigenfunctions of the same energy, then ##\psi_{\lambda_1}=c_1\psi_1+c_2\psi_2##, for some ##c_1## and ##c_2##. But in general, ##\psi_{\lambda_1}\neq\psi_1##. Similarly, ##\psi_{\lambda_2}=c_1'\psi_1+c_2'\psi_2##, for some ##c_1'## and ##c_2'##.

Take a look at the example following Griffiths' proof:

Do you see why in [5.60] there is a common factor ##e^{-iKa}## even though I just said Bloch's theorem only works for one eigenfunction one at a time? In other words, could you explain why ##\sin k(x+a)## and ##\cos k(x+a)## have the same factor ##e^{-iKa}##? Why not ##e^{-iK_1a}## for ##\sin k(x+a)## and ##e^{-iK_2a}## for ##\cos k(x+a)##? How do we tell in what situation we should use the same ##K## and in what situation we should use ##K_1## and ##K_2##, like B&J's (4.196) below?

Happiness said:

The key is to understand that we apply Bloch's theorem to an eigenfunction of D, not of H. In other words, ##\psi(x+a)=e^{iKa}\psi_{\lambda_1}## but ##\psi(x+a)\neq e^{iKa}\psi_1##. In the above example, ##\sin(kx)## and ##\cos(kx)## are eigenfunctions of H, ie., ##\psi_1=\sin(kx)## and ##\psi_2=\cos(kx)##. But since ##\sin(kx)## and ##\cos(kx)## are eigenfunctions of the same energy, ##A\sin(kx)+B\cos(kx)## is also an eigenfunction of the same energy. Then, since D and H commute, out of all the eigenfunctions of H, one of them must also be an eigenfunction of D. In other words, there exist some values of ##A## and ##B## such that ##A\sin(kx)+B\cos(kx)=\psi_{\lambda_1}##. And this is [5.59] (see above), ##\psi(x)## being an eigenfunction of D, and ##A## and ##B## being certain values, not any arbitrary values. This is quite subtle, and requires some careful thought. [5.59] is presented by the book as the general solution. But when it is used with Bloch's theorem to form [5.60], we are searching from the pool of the general solution, a particular solution that is an eigenfunction of D, with A and B taking on certain values. Hence, after displacing to the left by ##a##, we have ##\psi_{\lambda_1}(x-a)=e^{-iK_1a}\psi_{\lambda_1}(x)=e^{-iK_1a}[A\sin(kx)+B\cos(kx)]##, which is [5.60] after substituting ##x## by ##(x+a)##.