- #1

shinobi20

- TL;DR Summary

- I want to clarify some points about how the contravariant and covariant components of a tensor transform, after reading many articles and posts here.

I have read many GR books and many posts on this topic, but I still feel the need to clarify a few things.

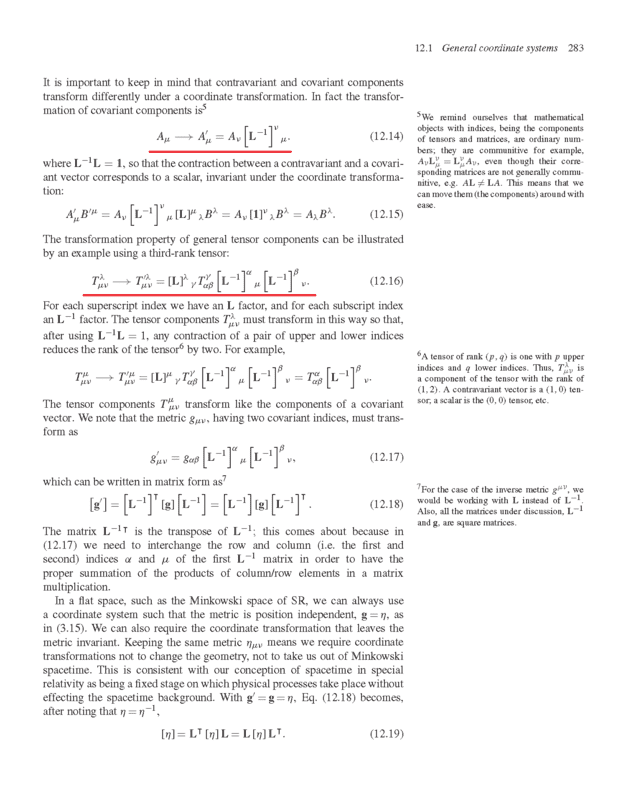

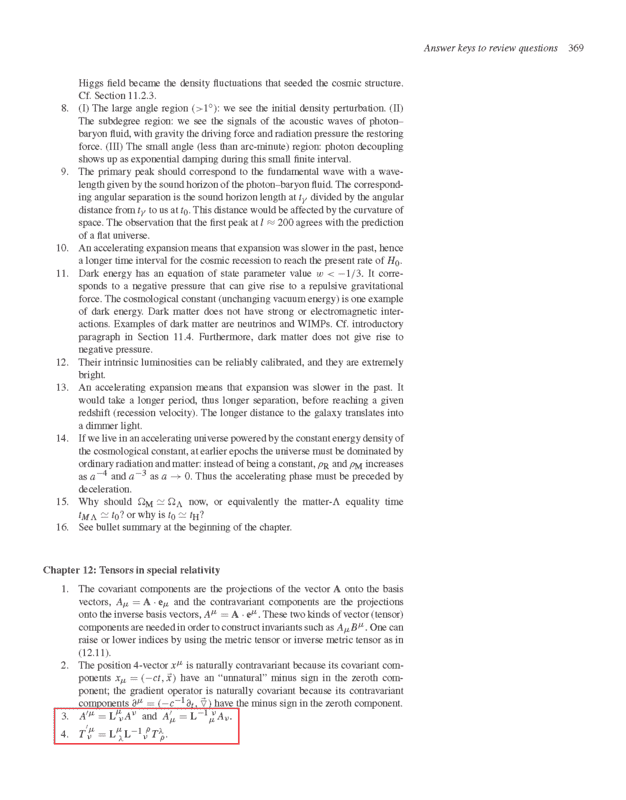

Based on my understanding, the contravariant component of a vector transforms as,

##A'^\mu = [L]^\mu~ _\nu A^\nu##

the covariant component of a vector transforms as,

##A'_\mu = [L^{-1}]^\nu~ _\mu A_\nu = [L]_\mu~^\nu A_\nu##

Note that ##~[L^{-1}]^\nu~_\mu = g^{\nu \alpha} g_{\mu \beta} [L]^\beta~_\alpha = [L]_\mu~^\nu##.
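As a sanity check on this identity, here is a minimal numerical sketch in Python with NumPy. It assumes the metric signature ##(+,-,-,-)## and uses a boost along x composed with a rotation about z as the test transformation. The helper names `boost_x` and `rotation_z` and the numerical values are illustrative choices of mine, not something taken from a textbook.

```python
import numpy as np

def boost_x(beta):
    """Lorentz boost along x with velocity beta (units of c); rows = upper index, cols = lower index."""
    gamma = 1.0 / np.sqrt(1.0 - beta**2)
    L = np.eye(4)
    L[0, 0] = L[1, 1] = gamma
    L[0, 1] = L[1, 0] = -gamma * beta
    return L

def rotation_z(theta):
    """Spatial rotation about z by angle theta, embedded in a 4x4 matrix."""
    c, s = np.cos(theta), np.sin(theta)
    R = np.eye(4)
    R[1, 1], R[1, 2] = c, -s
    R[2, 1], R[2, 2] = s, c
    return R

# Assumed metric signature (+,-,-,-); g holds g_{mu nu}, g_inv holds g^{mu nu}.
g = np.diag([1.0, -1.0, -1.0, -1.0])
g_inv = np.linalg.inv(g)

# A non-symmetric test transformation, stored as L[mu, nu] = [L]^mu_nu.
L = boost_x(0.6) @ rotation_z(0.3)
L_inv = np.linalg.inv(L)          # L_inv[nu, mu] = [L^{-1}]^nu_mu

# Lower the first index and raise the second:
# L_lower_upper[mu, nu] = g_{mu beta} [L]^beta_alpha g^{alpha nu} = [L]_mu^nu
L_lower_upper = g @ L @ g_inv

# The identity [L^{-1}]^nu_mu = [L]_mu^nu means the two arrays are transposes of each other.
identity_holds = np.allclose(L_inv.T, L_lower_upper)

# Transform covariant components two ways and compare.
A_upper = np.array([2.0, 1.0, -0.5, 3.0])       # arbitrary A^nu
A_lower = g @ A_upper                            # A_nu
A_lower_primed_direct = g @ (L @ A_upper)        # lower the index of A'^mu
A_lower_primed_via_L = L_lower_upper @ A_lower   # A'_mu = [L]_mu^nu A_nu
components_agree = np.allclose(A_lower_primed_direct, A_lower_primed_via_L)

# The contraction A^mu A_mu should be unchanged by the transformation.
norm_invariant = np.isclose(A_upper @ A_lower, (L @ A_upper) @ A_lower_primed_via_L)

print(identity_holds, components_agree, norm_invariant)
```

The transpose in `L_inv.T` is where the index order matters: as arrays, ##[L^{-1}]^\nu~_\mu## has ##\nu## as its row index while ##[L]_\mu~^\nu## has ##\mu## as its row index, so the two agree component by component but not as matrices laid out in the same row/column order.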

Based on this, my impression is that every LT and inverse LT matrix should be written with the index placement ##^\mu~ _\nu##, and that the placement ##_\mu~ ^\nu## appears only when you rewrite the inverse LT in terms of the LT itself.

This is what I thought until I read Cheng's GR book.

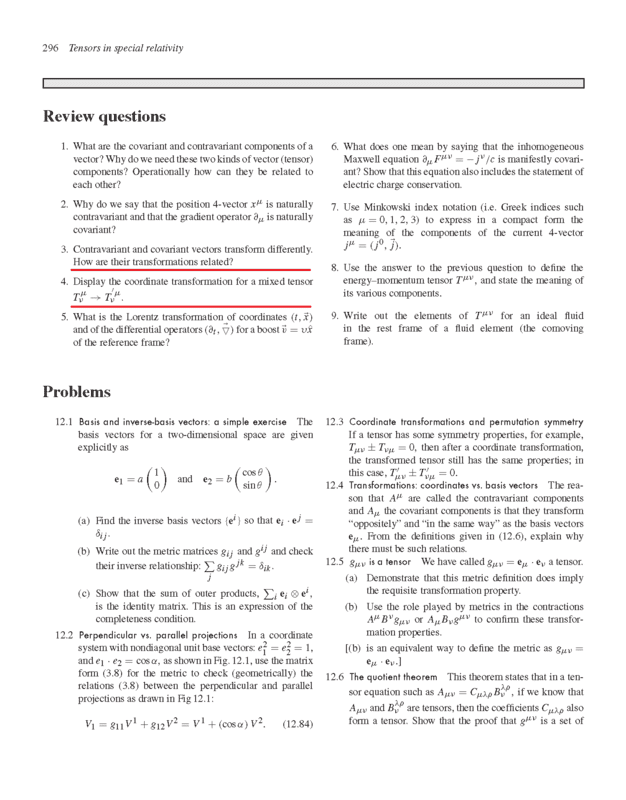

In review questions 3 and 4, why does he write the covariant transformation with the index placement ##_\mu~ ^\nu##?
