Cloruro de potasio

- TL;DR: Is it possible that the trapezoid method converges faster than Simpson's method?

Good morning,

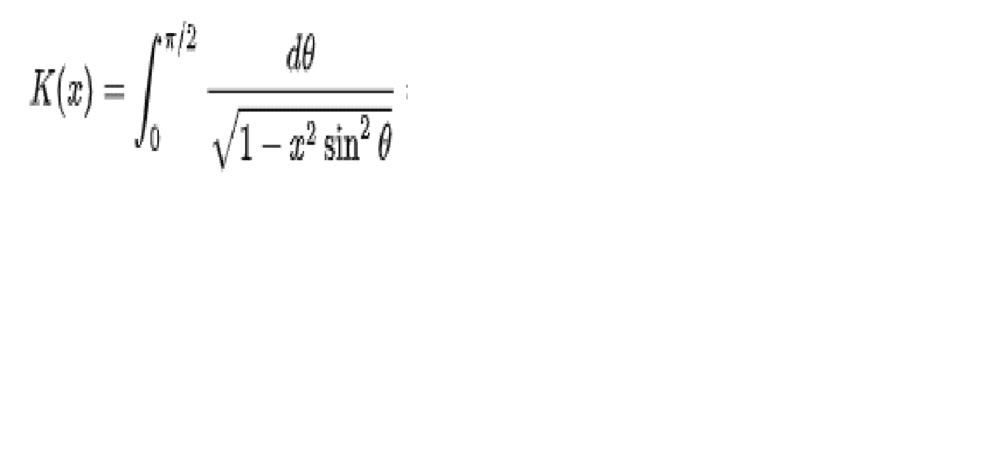

I have been working on programming exercises in C++, and in a numerical integration exercise the trapezoid method converges faster than Simpson's method. The function to be integrated is an elliptic integral of the first kind, of the form:

Here k lies in the interval [0, 1). I have been thinking about why this happens, and one hypothesis I have is that both the second derivative (which determines the error bound of the trapezoid method) and the fourth derivative (which determines the error bound of Simpson's method) diverge at π/2, so the maximum error cannot be bounded.
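For reference, these are the standard composite error estimates I have in mind, written for an interval $[a, b]$ split into $n$ subintervals of width $h = (b - a)/n$ ($n$ even for Simpson). The symbols $\xi$ and $\eta$ are just the usual unknown intermediate points in $[a, b]$, and the estimates only hold when the corresponding derivative is continuous on the whole interval:

$$
E_{\text{trap}} = -\frac{(b - a)\,h^{2}}{12}\, f''(\xi),
\qquad
E_{\text{Simpson}} = -\frac{(b - a)\,h^{4}}{180}\, f^{(4)}(\eta).
$$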

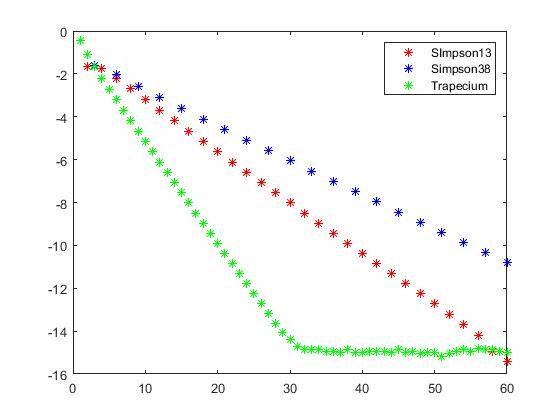

This is the graph I get for the base-10 logarithm of the error versus the number of intervals used:

Does anyone know why this happens?
