Homework Help Overview

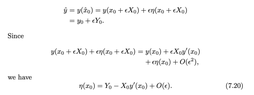

The discussion revolves around Taylor's Theorem and the use of big-O notation, specifically why an error term is written as +O(ε) rather than -O(ε). Participants explore what this notation says about the size of the error as ε approaches zero.
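The equation from the thread is not reproduced here, so the following is only a sketch using a hypothetical first-order example. It shows why the sign in front of O(ε) carries no information under the usual definition: the definition bounds only the absolute value of the error. The assumption that |f'| ≤ M near x is added for the illustration.

```latex
% Definition (as \varepsilon \to 0):
h(\varepsilon) = O(\varepsilon)
  \iff \exists\, C>0,\ \delta>0:\ |h(\varepsilon)| \le C\,|\varepsilon|
  \text{ whenever } 0<|\varepsilon|<\delta .

% The bound involves only |h|, and |-h(\varepsilon)| = |h(\varepsilon)|, so
h(\varepsilon) = O(\varepsilon) \iff -h(\varepsilon) = O(\varepsilon).

% Illustration (assumes f is differentiable with |f'| \le M near x).
% By the mean value theorem, for some \xi between x and x+\varepsilon:
f(x+\varepsilon) = f(x) + \varepsilon f'(\xi),
\qquad |\varepsilon f'(\xi)| \le M\,|\varepsilon| ,
% hence
f(x+\varepsilon) = f(x) + O(\varepsilon),
% and writing f(x) - O(\varepsilon) would assert exactly the same thing.
```

Writing "+" is a convention: "+ O(ε)" reads as "plus some error term whose magnitude is at most a constant times |ε|", and that error term may itself be negative.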

Discussion Character

- Conceptual clarification, Mathematical reasoning

Approaches and Questions Raised

- The original poster tries to understand how the equation is derived and why it uses +O(ε). Other participants explain the conventions of O-notation and discuss whether writing a positive or a negative sign in front of the term makes any difference.
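To make the sign question concrete, here is a minimal numerical sketch. The example is an assumption chosen for illustration and is not taken from the thread: cos(ε) = 1 + O(ε²), whose remainder cos(ε) - 1 is negative for small ε even though it is conventionally written with a plus sign. The helper `remainder_ratios` is hypothetical. It checks that |remainder| / |ε|^order stays bounded as ε shrinks, which is what the O-statement claims.

```python
import math


def remainder_ratios(f, approx, order, eps_values):
    """Return (eps, remainder, |remainder| / |eps|**order) for each eps.

    A ratio that stays bounded as eps -> 0 is consistent with
    remainder = O(eps**order); the sign of the remainder plays no role.
    """
    rows = []
    for eps in eps_values:
        r = f(eps) - approx(eps)  # signed Taylor remainder
        rows.append((eps, r, abs(r) / abs(eps) ** order))
    return rows


# Hypothetical example: cos(eps) = 1 + O(eps**2). The remainder is negative,
# yet the bound |R| <= C * eps**2 (with C = 1/2) holds, so "+ O(eps**2)" is correct.
# eps is kept >= 1e-4 to avoid catastrophic cancellation in cos(eps) - 1.
eps_values = [10.0 ** (-k) for k in range(1, 5)]
for eps, r, ratio in remainder_ratios(math.cos, lambda e: 1.0, 2, eps_values):
    print(f"eps={eps:.0e}  remainder={r:+.3e}  |R|/eps^2={ratio:.4f}")
```

Every printed remainder is negative while the ratio stays near 1/2. This is why "+ O(ε²)" and "- O(ε²)" describe the same set of functions.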

Discussion Status

Participants are actively engaging with the concepts, explaining what O-notation means and how it is conventionally used. The focus is on clarifying the mathematical reasoning behind the notation; no explicit consensus has been reached yet.

Contextual Notes

Some participants are unfamiliar with the referenced text, which points to possible gaps in shared understanding or terminology.