Alien8

Bell's 1971 derivation

The following is based on page 37 of Bell's Speakable and Unspeakable (Bell, 1971), the main change being to use the symbol ‘E’ instead of ‘P’ for the expected value of the quantum correlation. This avoids any implication that the quantum correlation is itself a probability.

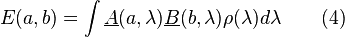

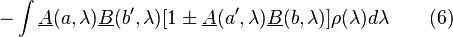

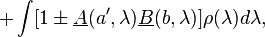

We start with the standard assumption of independence of the two sides, enabling us to obtain the joint probabilities of pairs of outcomes by multiplying the separate probabilities, for any selected value of the "hidden variable" λ. λ is assumed to be drawn from a fixed distribution of possible states of the source, the probability of the source being in the state λ for any particular trial being given by the density function ρ(λ), the integral of which over the complete hidden variable space is 1. We thus assume we can write:

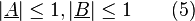

where A and B are the average values of the outcomes. Since the possible values of A and B are −1, 0 and +1, it follows that:

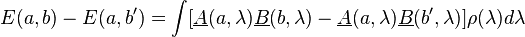
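The step from independence to the integral expression for E(a,b) can be illustrated with a small discrete example. The sketch below assumes a hypothetical model in which λ takes three values and each side has made-up probabilities for the outcomes −1, 0 and +1; none of these numbers come from Bell. For each λ it builds joint probabilities by multiplying the one-sided ones, checks that the resulting correlation equals the product of the two average values, checks that each average lies in [−1, 1], and then forms E(a,b) as a ρ-weighted sum, a discrete stand-in for the integral.

```python
from fractions import Fraction as F
from itertools import product

# Hypothetical toy model, assumed only for illustration: a hidden variable lam
# with three discrete values and weights rho(lam) that sum to 1.
rho = {0: F(1, 2), 1: F(1, 3), 2: F(1, 6)}
assert sum(rho.values()) == 1

# Hypothetical outcome probabilities for one setting on each side:
# pA[lam][x] = P(A = x | a, lam) and pB[lam][y] = P(B = y | b, lam).
pA = {0: {-1: F(1, 4), 0: F(1, 4), 1: F(1, 2)},
      1: {-1: F(1), 0: F(0), 1: F(0)},
      2: {-1: F(1, 3), 0: F(1, 3), 1: F(1, 3)}}
pB = {0: {-1: F(0), 0: F(1, 2), 1: F(1, 2)},
      1: {-1: F(1, 5), 0: F(1, 5), 1: F(3, 5)},
      2: {-1: F(1, 2), 0: F(0), 1: F(1, 2)}}

def average(p):
    """Average outcome on one side at fixed lam: the sum of x * P(x)."""
    return sum(x * px for x, px in p.items())

def correlation_given_lam(lam):
    """Expectation of the product A*B at fixed lam, using joint
    probabilities obtained by multiplying the one-sided probabilities."""
    return sum(x * y * pA[lam][x] * pB[lam][y]
               for x, y in product((-1, 0, 1), repeat=2))

for lam in rho:
    # Independence at fixed lam: the correlation factorizes into averages.
    assert correlation_given_lam(lam) == average(pA[lam]) * average(pB[lam])
    # Each average lies in [-1, 1], as stated in (5).
    assert abs(average(pA[lam])) <= 1 and abs(average(pB[lam])) <= 1

# E(a,b) as a rho-weighted sum over lam instead of an integral.
E_ab = sum(average(pA[lam]) * average(pB[lam]) * rho[lam] for lam in rho)
print(E_ab)  # -17/240 for these made-up numbers
```

Exact fractions are used so that the factorization check is an equality rather than a floating-point approximation.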

Then, if a, a′, b and b′ are alternative settings for the detectors,

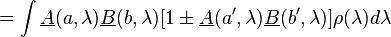

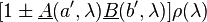

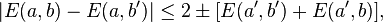
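The algebra in (6) only adds and subtracts one quantity: A(a,λ)B(b,λ)A(a′,λ)B(b′,λ) and A(a,λ)B(b′,λ)A(a′,λ)B(b,λ) contain the same four factors, so the ± and ∓ terms cancel, and the last line is a regrouping of the middle one. A minimal sketch that checks this identity between integrands at a single fixed λ, for both sign choices, over an assumed grid of exact values in [−1, 1] (the grid is only a test set, not part of the derivation):

```python
from fractions import Fraction as F
from itertools import product

# Test values at one fixed lambda: -1, -1/2, 0, 1/2, 1 (an arbitrary grid).
grid = [F(k, 2) for k in range(-2, 3)]

def lhs(A1, B1, B2):
    """Integrand of the first line of (6): A(a)B(b) - A(a)B(b')."""
    return A1 * B1 - A1 * B2

def rhs(A1, A2, B1, B2, s):
    """Integrand of the last line of (6); s = +1 or -1 picks the sign."""
    return A1 * B1 * (1 + s * A2 * B2) - A1 * B2 * (1 + s * A2 * B1)

count = 0
# A1 = A(a), A2 = A(a'), B1 = B(b), B2 = B(b')
for A1, A2, B1, B2 in product(grid, repeat=4):
    for s in (1, -1):
        assert lhs(A1, B1, B2) == rhs(A1, A2, B1, B2, s)
        count += 1
print(count, "cases checked")  # 1250 cases checked
```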

Then, applying the triangle inequality to both sides, using (5) and the fact that

as well as

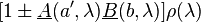

as well as

are non-negative we obtain

are non-negative we obtain

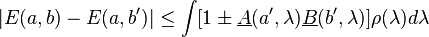

or, using the fact that the integral of ρ(λ) is 1,

$$|E(a,b) - E(a,b')| \le 2 \pm \left[E(a',b') + E(a',b)\right] \qquad (7)$$

which includes the CHSH inequality.
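As a rough numerical cross-check of the end result, the sketch below first verifies the pointwise counterpart of the final inequality, |AB − AB′| ≤ 2 ± (A′B′ + A′B), for every assignment of A = A(a,λ), A′ = A(a′,λ), B = B(b,λ), B′ = B(b′,λ) in {−1, 0, +1}. It then builds hypothetical local models (a discrete λ with random weights and random average values on a grid of quarters in [−1, 1], all assumptions made only for the test) and checks |E(a,b) − E(a,b′)| ≤ 2 ± [E(a′,b′) + E(a′,b)] for both signs, together with |S| ≤ 2 for the CHSH combination S = E(a,b) − E(a,b′) + E(a′,b) + E(a′,b′).

```python
import random
from fractions import Fraction as F
from itertools import product

# 1) Pointwise counterpart of the final inequality for outcome values in
#    {-1, 0, +1}: A1 = A(a), A2 = A(a'), B1 = B(b), B2 = B(b'); s = +1 or -1.
for A1, A2, B1, B2 in product((-1, 0, 1), repeat=4):
    for s in (1, -1):
        assert abs(A1 * B1 - A1 * B2) <= 2 + s * (A2 * B2 + A2 * B1)

def random_averages(rng, n):
    """Hypothetical average outcomes in [-1, 1], on a grid of quarters."""
    return [F(rng.randint(-4, 4), 4) for _ in range(n)]

def random_model(rng, n=5):
    """Hypothetical local model: n values of lambda with random weights rho."""
    weights = [F(rng.randint(1, 10)) for _ in range(n)]
    total = sum(weights)
    rho = [w / total for w in weights]
    A = {"a": random_averages(rng, n), "a'": random_averages(rng, n)}
    B = {"b": random_averages(rng, n), "b'": random_averages(rng, n)}
    return rho, A, B

def E(rho, A, B, x, y):
    """Discrete stand-in for E(x, y): sum of A(x, lam) B(y, lam) rho(lam)."""
    return sum(A[x][i] * B[y][i] * rho[i] for i in range(len(rho)))

# 2) Check the final inequality and the CHSH bound on many random models.
rng = random.Random(0)  # fixed seed so the run is repeatable
worst = F(0)
for _ in range(1000):
    rho, A, B = random_model(rng)
    ab, ab2 = E(rho, A, B, "a", "b"), E(rho, A, B, "a", "b'")
    a2b, a2b2 = E(rho, A, B, "a'", "b"), E(rho, A, B, "a'", "b'")
    for s in (1, -1):
        # |E(a,b) - E(a,b')| <= 2 +/- [E(a',b') + E(a',b)]
        assert abs(ab - ab2) <= 2 + s * (a2b2 + a2b)
    S = ab - ab2 + a2b + a2b2  # CHSH combination
    assert abs(S) <= 2
    worst = max(worst, abs(S))
print("largest |S| seen:", worst)
```

Taking both signs of the final inequality gives |E(a,b) − E(a,b′)| + |E(a′,b′) + E(a′,b)| ≤ 2, which is why the check on S can never fail for these models; a random search like this illustrates the bound but does not prove it.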

--- END QUOTE

http://en.wikipedia.org/wiki/CHSH_inequality#Derivation_of_the_CHSH_inequality

1. "We start with the standard assumption of independence of the two sides, enabling us to obtain the joint probabilities of pairs of outcomes by multiplying the separate probabilities..."

Obtain the joint probability of what particular event?

2. We see the premise from step (5) applied in step (6), which adds that $\pm 1$ into the equation, but without it, what is the underlying relation between the four expectation values that the equation describes? Would it be this: $E(a,b) - E(a,b') = E(a,b)\,E(a',b') - E(a,b')\,E(a',b)$?

3. In the step after (6), the triangle inequality is applied to both sides. What is the justification for this?

