aaddcc

Hi All,

I am taking Dynamic Systems and Controls this semester for Mechanical Engineering. We are currently solving nonhomogeneous state-space equations. This question is about a 2x2 state-space differential equation of the form:

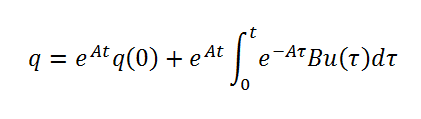

where A and B are matrices and u is an input (such as sin(t)). We have written the general solution as:

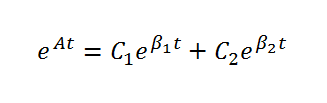
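As a concrete illustration of the general solution, here is a minimal pure-Python sketch. It assumes the state equation is $\dot{x} = Ax + Bu$ and that the general solution is the standard convolution form $x(t) = e^{At}x(0) + \int_0^t e^{A(t-\tau)}Bu(\tau)\,d\tau$. The matrices `A`, `B`, the initial state `x0` and the input $u(t) = \sin(t)$ are hypothetical example values. It evaluates $e^{At}$ with a truncated Taylor series and the integral with the trapezoidal rule, then cross-checks the result against a direct RK4 simulation of the same equation.

```python
import math

# Hypothetical example system (illustration only): xdot = A x + B u(t).
A = [[0.0, 1.0], [-2.0, -3.0]]  # eigenvalues -1 and -2
B = [0.0, 1.0]                  # single input enters the second state
x0 = [1.0, 0.0]                 # initial state x(0)
u = math.sin                    # input u(t) = sin(t)

def mat_vec(M, v):
    """2x2 matrix times length-2 vector."""
    return [M[0][0] * v[0] + M[0][1] * v[1], M[1][0] * v[0] + M[1][1] * v[1]]

def mat_mul(M, N):
    """Product of two 2x2 matrices."""
    return [[sum(M[i][k] * N[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

def expm(M, t, terms=40):
    """Approximate e^(Mt) by the truncated Taylor series sum_k (Mt)^k / k!."""
    result = [[1.0, 0.0], [0.0, 1.0]]
    term = [[1.0, 0.0], [0.0, 1.0]]
    for k in range(1, terms):
        term = [[x * t / k for x in row] for row in mat_mul(term, M)]
        result = [[result[i][j] + term[i][j] for j in range(2)] for i in range(2)]
    return result

def convolution_solution(t, n=1000):
    """x(t) = e^(At) x0 + integral_0^t e^(A(t-tau)) B u(tau) dtau, trapezoidal rule."""
    free = mat_vec(expm(A, t), x0)          # zero-input (homogeneous) part
    h = t / n
    forced = [0.0, 0.0]                     # zero-state (convolution) part
    for i in range(n + 1):
        tau = i * h
        weight = 0.5 if i in (0, n) else 1.0
        g = mat_vec(expm(A, t - tau), B)    # e^(A(t-tau)) B
        forced = [forced[j] + weight * h * g[j] * u(tau) for j in range(2)]
    return [free[j] + forced[j] for j in range(2)]

def rk4_solution(t, n=2000):
    """Integrate xdot = A x + B u(t) directly with classical RK4, as a cross-check."""
    def f(s, x):
        ax = mat_vec(A, x)
        return [ax[j] + B[j] * u(s) for j in range(2)]
    x = list(x0)
    h = t / n
    for i in range(n):
        s = i * h
        k1 = f(s, x)
        k2 = f(s + h / 2, [x[j] + h / 2 * k1[j] for j in range(2)])
        k3 = f(s + h / 2, [x[j] + h / 2 * k2[j] for j in range(2)])
        k4 = f(s + h, [x[j] + h * k3[j] for j in range(2)])
        x = [x[j] + h / 6 * (k1[j] + 2 * k2[j] + 2 * k3[j] + k4[j]) for j in range(2)]
    return x

x_conv = convolution_solution(2.0)
x_rk4 = rk4_solution(2.0)
print("convolution formula:", x_conv)
print("direct RK4:         ", x_rk4)
```

The two results should agree to within the trapezoidal-rule error. The Taylor series is only a convenient stand-in for a proper matrix-exponential routine and is adequate for the short time horizon used here.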

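The situation I am wondering about is when e^At contains only real exponentials (we normally solve for it with the Laplace transform, so no sines or cosines show up), which means it has the form:

$$e^{At} = C_1 e^{\lambda_1 t} + C_2 e^{\lambda_2 t}$$

where C1 and C2 are constant matrices and λ1 ≠ λ2 are real.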
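For reference, this is how the two-term form $e^{At} = C_1 e^{\lambda_1 t} + C_2 e^{\lambda_2 t}$ falls out of the Laplace-transform route for a 2x2 $A$, assuming its eigenvalues $\lambda_1 \ne \lambda_2$ are real. Partial fractions on $(sI - A)^{-1}$ identify $C_1$ and $C_2$ as residues (using the 2x2 identity $\operatorname{adj}(M) = \operatorname{tr}(M)\,I - M$ and $\operatorname{tr}(A) = \lambda_1 + \lambda_2$):

$$
(sI - A)^{-1} = \frac{\operatorname{adj}(sI - A)}{(s - \lambda_1)(s - \lambda_2)} = \frac{A + (s - \lambda_1 - \lambda_2)\,I}{(s - \lambda_1)(s - \lambda_2)} = \frac{C_1}{s - \lambda_1} + \frac{C_2}{s - \lambda_2}
$$

$$
C_1 = \frac{A - \lambda_2 I}{\lambda_1 - \lambda_2}, \qquad C_2 = \frac{A - \lambda_1 I}{\lambda_2 - \lambda_1}, \qquad e^{At} = \mathcal{L}^{-1}\{(sI - A)^{-1}\} = C_1 e^{\lambda_1 t} + C_2 e^{\lambda_2 t}
$$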

Our professor agrees that C1*C2 = 0 always holds for this form, which simplifies the convolution integral significantly. Additionally, in the homework and lecture notes I noticed that C1*C1 = C1 and C2*C2 = C2. I asked the professor whether this is always true, and he said he wasn't sure it always is, but that it held for the examples we did. What do you all think: is it always true? If so, it simplifies the convolution integral even further. I am an engineer, not a mathematician, so my memory of DiffEq and proofs isn't great, but I thought this was an interesting question.
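A quick numerical sanity check of these identities, as a minimal pure-Python sketch. It assumes A is 2x2 with distinct real eigenvalues, builds C1 and C2 from the partial-fraction residues of $(sI - A)^{-1}$, namely $C_1 = (A - \lambda_2 I)/(\lambda_1 - \lambda_2)$ and $C_2 = (A - \lambda_1 I)/(\lambda_2 - \lambda_1)$, confirms that $C_1 e^{\lambda_1 t} + C_2 e^{\lambda_2 t}$ matches $e^{At}$, and then tests C1*C2 = 0, C1*C1 = C1, C2*C2 = C2 and C1 + C2 = I. The matrix `A` is a hypothetical example, and a check on one matrix is not a proof.

```python
import math

# Hypothetical example matrix (not from the course): a real 2x2 A whose
# eigenvalues are real and distinct, so e^(At) = C1 e^(lam1 t) + C2 e^(lam2 t).
A = [[1.0, 2.0], [3.0, -4.0]]
I = [[1.0, 0.0], [0.0, 1.0]]
Z = [[0.0, 0.0], [0.0, 0.0]]

def mat_mul(M, N):
    """Product of two 2x2 matrices stored as nested lists."""
    return [[sum(M[i][k] * N[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

def close(M, N, tol=1e-9):
    """Entrywise comparison with an absolute tolerance."""
    return all(abs(M[i][j] - N[i][j]) < tol for i in range(2) for j in range(2))

def expm_taylor(M, t, terms=60):
    """Reference value of e^(Mt) from a truncated Taylor series."""
    result = [row[:] for row in I]
    term = [row[:] for row in I]
    for k in range(1, terms):
        term = [[x * t / k for x in row] for row in mat_mul(term, M)]
        result = [[result[i][j] + term[i][j] for j in range(2)] for i in range(2)]
    return result

# Eigenvalues from the characteristic polynomial s^2 - tr(A) s + det(A) = 0.
tr = A[0][0] + A[1][1]
det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
disc = tr * tr - 4.0 * det
assert disc > 0, "this sketch only covers distinct real eigenvalues"
lam1 = (tr + math.sqrt(disc)) / 2.0
lam2 = (tr - math.sqrt(disc)) / 2.0

# Partial-fraction residues of (sI - A)^(-1) at s = lam1 and s = lam2.
C1 = [[(A[i][j] - lam2 * I[i][j]) / (lam1 - lam2) for j in range(2)] for i in range(2)]
C2 = [[(A[i][j] - lam1 * I[i][j]) / (lam2 - lam1) for j in range(2)] for i in range(2)]

# Confirm the two-term form reproduces e^(At) at a sample time.
t = 0.5
two_term = [[C1[i][j] * math.exp(lam1 * t) + C2[i][j] * math.exp(lam2 * t)
             for j in range(2)] for i in range(2)]
print("form matches e^(At):", close(two_term, expm_taylor(A, t)))
print("C1*C2 = 0:  ", close(mat_mul(C1, C2), Z))
print("C1*C1 = C1: ", close(mat_mul(C1, C1), C1))
print("C2*C2 = C2: ", close(mat_mul(C2, C2), C2))
print("C1 + C2 = I:", close([[C1[i][j] + C2[i][j] for j in range(2)] for i in range(2)], I))
```

When the two-term form holds, a short argument backs up what the check shows: C1*C2 is a multiple of $(A - \lambda_2 I)(A - \lambda_1 I)$, which is zero by the Cayley–Hamilton theorem, and setting $t = 0$ gives $C_1 + C_2 = I$, so $C_1^2 = C_1(I - C_2) = C_1 - C_1 C_2 = C_1$ (and likewise for C2). A repeated eigenvalue is outside this sketch, since $e^{At}$ then picks up $t\,e^{\lambda t}$ terms.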