QuebecRb


I have two related questions about marginal pdfs:

i. Let X1 and X2 be independent gamma random variables with parameters (α1, β) and (α2, β) respectively. How do I find the marginal pdfs of Y1 and Y2 under the transformation Y1 = X1/(X1+X2), Y2 = X1+X2?

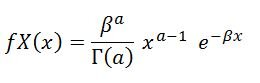

I am using the following form of the gamma pdf:

View attachment 3407

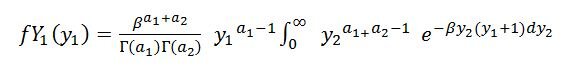

Having written the joint pdf and applied the Jacobian, I have reached the final stage, the integral expression for the marginal pdf of Y1:

View attachment 3408

but I cannot proceed any further to obtain the marginal pdf in closed form.

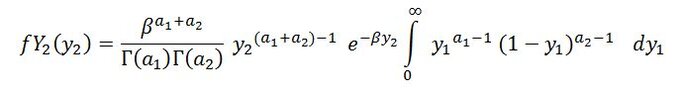
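For reference, here is a sketch of how the change of variables in (i) is usually carried through. It assumes the shape-scale form of the gamma pdf, which may differ from the attached formula; if the attachment uses the rate form, replace β by 1/β throughout. The symbols f and J are notation introduced here for illustration.

```latex
% Assumed density (shape-scale form): f(x) = x^{\alpha-1} e^{-x/\beta} / (\Gamma(\alpha)\beta^{\alpha}), x > 0
\begin{align*}
x_1 &= y_1 y_2, \qquad x_2 = y_2(1-y_1), \qquad 0<y_1<1,\; y_2>0, \\
|J| &= \left|\det\begin{pmatrix} y_2 & y_1 \\ -y_2 & 1-y_1 \end{pmatrix}\right| = y_2, \\
f_{Y_1,Y_2}(y_1,y_2)
  &= \frac{y_1^{\alpha_1-1}(1-y_1)^{\alpha_2-1}\, y_2^{\alpha_1+\alpha_2-1} e^{-y_2/\beta}}
          {\Gamma(\alpha_1)\Gamma(\alpha_2)\,\beta^{\alpha_1+\alpha_2}}, \\
f_{Y_1}(y_1)
  &= \frac{y_1^{\alpha_1-1}(1-y_1)^{\alpha_2-1}}{\Gamma(\alpha_1)\Gamma(\alpha_2)\,\beta^{\alpha_1+\alpha_2}}
     \int_0^\infty y_2^{\alpha_1+\alpha_2-1} e^{-y_2/\beta}\,dy_2
   = \frac{\Gamma(\alpha_1+\alpha_2)}{\Gamma(\alpha_1)\Gamma(\alpha_2)}\, y_1^{\alpha_1-1}(1-y_1)^{\alpha_2-1}, \\
f_{Y_2}(y_2)
  &= \frac{y_2^{\alpha_1+\alpha_2-1} e^{-y_2/\beta}}{\Gamma(\alpha_1)\Gamma(\alpha_2)\,\beta^{\alpha_1+\alpha_2}}
     \int_0^1 y_1^{\alpha_1-1}(1-y_1)^{\alpha_2-1}\,dy_1
   = \frac{y_2^{\alpha_1+\alpha_2-1} e^{-y_2/\beta}}{\Gamma(\alpha_1+\alpha_2)\,\beta^{\alpha_1+\alpha_2}}.
\end{align*}
```

The key step is recognising the remaining integral as a gamma integral (for Y1) or a beta integral (for Y2). Under this assumption Y1 would be Beta(α1, α2), Y2 would be Gamma(α1+α2, β), and the joint pdf factorises, so the two are independent.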

ii. Additionally, given the transformation Y1 = X1/X2, Y2 = X2, I have written the following expression for the marginal pdf of Y2:

View attachment 3409

How do I find this marginal pdf?
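A sketch of how the integral in (ii) might be evaluated, assuming X1 and X2 are the same independent gamma variables as in (i) and that the gamma pdf is in shape-scale form (both are assumptions, since the attached expressions are not reproduced here):

```latex
% Assumed density (shape-scale form): f(x) = x^{\alpha-1} e^{-x/\beta} / (\Gamma(\alpha)\beta^{\alpha}), x > 0
\begin{align*}
x_1 &= y_1 y_2, \qquad x_2 = y_2, \qquad |J| = y_2, \qquad y_1>0,\; y_2>0, \\
f_{Y_1,Y_2}(y_1,y_2)
  &= \frac{y_1^{\alpha_1-1}\, y_2^{\alpha_1+\alpha_2-1}\, e^{-y_2(1+y_1)/\beta}}
          {\Gamma(\alpha_1)\Gamma(\alpha_2)\,\beta^{\alpha_1+\alpha_2}}, \\
f_{Y_2}(y_2)
  &= \frac{y_2^{\alpha_1+\alpha_2-1} e^{-y_2/\beta}}{\Gamma(\alpha_1)\Gamma(\alpha_2)\,\beta^{\alpha_1+\alpha_2}}
     \int_0^\infty y_1^{\alpha_1-1} e^{-y_1 y_2/\beta}\,dy_1 \\
  &= \frac{y_2^{\alpha_1+\alpha_2-1} e^{-y_2/\beta}}{\Gamma(\alpha_1)\Gamma(\alpha_2)\,\beta^{\alpha_1+\alpha_2}}
     \cdot \Gamma(\alpha_1)\left(\frac{\beta}{y_2}\right)^{\alpha_1}
   = \frac{y_2^{\alpha_2-1} e^{-y_2/\beta}}{\Gamma(\alpha_2)\,\beta^{\alpha_2}}.
\end{align*}
```

The inner integral is a gamma integral in y1 with scale β/y2. The result is the Gamma(α2, β) pdf, which is a useful consistency check because Y2 = X2.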

Any enlightening answers would be appreciated.
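One way to sanity-check a candidate answer for (i) is a quick simulation. The sketch below assumes β is a scale parameter, which matches the second argument of Python's `random.gammavariate`; the function name `simulate` and the parameter values are chosen purely for illustration. It compares sample means of Y1 and Y2 with the means of Beta(α1, α2) and Gamma(α1+α2, β).

```python
import random
import statistics


def simulate(alpha1, alpha2, beta, n=100_000, seed=0):
    """Draw n samples of Y1 = X1/(X1+X2) and Y2 = X1+X2 for independent gammas."""
    rng = random.Random(seed)
    y1, y2 = [], []
    for _ in range(n):
        # gammavariate(shape, scale): beta is treated as a scale parameter (assumption)
        x1 = rng.gammavariate(alpha1, beta)
        x2 = rng.gammavariate(alpha2, beta)
        y1.append(x1 / (x1 + x2))
        y2.append(x1 + x2)
    return y1, y2


# Illustrative parameter values, not taken from the question
alpha1, alpha2, beta = 2.0, 3.0, 1.5
y1, y2 = simulate(alpha1, alpha2, beta)

mean_y1 = statistics.fmean(y1)
mean_y2 = statistics.fmean(y2)

# Theoretical means if Y1 ~ Beta(alpha1, alpha2) and Y2 ~ Gamma(alpha1 + alpha2, beta)
expected_y1 = alpha1 / (alpha1 + alpha2)
expected_y2 = (alpha1 + alpha2) * beta

print(f"mean of Y1: {mean_y1:.4f} (expected {expected_y1:.4f})")
print(f"mean of Y2: {mean_y2:.4f} (expected {expected_y2:.4f})")
```

Agreement of the means does not prove the distributional form; comparing histograms or higher moments against the candidate pdfs would give a stronger check.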
