FrankJ777

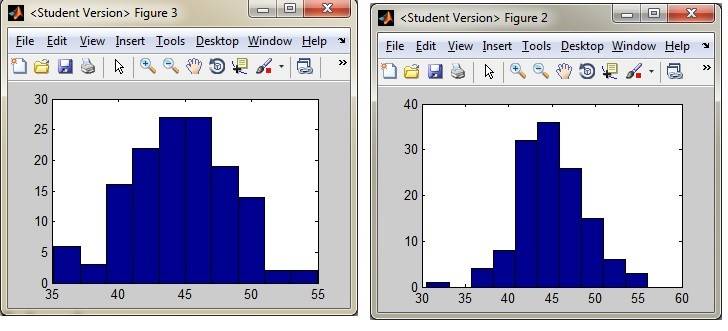

I'm trying to replicate a machine learning experiment from a paper. The experiment uses several signal generators and "trains" a system to recognize the output of each one. The way this is done is by sampling the output of each generator and then building a histogram from each trial. Later you are supposed to be able to sample a generator at random and use maximum likelihood to determine which generator the output came from. This is done by comparing the samples to the histograms to get the probability of each sample, and then multiplying the probabilities of all the samples together. I think the formula is this:

ML = P(x1) * P(x2) * P(x3) * ... * P(xn)
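Spelled out with a subscript k for the generator, and assuming the samples are independent (which is what justifies multiplying them), the histogram estimate, the likelihood, its log and the decision rule would look like this. The symbols c_k, b(x) and N_k are notation introduced here for illustration, not taken from the paper.

```latex
% c_k(b(x)): count in the bin b(x) that contains x, in generator k's histogram
% N_k: total number of training samples in generator k's histogram
P_k(x) = \frac{c_k\big(b(x)\big)}{N_k}, \qquad
L_k = \prod_{i=1}^{n} P_k(x_i), \qquad
\log L_k = \sum_{i=1}^{n} \log P_k(x_i), \qquad
\hat{k} = \arg\max_k \, \log L_k
```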

So, referring to the histograms (fig. 2 and fig. 3), if I received a sample set of, say, [35, 40, 45, 45, 46, 50], I would calculate the ML for each generator by finding the bin each sample belongs to in that generator's histogram to get its probability, and then multiplying the results.
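A minimal sketch of that lookup in Python: `bin_probability` finds the bin a value falls into and returns that bin's count divided by the total, and `likelihood` multiplies those values together. The function names, bin edges and counts are hypothetical (a made-up histogram of 8 training samples), not values read from the figures.

```python
from bisect import bisect_right
import math

def bin_probability(x, edges, counts):
    """Histogram estimate of P(x): count of x's bin divided by the total count.

    Bin j covers [edges[j], edges[j+1]); the last bin also includes edges[-1]
    (the numpy.histogram convention). Values outside the range get 0.
    """
    if x < edges[0] or x > edges[-1]:
        return 0.0
    j = min(bisect_right(edges, x) - 1, len(counts) - 1)
    return counts[j] / sum(counts)

def likelihood(samples, edges, counts):
    """Product of the per-sample probabilities (treats samples as independent)."""
    return math.prod(bin_probability(x, edges, counts) for x in samples)

# Hypothetical histogram: 5 bins of width 5, built from 8 made-up training samples.
edges = [30, 35, 40, 45, 50, 55]
counts = [1, 1, 2, 3, 1]

print(likelihood([35, 40, 45, 45, 46, 50], edges, counts))  # equals (1*2*3*3*3*1) / 8**6
print(likelihood([35, 40, 45, 45, 46, 60], edges, counts))  # 0.0: 60 is outside every bin
```

Even with this toy histogram, a single value that lands outside the trained range (or in an empty bin) makes the whole product zero.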

Assuming the fig. 3 histogram contains 134 samples:

ML (fig 3) = (5/134) * (15/134) * (26/134) * (26/134) * (26/134) * (15/134)

Assuming the fig. 2 histogram contains 123 samples:

ML (fig 2) = 0 * (7/123) * (35/123) * (35/123) * (35/123) * (15/123)
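The two products above can be checked with exact rational arithmetic in Python. The fractions are exactly the ones listed; `fractions.Fraction` keeps the result exact, so the fig. 2 value is a true zero rather than floating-point underflow.

```python
from fractions import Fraction
import math

# Per-sample bin probabilities as listed above
# (count in the bin the sample falls into / total samples in that histogram).
fig3 = [Fraction(c, 134) for c in (5, 15, 26, 26, 26, 15)]
fig2 = [Fraction(c, 123) for c in (0, 7, 35, 35, 35, 15)]

L3 = math.prod(fig3)  # exact rational product
L2 = math.prod(fig2)

print(float(L3))  # tiny, but greater than zero
print(L2)         # 0: the single empty bin zeroes the whole product

# Taking logs does not rescue the zero: log(0) is undefined (minus infinity).
try:
    math.log(float(L2))
except ValueError as err:
    print("math.log(0) raised ValueError:", err)
```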

It seems that in the second case my ML would go to 0. But this doesn't seem like an impossible event; it just didn't happen during the training phase. In fact, it seems that a single outlier could make the ML go to zero in this way. Even if I used the log of the probabilities, log(0) is undefined (it tends to minus infinity). So am I doing this correctly, or do I misunderstand the method? I'm new to this concept, so I would appreciate it if someone could shed some light on what I'm missing. Thanks.
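One common way around the zero-probability problem (not necessarily what the paper does) is additive, or Laplace, smoothing: add a small pseudocount α to every bin, including extra bins for values below and above the histogram range, so no value ever gets probability exactly 0. Comparing sums of log-probabilities instead of raw products also avoids numerical underflow for long sample sets. The sketch below assumes NumPy, α = 1, and hypothetical training data for two made-up generators "a" and "b"; the function names are likewise illustrative.

```python
import numpy as np

def smoothed_log_probs(train, edges, alpha=1.0):
    """Log-probability of each bin under add-alpha (Laplace) smoothing.

    Besides the len(edges) - 1 histogram bins, two extra bins hold values
    below edges[0] (index 0) and above edges[-1] (last index), so an
    outlier never gets probability exactly zero.
    """
    train = np.asarray(train, dtype=float)
    counts, _ = np.histogram(train, bins=edges)  # ignores out-of-range values
    below = np.sum(train < edges[0])
    above = np.sum(train > edges[-1])
    full = np.concatenate(([below], counts, [above])).astype(float)
    full += alpha  # pseudocount added to every bin, seen or not
    return np.log(full / full.sum())

def bin_index(samples, edges):
    """Map samples to indices of the extended bin array built above."""
    x = np.atleast_1d(np.asarray(samples, dtype=float))
    idx = np.searchsorted(edges, x, side="right")  # 0 means below edges[0]
    # np.histogram puts x == edges[-1] in the last real bin, not in overflow.
    idx[x == edges[-1]] = len(edges) - 1
    return idx

def log_likelihood(samples, log_probs, edges):
    """Sum of per-sample log-probabilities (assumes independent samples)."""
    return float(np.sum(log_probs[bin_index(samples, edges)]))

def classify(samples, models, edges):
    """Return the generator with the largest log-likelihood, plus all scores."""
    scores = {name: log_likelihood(samples, lp, edges) for name, lp in models.items()}
    return max(scores, key=scores.get), scores

# Hypothetical training data for two generators (not from the paper).
edges = np.arange(30, 60, 5)  # bins [30,35), [35,40), ..., [50,55]
models = {
    "a": smoothed_log_probs([33, 36, 41, 44, 45, 46, 47, 52], edges),
    "b": smoothed_log_probs([41, 42, 43, 46, 47, 48, 48, 49], edges),
}
best, scores = classify([35, 40, 45, 45, 46, 50], models, edges)
print(best, scores)
```

With these made-up numbers generator "b" is chosen even though none of its training samples landed in the bins containing 35 or 50; without smoothing its likelihood would have been exactly 0. The pseudocount α is a tuning choice: larger values flatten the histograms more, and its influence fades as the number of training samples grows.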

Here's the link to the relevant paper by the way.

https://www.google.com/url?sa=t&rct...es.pdf&usg=AFQjCNFy9xsSBifMiHIqESYdQ494XOlvzQ
