voila

Hi

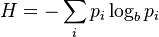

I'm having some trouble understanding Shannon entropy and its relation to "computer" bits (zeros and ones). The Shannon entropy of a random variable is (assume b=2 so that we work in bits)

$$H(X) = -\sum_{i} p(x_i) \log_b p(x_i)$$

and everywhere I've read says it is "the number of bits on the average required to describe the random variable". Yet I can't seem to find a proper demonstration of the connection between that sentence and the equation.

I've also found an example (Example 1.1.2 in Elements of Information Theory, Cover & Thomas) which reads:

"Suppose we have a horse race with eight horses taking part. The probabilities of winning for the eight horses are (1/2, 1/4, 1/8, 1/16, 1/64, 1/64, 1/64, 1/64). The entropy of the horse race is (shows the calculation and equals) 2 bits. Suppose we wish to send a message indicating which horse won the race. One alternative is to send the index of the winning horse. This description requires 3 bits for any of the horses. But the probabilities are not uniform, so it makes sense to use shorter descriptions for the more probable horses, and vice versa, so that we achieve a lower average description length. For example, we could use:

0, 10, 110, 1110, 111100, 111101, 111110, 111111.

The average description length in this case is 2 bits."
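To check the book's numbers, here is a minimal sketch that computes the entropy of the race and the average length of the quoted code. The names `probs`, `codewords`, `entropy_bits` and `average_length` are my own, not from the book.

```python
from fractions import Fraction
from math import log2

# Winning probabilities and codewords exactly as quoted from the example.
probs = [Fraction(1, 2), Fraction(1, 4), Fraction(1, 8), Fraction(1, 16)] + [Fraction(1, 64)] * 4
codewords = ["0", "10", "110", "1110", "111100", "111101", "111110", "111111"]


def entropy_bits(p):
    """Shannon entropy in bits: -sum p_i * log2(p_i)."""
    return -sum(float(q) * log2(float(q)) for q in p if q > 0)


def average_length(p, words):
    """Expected codeword length: sum p_i * len(word_i)."""
    return sum(q * len(w) for q, w in zip(p, words))


print("entropy:", entropy_bits(probs))                        # 2.0
print("average length:", average_length(probs, codewords))    # 2
print("fixed-length index:", average_length(probs, ["000"] * 8))  # 3
```

In this example each codeword length equals log2(1/p) for its horse, which is why the average length lands exactly on the entropy.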

Alright, that works in this case. But why does it have to be that particular code? Why can't I use 1 for the second horse, 01 for the third, and so on, reducing the average description length?

Apart from that, there's another example in the same book (Example 2.1.1) where we have a variable that takes the value 1 with probability p, and the value 0 with probability 1-p. If p is different from 1/2 (that is, if one outcome is more probable), the Shannon entropy is less than one bit. How can I describe it with less than one bit? It makes no sense. If it means "you could just not send a signal for the more probable outcome", wouldn't having a "not sending a signal" possibility effectively mean working with trits?
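For concreteness, this is a small sketch of the entropy of that two-valued variable as a function of p, applying the same formula to the two probabilities p and 1-p. The function name `binary_entropy` and the sample values of p are my own choices for illustration.

```python
from math import log2


def binary_entropy(p):
    """Entropy in bits of a variable that is 1 with probability p, 0 otherwise."""
    if p <= 0.0 or p >= 1.0:
        return 0.0  # a certain outcome carries no information
    return -p * log2(p) - (1 - p) * log2(1 - p)


# Entropy is 1 bit only at p = 1/2 and drops below 1 bit elsewhere.
for p in (0.5, 0.25, 0.1, 0.01):
    print(p, binary_entropy(p))
```

At p = 0.1 this gives roughly 0.47 bits, which is exactly the kind of "less than one bit" value I don't know how to interpret.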

And once again, how does Shannon entropy relate to all this?
