Umar

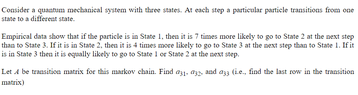

Hi! I have a question about building the transition matrix from the probabilities given in the problem. My main difficulty is working out how to represent those probabilities as matrix entries, for example when one outcome is stated to be 7 times more likely than another. Any help would be appreciated.
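To make the "7 times more likely" part concrete, here is a minimal sketch of one common reading: treat the statement as relative weights for the possible next states and divide by their sum, so the row of the transition matrix adds up to 1. The two-outcome setup and the helper name `row_from_weights` are assumptions for illustration only; they are not taken from the attached problem, which may need a different setup.

```python
from fractions import Fraction


def row_from_weights(weights):
    """Normalize integer relative likelihoods into one transition-matrix row."""
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must have a positive sum")
    # Each entry is its weight divided by the total, so the row sums to 1.
    return [Fraction(w, total) for w in weights]


# Hypothetical state with two possible next states, where the first
# is 7 times as likely as the second (weights 7 and 1).
row = row_from_weights([7, 1])
print(row)  # [Fraction(7, 8), Fraction(1, 8)]
```

Under that reading, if p is the probability of the less likely outcome, then p + 7p = 1, which gives p = 1/8 and 7p = 7/8. Each state of the chain gets its own row built the same way.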

View attachment 6432
