saadhusayn

Say we have a matrix $L$ that maps vector components from an unprimed basis to a rotated primed basis according to the rule $x'_{i} = L_{ij} x_{j}$ (summation over the repeated index $j$ implied). Here $x'_i$ is the $i$th component in the primed basis and $x_{j}$ the $j$th component in the original unprimed basis. Now $x'_{i} = \overline{e}'_i \cdot \overline{x} = \overline{e}'_i \cdot \overline{e}_j x_{j}$. Hence $L_{ij} = \overline{e}'_i \cdot \overline{e}_j$. Thus the matrix equation relating the components in the primed coordinate system to those in the unprimed one in $\mathbb{R}^3$ is

$$ \begin{pmatrix}x'_{1}\\ x'_{2}\\ x'_{3} \end{pmatrix} = \begin{pmatrix} \overline{e}'_1 \cdot \overline{e}_1 & \overline{e}'_1 \cdot \overline{e}_2 & \overline{e}'_1 \cdot \overline{e}_3\\ \overline{e}'_2 \cdot \overline{e}_1 & \overline{e}'_2 \cdot \overline{e}_2 & \overline{e}'_2 \cdot \overline{e}_3 \\ \overline{e}'_3 \cdot \overline{e}_1 & \overline{e}'_3 \cdot \overline{e}_2 & \overline{e}'_3 \cdot \overline{e}_3 \end{pmatrix}\begin{pmatrix}x_{1}\\ x_{2}\\ x_{3} \end{pmatrix} $$

where the $\overline{e}'_i$ and $\overline{e}_j$ are unit basis vectors in the primed and unprimed coordinate systems, respectively.
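To make the rule $L_{ij} = \overline{e}'_i \cdot \overline{e}_j$ concrete, here is a minimal numerical sketch in plain Python. It assumes, purely for illustration, that the primed basis is the unprimed one rotated by an angle `theta` about $\overline{e}_3$; the helper names (`dot`, `rotated_basis`, `direction_cosine_matrix`, `apply`) are hypothetical and not taken from the textbook.

```python
import math

def dot(u, v):
    """Euclidean dot product of two 3-component sequences."""
    return sum(a * b for a, b in zip(u, v))

def rotated_basis(theta):
    """Primed basis vectors written in unprimed components.

    Assumption for this sketch: the primed axes are the unprimed
    axes rotated by theta about e_3.
    """
    c, s = math.cos(theta), math.sin(theta)
    return [(c, s, 0.0), (-s, c, 0.0), (0.0, 0.0, 1.0)]

def direction_cosine_matrix(primed, unprimed):
    """Build L with entries L[i][j] = e'_i . e_j."""
    return [[dot(ep, e) for e in unprimed] for ep in primed]

def apply(M, x):
    """Matrix-vector product M x."""
    return [dot(row, x) for row in M]

E = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]  # unprimed basis
theta = math.pi / 6                                       # illustrative angle
E_primed = rotated_basis(theta)
L = direction_cosine_matrix(E_primed, E)

x = (1.0, 2.0, 3.0)       # components of a fixed vector in the unprimed basis
x_primed = apply(L, x)    # components of the same vector in the primed basis

# Rebuild the vector from its primed components: sum_i x'_i e'_i.
rebuilt = [sum(x_primed[i] * E_primed[i][k] for i in range(3)) for k in range(3)]
```

If the construction is right, `rebuilt` agrees with `x` up to rounding, since both describe the same geometric vector in two bases.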

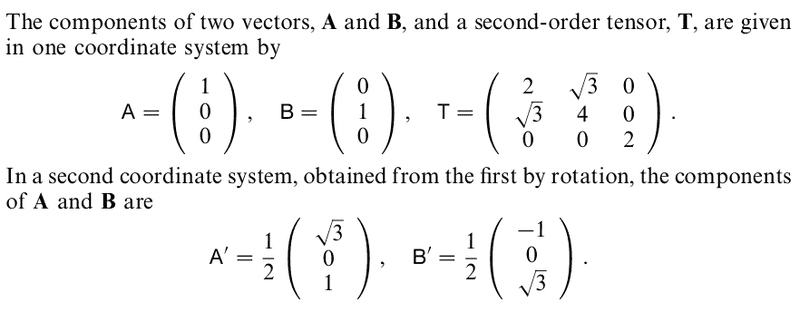

Now I tried to apply the above idea to the following situation (Riley, Hobson and Bence Chapter 26, Problem 2).

I took $\mathbf{A} = \overline{e}_1$ and $\mathbf{B} = \overline{e}_2$. It turns out that the matrix that transforms $\mathbf{A} \rightarrow \mathbf{A}'$ and $\mathbf{B} \rightarrow \mathbf{B}'$ is not the matrix that transforms the unprimed components to the primed components (the one I used above) but the INVERSE (or transpose) of that matrix. I need to know where I am going wrong here. Thank you.
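The observation can be reproduced numerically. The sketch below again assumes an illustrative rotation about $\overline{e}_3$ (not the specific rotation in the textbook problem) and compares two matrices: `L`, which converts the components of a fixed vector from the unprimed to the primed basis, and a hypothetical `R`, defined by the requirement that it carries each basis vector $\overline{e}_j$ to $\overline{e}'_j$, with everything written in unprimed components.

```python
import math

theta = math.pi / 6  # illustrative angle, an assumption of this sketch
c, s = math.cos(theta), math.sin(theta)

E = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]     # unprimed basis
E_primed = [(c, s, 0.0), (-s, c, 0.0), (0.0, 0.0, 1.0)]     # assumed primed basis

def dot(u, v):
    """Euclidean dot product of two 3-component sequences."""
    return sum(a * b for a, b in zip(u, v))

# Component-change matrix: L[i][j] = e'_i . e_j
L = [[dot(ep, e) for e in E] for ep in E_primed]

# Matrix that moves the vectors themselves: R e_j = e'_j,
# so column j of R holds the unprimed components of e'_j.
R = [[E_primed[j][k] for j in range(3)] for k in range(3)]

# Transpose of L, for comparison with R.
L_T = [list(col) for col in zip(*L)]

A = E[0]                                         # A = e_1
A_rotated = [dot(row, A) for row in R]           # e_1 carried onto e'_1
A_components_primed = [dot(row, A) for row in L] # the unrotated e_1, seen from the primed basis
```

In this sketch `R` coincides with `L_T`, while `A_rotated` and `A_components_primed` differ by the sign of the sine term: rotating a vector and re-expressing a fixed vector in rotated axes are described by mutually transposed matrices.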
