Math Amateur


I am reading Andrew McInerney's book: First Steps in Differential Geometry: Riemannian, Contact, Symplectic ... and I am focused on Chapter 3: Advanced Calculus ... and in particular on Section 3.1: The Derivative and Linear Approximation ...

I am trying to fully understand Definition 3.1.1 and need help with an example based on the definition ...

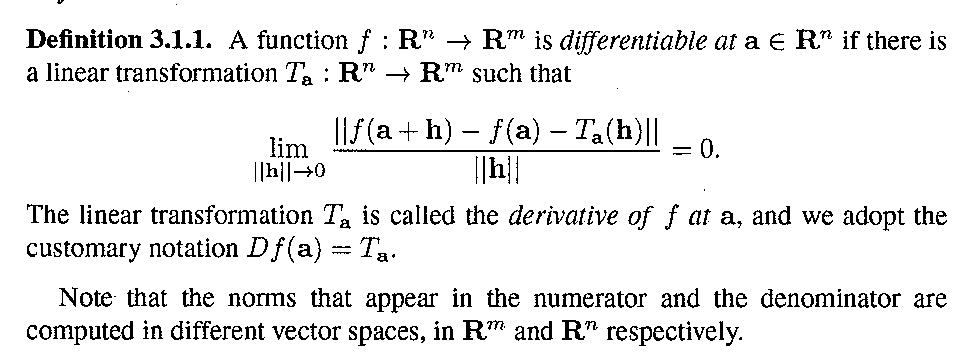

Definition 3.1.1 reads as follows:

I constructed the following example ...

Let ##f: \mathbb{R} \to \mathbb{R}^2 ##

such that ##f = ( f^1, f^2 )##

where ##f^1(x) = 2x## and ##f^2(x) = 3x + 1##

We wish to determine ##T_a(h)## ... We have ##f(a + h) = ( f^1(a + h), f^2(a + h) )= (2a + 2h, 3a + 3h +1 )##

and

##f(a ) = ( f^1(a ), f^2(a ) ) = (2a , 3a +1 )##

Now ... consider ...

##\displaystyle \lim_{ \| h \| \to 0} \frac{ \| f(a + h) - f(a) - T_a(h) \| }{ \| h \| }##

##\displaystyle = \lim_{ \| h \| \to 0} \frac{ \| (2a + 2h, 3a + 3h + 1) - (2a, 3a + 1) - T_a(h) \| }{ \| h \| }##

##\displaystyle = \lim_{ \| h \| \to 0} \frac{ \| ( 2h, 3h ) - T_a(h) \| }{ \| h \| }##

... but how do I proceed from here ... ?

... can I take ##T_a (h) = T_a \cdot h## ... but how do I justify this?

Hope someone can help ...
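A quick numerical sanity check may help here. Any linear map ##T: \mathbb{R} \to \mathbb{R}^2## satisfies ##T(h) = T(h \cdot 1) = h \, T(1)##, so it is determined by the single vector ##T(1)##. The sketch below assumes the candidate ##T_a(h) = h \, (2, 3)##, which is a guess read off from the numerator ##(2h, 3h) - T_a(h)## and is not taken from the book. The helper names `f`, `T` and `quotient` are made up for illustration.

```python
import math


def f(x):
    """The example map f: R -> R^2, f(x) = (2x, 3x + 1)."""
    return (2 * x, 3 * x + 1)


def T(h, v=(2.0, 3.0)):
    """A linear map R -> R^2 of the form T(h) = h * v.

    The default v = (2, 3) is the candidate being tested (an assumption).
    """
    return (h * v[0], h * v[1])


def quotient(a, h, v=(2.0, 3.0)):
    """Evaluate ||f(a + h) - f(a) - T(h)|| / |h| for a nonzero h."""
    fah = f(a + h)
    fa = f(a)
    th = T(h, v)
    numerator = math.hypot(fah[0] - fa[0] - th[0], fah[1] - fa[1] - th[1])
    return numerator / abs(h)


if __name__ == "__main__":
    a = 1.0
    for h in (0.5, 0.25, 0.125, 0.0625):
        # Second column: candidate v = (2, 3); third column: a wrong guess v = (2, 2).
        print(h, quotient(a, h), quotient(a, h, v=(2.0, 2.0)))
```

With ##v = (2, 3)## the numerator vanishes for every ##h##, so the quotient should tend to 0, while a different choice such as ##v = (2, 2)## leaves a numerator of size ##|h|## and the quotient should stay near 1. This only checks the candidate numerically; it is not a proof.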

Peter
