
Aliando


Hi, I'm working on a project to determine how far a pedestrian is from a single digital camera, but having never done optics before, I'm struggling to find the right equation to use. I'd really appreciate any help!

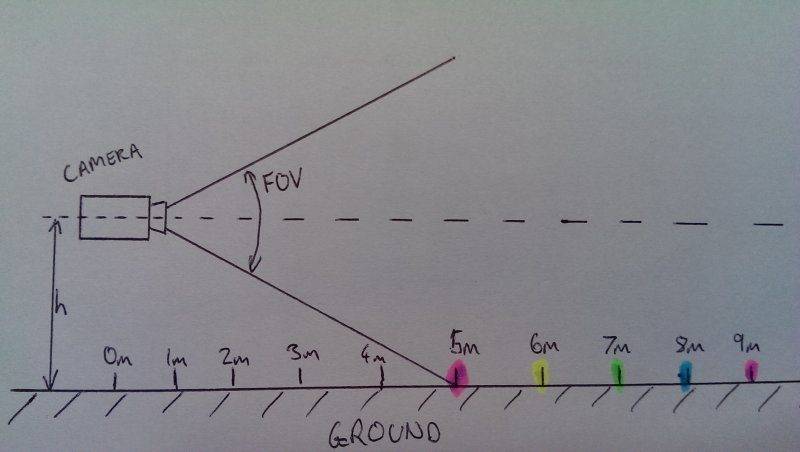

The method I am trying to use assumes you know the height of the camera above the ground, the camera's tilt relative to the ground (I assume keeping it parallel to the ground is easiest), and the pixel density of the digital camera used to capture the image.
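One way the pixel density could enter the calculation is by converting the lens focal length from millimetres into pixels, since image positions are measured in pixels. A minimal sketch, assuming square pixels and that the pixel pitch is the sensor height divided by the number of pixel rows (equivalently, focal length in mm times pixels per mm); the function name and the camera values are hypothetical:

```python
def focal_length_pixels(focal_length_mm: float, sensor_height_mm: float,
                        image_height_px: int) -> float:
    """Convert a focal length in mm to an equivalent focal length in pixels.

    Assumes square pixels: pixel pitch = sensor height / number of pixel rows.
    """
    pixel_pitch_mm = sensor_height_mm / image_height_px  # size of one pixel on the sensor
    return focal_length_mm / pixel_pitch_mm  # same as focal_length_mm * pixels_per_mm


# Hypothetical camera: 4 mm lens, 3.6 mm tall sensor, 1080 pixel rows
f_px = focal_length_pixels(4.0, 3.6, 1080)
print(round(f_px, 1))  # 1200.0
```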

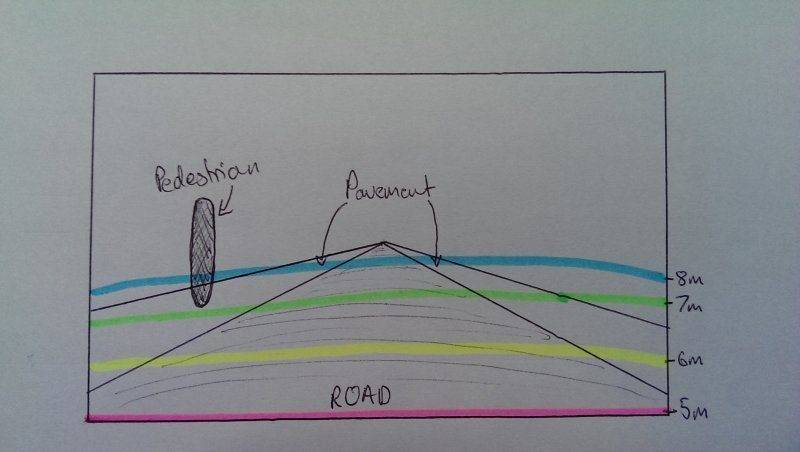

Then the pixel height of the pedestrian's feet in the captured image should correlate with the pedestrian's distance from the camera.
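This correlation can be written down with similar triangles, assuming a pinhole camera, flat ground, and no lens distortion. Let $h$ be the camera height, $f$ the focal length in pixels, $c_y$ the image row of the optical centre, $v$ the row of the feet (rows counted downward), and $Z$ the horizontal distance to the feet. With the optical axis parallel to the ground, the ray to the feet drops $h$ over $Z$, while on the sensor the feet sit $v - c_y$ pixels below centre:

$$\frac{v - c_y}{f} = \frac{h}{Z} \quad\Longrightarrow\quad Z = \frac{f\,h}{v - c_y}$$

If the camera is instead tilted down by an angle $\theta$, the ray to the feet lies $\theta + \arctan\frac{v - c_y}{f}$ below the horizontal, so

$$Z = \frac{h}{\tan\!\left(\theta + \arctan\dfrac{v - c_y}{f}\right)},$$

which reduces to the first result when $\theta = 0$. Feet lower in the image (larger $v$) therefore mean a closer pedestrian.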

Side View:

POV of Camera:

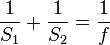

I have looked at using the thin lens equation

$$\frac{1}{S_1} + \frac{1}{S_2} = \frac{1}{f}$$

where $S_1$ is the distance from the object to the lens, $S_2$ is the distance from the lens to the image, and $f$ is the focal length, but without success. I also have no idea how to relate the pedestrian's distance to the pixel height of the feet (how far up the captured image they appear).
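The thin lens equation on its own does not give the distance because, for a pedestrian several metres away, $S_1 \gg f$ and so $S_2 \approx f$ (for example, $f = 4$ mm and $S_1 = 5000$ mm give $S_2 = 5000/1249 \approx 4.003$ mm). The camera then behaves like a pinhole and the distance comes from the geometry of the ray to the feet instead. A minimal sketch of both calculations, assuming a pinhole model, flat ground, no lens distortion, and rows counted downward from the top of the image; the function names and example numbers are hypothetical:

```python
import math


def thin_lens_image_distance(s1: float, f: float) -> float:
    """Solve 1/S1 + 1/S2 = 1/f for S2 (same length units as the inputs)."""
    return 1.0 / (1.0 / f - 1.0 / s1)


def ground_distance(v_px: float, cy_px: float, f_px: float,
                    camera_height: float, tilt_rad: float = 0.0) -> float:
    """Horizontal distance to a point on flat ground seen at image row v_px.

    Assumed pinhole model: cy_px is the row of the optical centre, f_px is the
    focal length in pixels, tilt_rad is the downward tilt of the optical axis
    (0 = parallel to the ground). Result is in the units of camera_height.
    """
    # Angle below the horizontal of the ray passing through row v_px
    angle_below_horizon = tilt_rad + math.atan2(v_px - cy_px, f_px)
    if angle_below_horizon <= 0:
        raise ValueError("row is at or above the horizon; the ray never hits the ground")
    return camera_height / math.tan(angle_below_horizon)


# For an object 5 m (5000 mm) away, the image forms almost exactly at f
print(thin_lens_image_distance(5000.0, 4.0))  # about 4.0032 (mm)

# Level camera 1.5 m high, f = 1200 px, centre row 540: feet 120 rows below centre
print(ground_distance(660.0, 540.0, 1200.0, 1.5))  # about 15.0 (m)
```

In practice $f$ in pixels and $c_y$ are usually obtained by calibrating the camera rather than from the datasheet, and the tilt matters a lot: a small error in $\theta$ shifts distant pedestrians much more than near ones.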

Thanks in advance! I'm really stuck.