Danny89

Hey,

I have a linear algebra exam tomorrow and am finding it hard to figure out how to calculate an orthogonal projection onto a subspace.

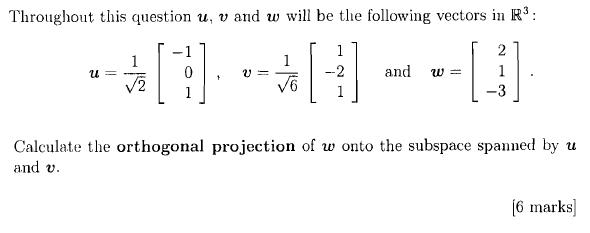

Here is the actual question type i am stuck on:

I have spent ages searching the depths of Google and other such places for a solution, but with no luck. I am really stuck, and I would greatly appreciate it if someone could give me a helping hand and try to explain this to me.

Thanks.
