
TheBigDig


- Homework Statement

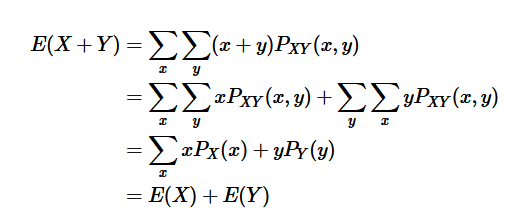

- Prove that [tex]E[aX+bY] = aE[X]+bE[Y][/tex]

- Relevant Equations

- [tex]E[X] = \sum_{x=0}^{\infty} x p(x)[/tex]
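Below is a small numerical sanity check of the definition above, applied to a made-up joint pmf. The joint probabilities and the helper names `marginal`, `expectation` and `expectation_linear` are hypothetical illustrations. The sums run over a finite support instead of x = 0 to infinity, and exact `Fraction` arithmetic avoids floating-point noise. X and Y are deliberately dependent here, because linearity of expectation does not require independence.

```python
from fractions import Fraction

# Hypothetical joint pmf p(x, y) of two dependent discrete random variables,
# stored as {(x, y): probability}. The probabilities sum to 1.
joint = {
    (0, 0): Fraction(1, 8),
    (0, 1): Fraction(1, 4),
    (1, 0): Fraction(1, 8),
    (2, 1): Fraction(1, 2),
}

def marginal(joint, axis):
    """Sum the joint pmf over the other variable (axis 0 -> p_X, axis 1 -> p_Y)."""
    out = {}
    for pair, p in joint.items():
        value = pair[axis]
        out[value] = out.get(value, 0) + p
    return out

def expectation(pmf):
    """E[X] = sum of x * p(x) over the (finite) support of the pmf."""
    return sum(x * p for x, p in pmf.items())

def expectation_linear(joint, a, b):
    """E[aX + bY] computed directly from the joint pmf."""
    return sum((a * x + b * y) * p for (x, y), p in joint.items())

a, b = 3, -2
lhs = expectation_linear(joint, a, b)
rhs = a * expectation(marginal(joint, 0)) + b * expectation(marginal(joint, 1))
print(lhs, rhs)  # prints: 15/8 15/8
```

Both sides agree even though p(0,0) = 1/8 differs from p_X(0) p_Y(0) = 3/32, so the variables are not independent.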

Apologies if this isn't the right forum for this. In my stats homework we have to prove that the expected value of aX + bY is aE[X] + bE[Y], where X and Y are random variables and a and b are constants. I found the proof in the image above, but I'm a little rusty with summations. How is the jump from the second line to the third line made?
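Since the image's exact lines aren't reproduced here, this is a sketch of the standard discrete version of the proof, assuming it works with the joint pmf p(x,y) of X and Y. The marginal notation p_X and p_Y is an assumption, not taken from the image. The step that usually causes trouble is the third line: x does not depend on y, so it can be pulled out of the inner sum over y (and similarly y out of the sum over x, after swapping the order of summation, which is allowed when the sums converge absolutely). What remains inside, [tex]\sum_y p(x,y)[/tex], is exactly the marginal pmf p_X(x).

[tex]
\begin{aligned}
E[aX+bY] &= \sum_x \sum_y (ax+by)\,p(x,y) \\
&= a\sum_x \sum_y x\,p(x,y) + b\sum_x \sum_y y\,p(x,y) \\
&= a\sum_x x \sum_y p(x,y) + b\sum_y y \sum_x p(x,y) \\
&= a\sum_x x\,p_X(x) + b\sum_y y\,p_Y(y) \\
&= aE[X] + bE[Y]
\end{aligned}
[/tex]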