I recently upgraded my 7+ year old computer. I found a good deal on an HP Omen with a GeForce RTX 2060 graphics card.

So not only was I able to install the latest build of Blender, but I was also able to go back and tweak some renders I had done on the old computer.

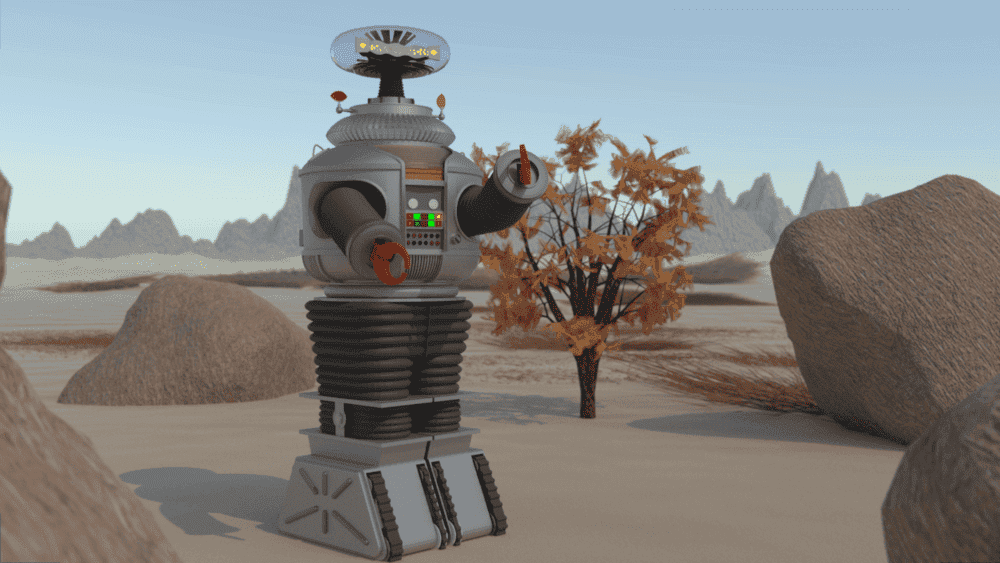

For example, a while back I had done a render of the robot from Lost in Space. It wasn't necessarily a bad render, but it could have used some extra work. The problem was that on my old computer, the kind of material adjustments that give a render that little something extra required test renders to see the result, and each one could take a long time. As a result, I just wasn't very enthusiastic about making those small adjustments.

Below is the newer version.

The robot model itself is unchanged, but the textures have been tweaked. The scene has also been filled out a bit: the original just had the crags on the horizon, while the tree/shrub, foreground rocks and ground cover are new. I also added a bit of focal blur and a "haze" to give the scene more depth.
