Justice Hunter

Wasn't really sure how to ask this question since it's kind of niche.

I have 3 cameras, which have the property that they occupy no physical space, and have no lens. They are defined only by the angle of their view.

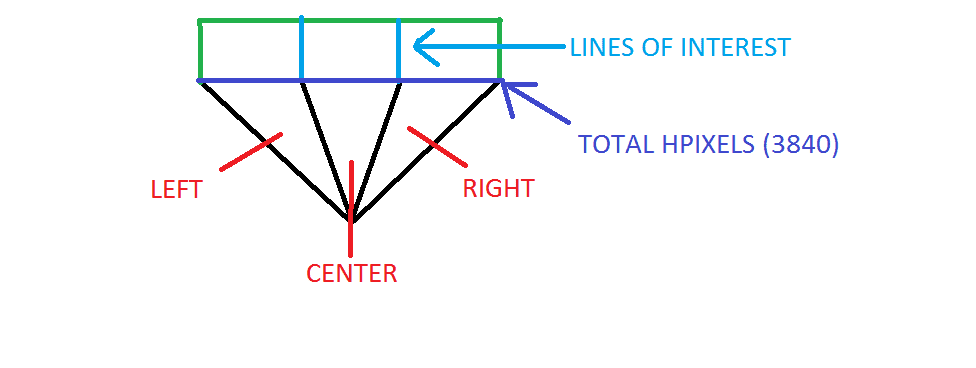

Each of these cameras captures a certain field and takes an image. A camera always creates an image that's 1280 pixels wide and 720 pixels high. The other two cameras, Left and Right, are rotated about the center camera's axis by an arbitrary amount and extend the center camera's image horizontally. So when the Left and Right cameras are each rotated by an angle equal to the center camera's angle of view, the combined field of view spans 3 x 1280 = 3840 pixels. The illustration reflects this.

Additionally, if the Left and Right cameras are placed at 0 rotation from the center camera, the combined field of view is at its minimum of 1280 pixels.

So that's the setup. The issue arises when I want to find the relationship between the light blue lines (Lines of Interest) and the angle of view of the L and R cameras. Essentially, as the angle of the Left and Right cameras increases or decreases while the center camera's angle stays the same, the position of the Lines of Interest changes, as does the total extent of the field of view (the purple line).

My problem is finding the overlap in pixels when the angle of view of the Left and Right cameras changes. For example, if the L and R cameras are rotated 10 degrees from the center camera, the position of those blue lines changes to some number between 0 and 1280 (0 being no change in angle, and 1280 being a change in angle equal to the angle of view of the center camera).
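To make the quantity being asked about concrete, here is a minimal sketch of two candidate relationships. Both are assumptions, not something the setup above settles: the first assumes pixels are spread evenly over the angle of view, and the second assumes an idealized pinhole projection onto a flat image plane. All function and constant names are hypothetical. Both candidates agree with the stated endpoints (0 at no rotation, 1280 at a rotation equal to the angle of view) and differ only in between.

```python
import math

IMAGE_WIDTH = 1280  # pixels per camera image, from the setup above


def line_position_linear(rotation_deg, fov_deg, width=IMAGE_WIDTH):
    """Line-of-interest position, ASSUMING pixels map evenly to angle."""
    return width * rotation_deg / fov_deg


def line_position_pinhole(rotation_deg, fov_deg, width=IMAGE_WIDTH):
    """Line-of-interest position, ASSUMING a flat image plane (pinhole model).

    Only meaningful for fov_deg < 180 and 0 <= rotation_deg <= fov_deg.
    """
    half_fov = math.radians(fov_deg) / 2
    # Distance from the camera point to the image plane, measured in pixels.
    focal_px = (width / 2) / math.tan(half_fov)
    # Angle of the side camera's inner edge ray relative to the center axis.
    edge_angle = math.radians(rotation_deg) - half_fov
    return width / 2 + focal_px * math.tan(edge_angle)


def overlap_pixels(line_position, width=IMAGE_WIDTH):
    """Pixels of the center image still covered by a side camera."""
    return width - line_position
```

For the 10 degree example, `overlap_pixels(line_position_pinhole(10, fov_deg))` would give the overlap under the pinhole assumption once a value for the center camera's angle of view `fov_deg` is chosen; the question does not fix that value.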

I searched online for anything remotely close to this, but most of what I found dealt with cameras that take up real space, which involves the real physical behaviour of light and lenses, and that's not what I'm looking for. I'm looking for a more mathematical explanation, mostly about relationships between triangles.
