Earlier discussion: https://www.physicsforums.com/threads/new-lhc-results-2015-tuesday-dec-15-interesting-diphoton-excess.84798 and the status as of Monday.

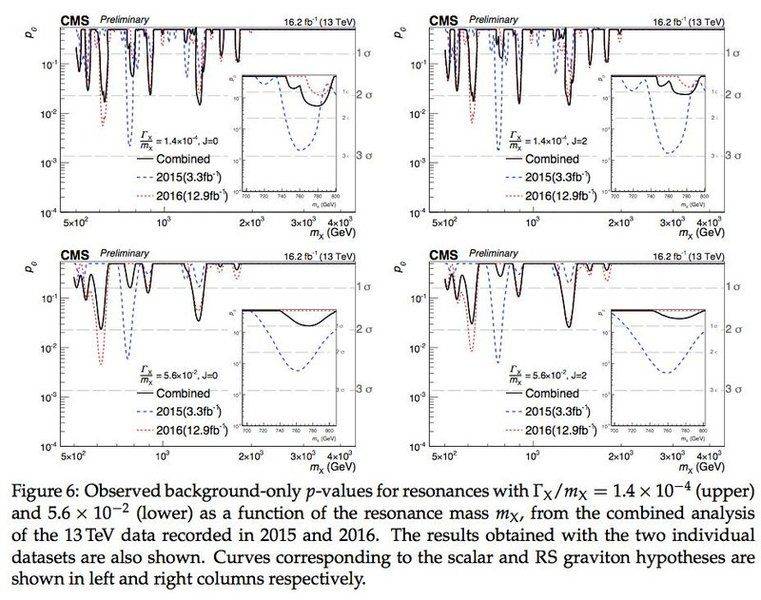

CMS released their conference note a bit earlier. They see absolutely nothing at the mass range where the excess appeared in 2015.

It is a bit curious that they removed events where both photons were detected in the endcap. That category was shown in earlier analyses, so why not this year?

Nothing public from ATLAS so far.

Summary plot; a peak in the data corresponds to a downward spike (lower = more significant):