DoobleD said:

Ahh that makes sense ! I was going to ask then if f(1), f(2) etc. are the components of the "unitary" basis (1, 0, 0, ...), (0, 1, 0, ...), and so on (not necessarily in that order, f(-3) could be the component of, say, the unit vector (0, 1, 0, ...)). But

@Mark44 answered f(1), f(2), ... are not components :

We can discuss some technicalities. First, there is the basic question "Is a component of a vector also a vector?"

In a physics textbook, one sees vectors "resolved" into components, which are themselves vectors. In another textbook, we might see a "component" of a vector defined as the coefficient that appears when we express the vector as a sum of basis vectors. So if ##V = a_1 E_1 + a_2 E_2## with ##V, E_1, E_2## vectors and ##a_1, a_2## scalars, what are the "components" of ##V##? Are they ##a_1, a_2## or are they ##a_1 E_1, a_2 E_2##? And if ##V## is represented as an ordered tuple ##(a_1, a_2)##, is the "##a_1##" in this representation taken to mean precisely the scalar ##a_1##, or is it shorthand notation for ##a_1 E_1##?

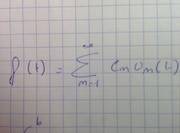

I don't have any axe to grind on those questions of terminology. I'll go along with the prevailing winds. But, for the moment, let's take the outlook of a physics textbook and speak of the "components" of a vector as being vectors. Suppose we want to resolve a function (considered as a vector) into other vectors. The notation "f(1)" denotes a real number, not a function. So you can't resolve a function (regarded as a vector) into a sum of other functions (regarded as vectors) using only a set of scalars f(1), f(2), ... To resolve f into other functions, we can adopt the convention that "(f(1), f(2), f(3), ...)" represents a sum of vectors. To do that, we can define "f(k)" to denote the scalar f(k) times the function ##e_k(x)##, where ##e_k(x)## is defined by: ##e_k(x) = 1## when ##x = k## and ##e_k(x) = 0## otherwise.
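To make that convention concrete, here is a minimal Python sketch. It assumes a function defined only on a small finite set of integers, and the names `e`, `resolve` and the sample `f` are made up for illustration. It builds the component functions f(k) times ##e_k## and checks that adding them pointwise gives back f.

```python
def e(k):
    """Basis function e_k: equals 1 at x == k and 0 everywhere else."""
    return lambda x: 1.0 if x == k else 0.0


def resolve(f, domain):
    """Return the component functions x -> f(k) * e_k(x), one for each k in domain."""
    return [(lambda x, k=k: f(k) * e(k)(x)) for k in domain]


def f(x):
    # Hypothetical sample function, chosen only for illustration.
    return x * x + 1.0


domain = [1, 2, 3, 4]
components = resolve(f, domain)

# Adding the component functions pointwise reproduces f on the domain.
rebuilt = [sum(c(x) for c in components) for x in domain]
original = [f(x) for x in domain]
print(rebuilt == original)
```

Note that each entry of `components` is itself a function (a vector in the function space), while `f(1)` on its own is just a number.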

If you express f(x) as a sum of functions defined by sines and cosines, then you are not using the functions ##e_k(x)## as the basis functions. So there is no reason to expect that a scalar such as f(1) has anything to do with the coefficients that appear when you expand f as a sum of sines and cosines.
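As a rough numerical illustration of that point, the sketch below takes the example ##f(x) = x## on ##[-\pi, \pi]## (my choice, not something from the thread) and approximates its first two sine coefficients with a midpoint rule. The helper name `sine_coefficient` and the step count are assumptions made for this example.

```python
import math


def f(x):
    # Example function chosen for illustration: f(x) = x on [-pi, pi].
    return x


def sine_coefficient(f, n, steps=20000):
    """Approximate b_n = (1/pi) * integral of f(x) sin(n x) over [-pi, pi] (midpoint rule)."""
    dx = 2 * math.pi / steps
    total = 0.0
    for i in range(steps):
        x = -math.pi + (i + 0.5) * dx
        total += f(x) * math.sin(n * x) * dx
    return total / math.pi


b1 = sine_coefficient(f, 1)
b2 = sine_coefficient(f, 2)
print(f(1), f(2))  # values of f at the points 1 and 2
print(b1, b2)      # coefficients of sin(x) and sin(2x)
```

For this f the exact coefficients are ##b_1 = 2## and ##b_2 = -1##, while ##f(1) = 1## and ##f(2) = 2##, so the two sets of numbers are unrelated.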

The dot product formula for the inner product assumes the two vectors being "dotted" are represented in the same orthonormal basis.
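Here is a small sketch of what goes wrong otherwise, assuming vectors in the plane and a deliberately non-orthonormal basis ##E_1 = (1, 0)##, ##E_2 = (1, 1)## (all names and numbers are illustrative). Multiplying coefficients term by term gives the wrong answer, and the correct value is recovered only when the dot products of the basis vectors with each other are taken into account.

```python
def dot(u, v):
    """Ordinary dot product of two coordinate lists in the standard basis."""
    return sum(x * y for x, y in zip(u, v))


def combine(coeffs, basis):
    """Build the vector sum_i coeffs[i] * basis[i] in standard coordinates."""
    dim = len(basis[0])
    return [sum(c * b[j] for c, b in zip(coeffs, basis)) for j in range(dim)]


# A basis that is NOT orthonormal: E_2 is neither unit length nor orthogonal to E_1.
basis = [[1.0, 0.0], [1.0, 1.0]]
a = [1.0, 1.0]  # coefficients of V in this basis
b = [0.0, 1.0]  # coefficients of W in this basis

V = combine(a, basis)
W = combine(b, basis)

true_dot = dot(V, W)  # dot product computed in standard coordinates
naive = dot(a, b)     # a_1 b_1 + a_2 b_2, which ignores the basis

# Matrix of dot products between basis vectors (the Gram matrix).
gram = [[dot(p, q) for q in basis] for p in basis]
corrected = sum(a[i] * gram[i][j] * b[j] for i in range(2) for j in range(2))

print(true_dot, naive, corrected)
```

When the basis is orthonormal, `gram` is the identity matrix and `corrected` collapses to the familiar sum of products of coefficients.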

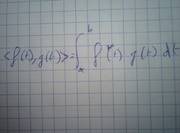

Then, why would the inner product be defined the way it is ? The inner product of f and g is an infinite sum of f(1)g(1) + f(2)g(2) + ... .

That's an intuitive way to think about it. To make a better analogy to the actual definition as an integral, you should put some dx's in that expression, unless you are talking about functions defined only on the natural numbers.
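To see why the dx's matter, here is a sketch assuming the example functions ##f(x) = x## and ##g(x) = x^2## on ##[0, 1]##, where the integral of ##f(x) g(x)## is 1/4. The function names are made up for this illustration. The sum with dx settles down as the grid gets finer, while the bare sum of products just grows with the number of sample points.

```python
def inner_product(f, g, a, b, n):
    """Midpoint Riemann sum for the integral of f(x) g(x) over [a, b]."""
    dx = (b - a) / n
    return sum(f(a + (i + 0.5) * dx) * g(a + (i + 0.5) * dx) * dx for i in range(n))


def raw_sum(f, g, a, b, n):
    """Same sample points, but without the dx factor."""
    dx = (b - a) / n
    return sum(f(a + (i + 0.5) * dx) * g(a + (i + 0.5) * dx) for i in range(n))


def f(x):
    return x


def g(x):
    return x * x


for n in (10, 100, 1000):
    print(n, inner_product(f, g, 0.0, 1.0, n), raw_sum(f, g, 0.0, 1.0, n))
```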

And I thought the inner product was the analog of the dot product, which is, for vectors A and B, a sum of a1b1 + a2b2 + ..., with a1, b1, a2, b2, ... components of the vectors A and B.

You are correct that (intuitively) the inner product (when defined as an integral) is an analogy to the dot product formula for vectors (which assumes the two vectors are represented in the same orthonormal basis).

Some mathematical technicalities are:

1) In pure mathematics, an inner product (not "the" inner product) has an abstract definition. It isn't defined by a formula.

2) The connection between the inner product on a vector space and the vectors is that the inner product is used to compute the "norm" of a vector (e.g. its "length").

3) In pure mathematics, vectors don't necessarily have a "norm". The abstract definition of a "vector space" doesn't require that vectors have a length. On the same vector space, it may be possible to define different "norms". (In the case of vector spaces of functions, we often see different norms defined.)

4) The set of scalars in a vector space isn't necessarily the set of real numbers. When we use the set of complex numbers as the scalars then we encounter a formula for a dot product that involves taking complex conjugates.
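Point 4 can be illustrated with a short sketch using Python's built-in complex numbers. I am assuming the convention that conjugates the first argument (some texts conjugate the second instead), and the function names are hypothetical. Without the conjugate, a nonzero vector can end up with "length squared" equal to zero.

```python
def inner(u, v):
    """Inner product on C^n, conjugating the first argument (one common convention)."""
    return sum(x.conjugate() * y for x, y in zip(u, v))


def naive(u, v):
    """Real-style formula with no conjugation, shown only for comparison."""
    return sum(x * y for x, y in zip(u, v))


v = [1j, 1 + 0j]  # a nonzero vector in C^2

print(inner(v, v))  # a positive real number, usable as a squared length
print(naive(v, v))  # zero, even though v is not the zero vector
```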

So, the computation of both operations is the same, and the inner product is a generalization of the dot product, but somehow in the case of inner product, what the operation multiplies are not vector-like components ?

The dot product formula doesn't multiply together "vector-like components". It involves the multiplication of scalars.

You could write a formula for the dot product of two sums of vectors that involves the sum of dot products of vectors. Is that what you mean by multiplying vector-like components?