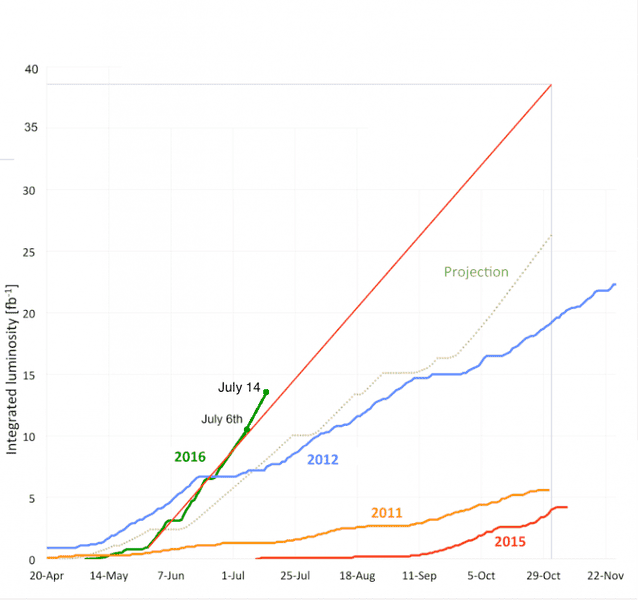

Performance in the last two weeks has been amazing. The week from Monday to Monday set a new record with 3.1/fb collected (nearly as much data as in all of last year), and the LHC experiments could take data 80% of the time. About 12 weeks of data-taking remain for this year, and 13.5/fb has been collected already (more than 3 times the 2015 dataset).

The LHC is now reliably reaching the design luminosity at the start of a run, and most runs last so long that they are dumped by the operators rather than ended by a technical issue. The number of protons goes down over time, so after about 24 hours it is more efficient to dump the remaining beam and accelerate new protons.
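To illustrate why dumping a still-running fill can pay off, here is a rough toy model, not the actual procedure used by the operators. It assumes the luminosity decays exponentially during a fill and that refilling costs a fixed turnaround time without collisions. The lifetime (40 hours) and turnaround (6 hours) are made-up illustrative numbers, and the function names are hypothetical.

```python
import math


def average_luminosity(fill_hours, lifetime_hours, turnaround_hours, peak=1.0):
    """Mean luminosity over one cycle (fill plus turnaround), in units of `peak`.

    Toy assumption: luminosity decays as peak * exp(-t / lifetime_hours).
    """
    # Integral of the exponential decay from 0 to fill_hours.
    integrated = peak * lifetime_hours * (1.0 - math.exp(-fill_hours / lifetime_hours))
    # No collisions happen during the turnaround, but the clock keeps running.
    return integrated / (fill_hours + turnaround_hours)


def best_fill_length(lifetime_hours, turnaround_hours, max_hours=72.0, step=0.1):
    """Scan fill lengths and return the one with the highest average luminosity."""
    n_steps = round(max_hours / step)
    candidates = [i * step for i in range(1, n_steps + 1)]
    return max(
        candidates,
        key=lambda t: average_luminosity(t, lifetime_hours, turnaround_hours),
    )


# Illustrative values only, not measured LHC parameters.
LIFETIME_HOURS = 40.0
TURNAROUND_HOURS = 6.0

if __name__ == "__main__":
    best = best_fill_length(LIFETIME_HOURS, TURNAROUND_HOURS)
    print(f"Best fill length in this toy model: {best:.1f} hours")
```

With these assumed numbers the optimum comes out at roughly 20 hours. A longer turnaround or a slower decay pushes it later, a faster decay pushes it earlier.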

The SPS vacuum issue won't get resolved this year, but there are still some clever ideas for increasing the collision rate a bit more.
