MickN

Hi there,

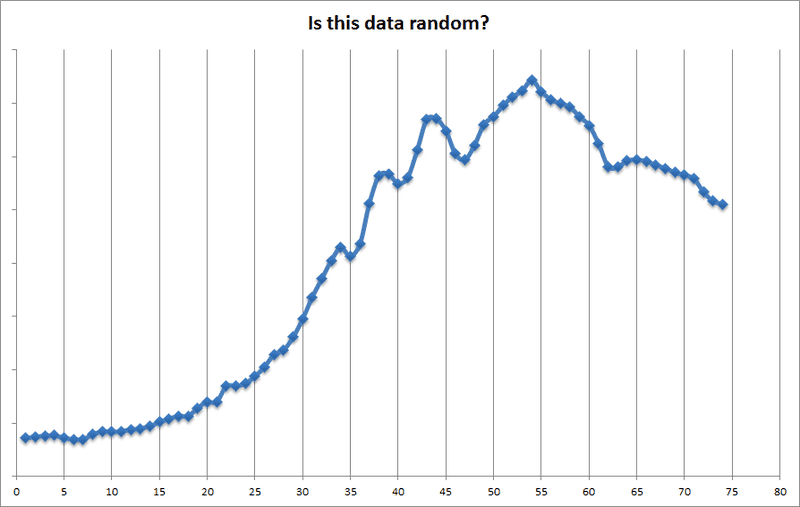

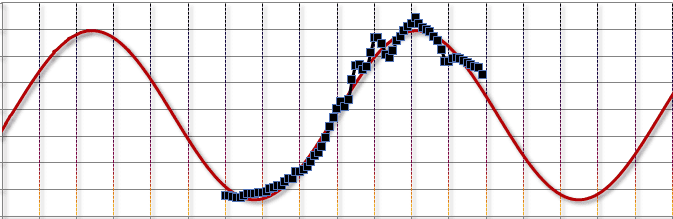

I have recently come across some data that is supposed to be random, but I don't think it is. I graphed it out and it sure doesn't look random. I also ran a couple of statistical tests, such as the runs test, and they all say "not random." Visually, the data looks like a piece of a complex waveform (I've done a lot of work with sound synthesis, and the data looks like something I'd see in the window of an FM synthesizer). If the data in question is not random, that is really very important. If the data is forming a complex waveform, the implications are profound.

I am not revealing what the data is right now, because anyone who knows what it is will say "impossible," and their brains will turn off. I will tell you this much about the data: it consists of only 74 numbers. Each number is supposed to be the sum of an extremely large number of random, independent factors. A reasonable metaphor: imagine you have a million dice. Imagine you - or something - rolls them all every second for the entire day and sums up the results of all of them. Now imagine doing that for 74 days. That's what this data is like. I think data like that should obey the central limit theorem and be normally distributed. This data is not - not even close. And when you graph it out, this is what it looks like:

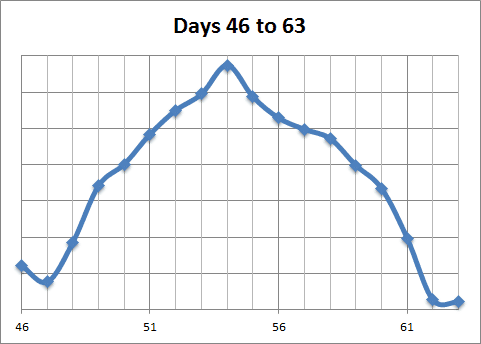
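Two of the claims above can be put into numbers. The post doesn't say which runs test was used, so this is a minimal sketch of one common version, the Wald-Wolfowitz runs test about the median with the usual normal approximation. It also works out what the dice metaphor predicts for a single day's total, assuming fair six-sided dice and 86,400 rolls per day (one per second). The function names `daily_sum_stats` and `runs_test` are hypothetical helpers, not anything from the original analysis.

```python
import math
from statistics import median


def daily_sum_stats(n_dice, rolls_per_day):
    """Mean and standard deviation of one day's total in the dice metaphor.

    Assumes fair six-sided dice, every roll independent. One fair die has
    mean 3.5 and variance 35/12; for a sum of independent rolls both add up.
    """
    n = n_dice * rolls_per_day  # total number of die rolls in one day
    mean = 3.5 * n
    sd = math.sqrt(35 / 12 * n)
    return mean, sd


def runs_test(values):
    """Wald-Wolfowitz runs test about the median, normal approximation.

    Returns (runs, expected_runs, z). Values equal to the median are dropped.
    Too few runs (z very negative) means long stretches above or below the
    median, i.e. trends; too many runs (z very positive) means excessive
    alternation.
    """
    med = median(values)
    above = [v > med for v in values if v != med]
    n1 = sum(above)          # points above the median
    n2 = len(above) - n1     # points below the median
    if n1 == 0 or n2 == 0:
        raise ValueError("need values on both sides of the median")
    # A new run starts every time the above/below label changes.
    runs = 1 + sum(1 for a, b in zip(above, above[1:]) if a != b)
    n = n1 + n2
    expected = 2 * n1 * n2 / n + 1
    variance = 2 * n1 * n2 * (2 * n1 * n2 - n) / (n ** 2 * (n - 1))
    if variance <= 0:
        raise ValueError("too few points for the normal approximation")
    z = (runs - expected) / math.sqrt(variance)
    return runs, expected, z
```

For the metaphor as stated, `daily_sum_stats(1_000_000, 86_400)` gives a mean of 302,400,000,000 and a standard deviation of about 502,000, so under those assumptions every daily total would sit within a tiny fraction of a percent of the mean. To test the 74 values, pass them as a list to `runs_test`; an absolute `z` above about 1.96 is the conventional two-sided 5% cutoff.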

I'd really like to know whether people agree with me that this data is not random, and whether they agree it looks like part of a waveform. Of course, it doesn't have to be both. The non-randomness is more obvious when you focus on one period at a time. For instance, look at days 46 to 63, below. See how the first half (days 46 to 54) is a mirror image of the second half (days 54 to 63):

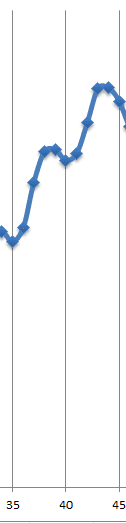
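The mirror-image observation can be given a single number by correlating the days before a center day with the days after it, read backwards. This is a minimal sketch, assuming the 74 values sit in a Python list with day 1 at index 0; `pearson_r` and `mirror_correlation` are hypothetical helpers written for illustration. An `r` near +1 means the two sides mirror each other around the center day. The number is easiest to interpret if the window is picked before looking at the graph.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def mirror_correlation(values, center_day, k):
    """Correlate the k days before center_day with the k days after it.

    Days are numbered from 1, so day d is values[d - 1]. The pairs compared
    are (center_day - i, center_day + i) for i = 1..k, so a perfect mirror
    image around center_day gives r = 1.
    """
    c = center_day - 1  # 0-based index of the center day
    if c - k < 0 or c + k >= len(values):
        raise IndexError("window runs off the end of the data")
    left = [values[c - i] for i in range(1, k + 1)]
    right = [values[c + i] for i in range(1, k + 1)]
    return pearson_r(left, right)
```

For the window in the post, `mirror_correlation(data, center_day=54, k=8)` would compare days 46 to 53 with days 62 down to 55, where `data` is the (unrevealed) list of 74 values.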

Would that happen randomly? Now look at days 34 to 45.

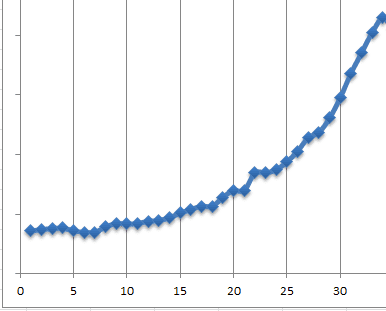

See how it makes two slanted S shapes? And finally, check out the first 34 days:

That sure doesn't look like it's going up at a random rate. It looks like a steady, smooth curve. And the whole thing comes really close to a simple sine wave:
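To check how close the series comes to a single sine wave, one standard approach (not necessarily what was done for the graph) is linear least squares: for a fixed period P, fit y = a·sin(2πt/P) + b·cos(2πt/P) + c, then scan a range of candidate periods and keep the one with the smallest squared error. This sketch uses only the standard library and assumes one time step per day, with t = 0 for day 1; the helper names and the period range you scan are assumptions for illustration.

```python
import math


def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def _solve3(a, b):
    """Solve the 3x3 linear system a @ x = b with Cramer's rule."""
    d = _det3(a)
    x = []
    for col in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]
        x.append(_det3(m) / d)
    return x


def fit_sine_fixed_period(values, period):
    """Least-squares fit of y = a*sin(wt) + b*cos(wt) + c for one period.

    t runs 0, 1, 2, ... (one step per day). Returns (a, b, c, sse).
    """
    w = 2 * math.pi / period
    rows = [(math.sin(w * t), math.cos(w * t), 1.0) for t in range(len(values))]
    # Normal equations: (X^T X) beta = X^T y
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    xty = [sum(r[i] * y for r, y in zip(rows, values)) for i in range(3)]
    a, b, c = _solve3(xtx, xty)
    sse = sum((y - (a * r[0] + b * r[1] + c)) ** 2 for r, y in zip(rows, values))
    return a, b, c, sse


def best_sine_fit(values, periods):
    """Return (period, a, b, c, sse) for the candidate period with least SSE."""
    best = None
    for p in periods:
        a, b, c, sse = fit_sine_fixed_period(values, p)
        if best is None or sse < best[4]:
            best = (p, a, b, c, sse)
    return best
```

The fitted amplitude is `math.hypot(a, b)`, and `1 - sse / sum((y - mean) ** 2 for y in values)` gives an R² for how much of the variation a single sine wave accounts for.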

Please let me know what you think. After a few people have responded, I'll reveal what the data is, and everybody will say "holy @#&*." Thanks :)