Math Amateur

I am reading Bruce N. Cooperstein's book: Advanced Linear Algebra (Second Edition) ... ...

I am focused on Section 10.3 The Tensor Algebra ... ...

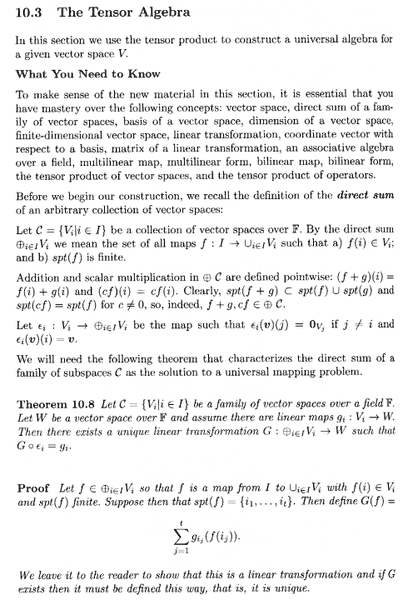

I need help in order to get a basic understanding of Theorem 10.8, which is a theorem concerning the direct sum of a family of subspaces as a solution to a universal mapping problem (UMP) ... the theorem is preliminary to tensor algebras ...

I am struggling to understand how the function ##G## as defined in the proof actually gives us ##G \circ \epsilon_i = g_i## ... ... if I see the explicit mechanics of this I may understand the functions involved better ... and hence the whole theorem better ...

Theorem 10.8 (plus some necessary definitions and explanations) reads as follows:

In the above we read the following:

" ... ... Then define ##G(f) = \sum_{j = 1}^t g_{i_j} (f(i_j))##. We leave it to the reader to show that this is a linear transformation and if ##G## exists then it must be defined in this way, that is, it is unique. ... ... "

Can someone please help me to ...

(1) demonstrate explicitly, clearly and in detail that ##G(f) = \sum_{j = 1}^t g_{i_j} (f(i_j))## satisfies ##G \circ \epsilon_i = g_i## (if I understand the detail of this then I may well understand the functions involved better, and in turn, understand the theorem better ...)

(2) show that ##G## is a linear transformation and, further, that if ##G## exists then it must be defined in this way, that is, it is unique.

Hope that someone can help ...

Peter
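
For reference, here is a sketch of how the computation asked for in (1) and (2) might go. It rests on assumptions about the definitions in the image, which are not reproduced in the text above: that ##V = \bigoplus_{i \in I} V_i## consists of the functions ##f## on ##I## with ##f(i) \in V_i## and finite support ##\{i_1, \dots, i_t\}##, that ##\epsilon_i : V_i \to V## sends ##v## to the function which is ##v## at ##i## and ##0## elsewhere, and that each ##g_i : V_i \to W## is linear. If the book's definitions differ, the steps would need adjusting.

$$
\begin{aligned}
&\textbf{(1)}\ \text{For } v \in V_i,\ v \neq 0:\ \text{the support of } \epsilon_i(v) \text{ is } \{i\},\ \text{so } t = 1,\ i_1 = i, \text{ and} \\
&\qquad G(\epsilon_i(v)) = g_i\big(\epsilon_i(v)(i)\big) = g_i(v). \\
&\quad\ \text{For } v = 0:\ \epsilon_i(0) \text{ is the zero function, the sum is empty, and } G(\epsilon_i(0)) = 0 = g_i(0). \\[4pt]
&\textbf{(2a)}\ \text{Since } g_i(0) = 0,\ \text{for any finite } S \supseteq \operatorname{spt}(f):\quad G(f) = \sum_{i \in S} g_i(f(i)). \\
&\quad\ \text{With } S = \operatorname{spt}(f) \cup \operatorname{spt}(h) \text{ and scalars } a, b: \\
&\qquad G(af + bh) = \sum_{i \in S} g_i\big(a f(i) + b h(i)\big) = a \sum_{i \in S} g_i(f(i)) + b \sum_{i \in S} g_i(h(i)) = a\,G(f) + b\,G(h). \\[4pt]
&\textbf{(2b)}\ \text{Every } f \text{ with support } \{i_1, \dots, i_t\} \text{ satisfies } f = \sum_{j=1}^{t} \epsilon_{i_j}\big(f(i_j)\big). \\
&\quad\ \text{If } G' \text{ is linear with } G' \circ \epsilon_i = g_i \text{ for all } i, \text{ then} \\
&\qquad G'(f) = \sum_{j=1}^{t} G'\big(\epsilon_{i_j}(f(i_j))\big) = \sum_{j=1}^{t} g_{i_j}\big(f(i_j)\big) = G(f).
\end{aligned}
$$

The point of step (1) is that ##\epsilon_i(v)## has at most one nonzero coordinate, so the sum defining ##G## collapses to a single term; step (2b) is what forces the formula for ##G##.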
