- #1

JimJCW

- TL;DR Summary

- Recently I noticed that when calculating Dnow and Dthen using Jorrie’s calculator, the results are slightly inconsistent with those obtained from Gnedin’s or Wright’s calculator. Could there be a glitch in Jorrie’s calculator?

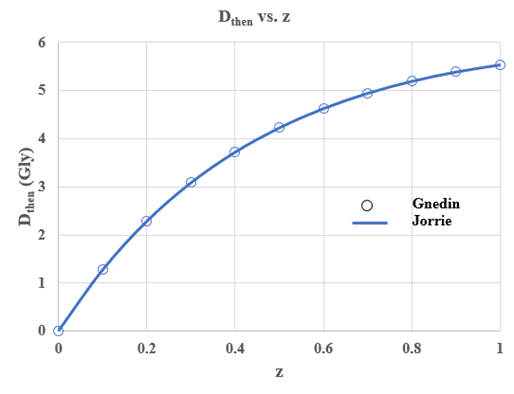

Jorrie’s LightCone7-2017-02-08 Cosmo-Calculator is very handy for calculations based on the ΛCDM model. Recently I noticed that when calculating Dnow and Dthen using Jorrie’s calculator, the results are slightly, but persistently, inconsistent with those obtained from Gnedin’s or Wright’s calculator. An example is shown below:

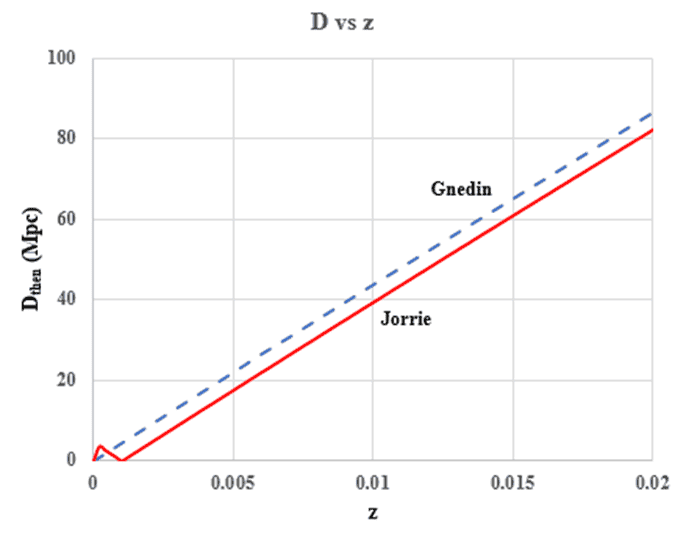

The inconsistency becomes more obvious for small z values. For example,

Based on the observation that the results from Gnedin’s and Wright’s calculators are consistent with each other, and that Jorrie’s result shown in the above figure is peculiar for small z values, one may wonder whether there is a glitch in Jorrie’s calculator. You can help by doing the following:

(1) Verify that there is such a peculiar result in Jorrie’s program and

(2) Contact @Jorrie about it if you know how.
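For anyone who wants an independent cross-check for step (1), here is a minimal Python sketch that integrates the flat ΛCDM distance numerically. It is not the code used by any of the three calculators. The parameter values (H0 = 67.74 km/s/Mpc, Ωm = 0.3089) are assumed Planck-2015-like defaults, radiation is neglected, and the function names `d_now_gly` and `d_then_gly` are made up for this illustration. Dnow is taken here as the comoving distance today and Dthen as Dnow/(1+z).

```python
import math

C_KM_S = 299792.458          # speed of light in km/s
GLY_PER_MPC = 3.2615638e-3   # 1 Mpc expressed in Gly


def d_now_gly(z, h0=67.74, omega_m=0.3089, steps=2000):
    """Comoving distance today (Dnow) in Gly.

    Assumes flat LambdaCDM with matter and Lambda only (radiation neglected).
    h0 and omega_m are assumed values, not taken from any calculator.
    """
    omega_l = 1.0 - omega_m  # flatness assumption

    def inv_e(zp):
        # 1 / E(z), where E(z) = H(z) / H0
        return 1.0 / math.sqrt(omega_m * (1.0 + zp) ** 3 + omega_l)

    # Composite Simpson's rule on [0, z]; steps must be even.
    h = z / steps
    total = inv_e(0.0) + inv_e(z)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * inv_e(i * h)
    integral = total * h / 3.0

    return (C_KM_S / h0) * integral * GLY_PER_MPC


def d_then_gly(z, **kwargs):
    """Proper distance at emission (Dthen) in Gly: Dnow / (1 + z)."""
    return d_now_gly(z, **kwargs) / (1.0 + z)


if __name__ == "__main__":
    for z in (0.01, 0.1, 1.0, 10.0):
        print(f"z = {z:5.2f}  Dnow = {d_now_gly(z):.6f} Gly  "
              f"Dthen = {d_then_gly(z):.6f} Gly")
```

For small z the result should approach the Hubble-law value cz/H0, which makes it easy to see which calculator drifts away at the low-z end. Because radiation is left out and the parameters are assumed, small differences from all three calculators at high z are expected and do not by themselves indicate a bug.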