Questions

1. Consider the ring ##R= C([0,1], \mathbb{R})## of continuous functions ##[0,1]\to \mathbb{R}## with pointwise addition and multiplication of functions. Set ##M_c:=\{f \in R\mid f(c)=0\}##.

(a) (solved by @mathwonk ) Show that the map ##c \mapsto M_c, c \in [0,1]## is a bijection between the interval ##[0,1]## and the maximal ideals of ##R##.

(b) Show that the ideal ##M_c## is not finitely generated.

Hint: Assume not and take a generating set ##\{f_1, \dots, f_n\}##. Consider the function ##f = |f_1| + \dots + |f_n|## and play with the function ##\sqrt{f}## to obtain a contradiction.

2. We all know that the geometric mean is at most the arithmetic mean. I memorize it with ##3\cdot 5 < 4\cdot 4##. Now we consider the arithmetic-geometric mean ##M(a,b)##, which lies between the two. Let ##a,b## be two nonnegative real numbers. We set ##a_0=a\, , \,b_0=b## and define the sequences ##(a_k)\, , \,(b_k)## by

$$

a_{k+1} :=\dfrac{a_k+b_k}{2}\, , \,b_{k+1}=\sqrt{a_kb_k}\quad k=0,1,\ldots

$$

Then the arithmetic-geometric mean ##M(a,b)## is the common limit

$$

\lim_{n \to \infty}a_n = M(a,b) = \lim_{n \to \infty}b_n

$$

It is not hard to show that both sequences converge and that their limits coincide, using the known inequality and the monotonicity of the sequences.
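The iteration defined above is easy to try numerically. The following is only a sketch for experimenting with the two sequences, not a proof of anything; the function name `agm`, the tolerance `tol` and the cap `max_iter` are choices made for this illustration.

```python
import math


def agm(a, b, tol=1e-15, max_iter=100):
    """Approximate the common limit of the sequences
    a_{k+1} = (a_k + b_k) / 2 and b_{k+1} = sqrt(a_k * b_k)
    for nonnegative starting values a, b.
    """
    for _ in range(max_iter):
        # Stop once the two sequences agree to relative tolerance tol.
        if abs(a - b) <= tol * max(abs(a), abs(b), 1.0):
            break
        a, b = (a + b) / 2.0, math.sqrt(a * b)
    return (a + b) / 2.0


if __name__ == "__main__":
    # Print the first few iterates for the memorized example 3 and 5.
    a, b = 3.0, 5.0
    for k in range(5):
        print(k, a, b)
        a, b = (a + b) / 2.0, math.sqrt(a * b)
    print("agm(3, 5) is approximately", agm(3.0, 5.0))
```

After the first step the arithmetic iterate is never smaller than the geometric one, so the printed columns approach each other from above and below.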

Prove that for positive ##a,b\in \mathbb{R}## the following holds:

$$

T(a,b):=\dfrac{2}{\pi} \int_0^{\pi/2}\dfrac{d\varphi}{\sqrt{a^2\cos^2 \varphi +b^2 \sin^2 \varphi }} = \dfrac{1}{M(a,b)}

$$

3. (solved by @StoneTemplePython ) If ##A,B,C,D## are four points in the plane, show that

$$

\operatorname{det} \begin{bmatrix}

0&1&1&1&1 \\ 1&0&|AB|^2&|AC|^2&|AD|^2 \\ 1&|AB|^2&0&|BC|^2&|BD|^2 \\ 1&|AC|^2&|BC|^2&0&|CD|^2 \\ 1&|AD|^2&|BD|^2&|CD|^2&0

\end{bmatrix} = 0

$$

4. Let ##T \in \mathcal{B}(\mathcal{H}_1,\mathcal{H}_2)## be a linear, continuous (= bounded) operator between Hilbert spaces.

Prove that the following are equivalent:

(a) ##T## is invertible.

(b) There exists a constant ##\alpha > 0## such that ##T^*T\geq \alpha I_{\mathcal{H}_1}## and ##TT^*\geq \alpha I_{\mathcal{H}_2}\,.## Here ##A \geq B## means ##\langle (A-B)\xi\, , \,\xi \rangle \geq 0## for all ##\xi\,.##

5. Let ##a,b \in \mathbb{F}## be non-zero elements of a field ##\mathbb{F}## of characteristic not two. Let ##A## be the four-dimensional ##\mathbb{F}##-space with basis ##\{\,1,\mathbf{i},\mathbf{j},\mathbf{k}\,\}## and with the bilinear and associative multiplication defined by the conditions that ##1## is the unit element and

$$

\mathbf{i}^2=a\, , \,\mathbf{j}^2=b\, , \,\mathbf{ij}=-\mathbf{ji}=\mathbf{k}\,.

$$

Then ##A=\left( \dfrac{a,b}{\mathbb{F}} \right)## is called a (generalized) quaternion algebra over ##\mathbb{F}##.

Show that ##A## is a simple algebra whose center is ##\mathbb{F}##.

6. Prove that the quaternion algebra ##\left( \dfrac{a,1}{\mathbb{F}} \right)## is isomorphic to the matrix algebra ##\mathbb{M}(2,\mathbb{F})## of ##2\times 2## matrices for every ##a\in \mathbb{F}-\{\,0\,\}\,.##

7. (solved by @Not anonymous ) Show that there are infinitely many primes of the form ##4k+3\, , \,k\in \mathbb{N}_0##.

8. (solved by @benorin ) Do ##\displaystyle{\sum_{n=0}^\infty}\, \dfrac{(-1)^n}{\sqrt{n+1}}## and the Cauchy product ##\left( \displaystyle{\sum_{n=0}^\infty}\, \dfrac{(-1)^n}{\sqrt{n+1}} \right)^2## converge or diverge?

9. (solved by @DEvens ) Consider the curve ##\gamma\, : \,\mathbb{R}\longrightarrow \mathbb{C}\, , \,\gamma(t)=\cos(\pi t)\cdot e^{\pi i t}\,.##

(a) Find the minimal period of ##\gamma##,

(b) prove that ##\gamma(\mathbb{R})=\{\,(x,y)\in \mathbb{R}^2\,|\,x^2+y^2-x=0\,\}##,

(c) show that ##\gamma(\mathbb{R})## is symmetric with respect to the ##x##-axis,

(d) parameterize ##\gamma## with respect to its arc length.

10. (solved by @DEvens ) Let ##\gamma\, : \,I \longrightarrow \mathbb{R}^n## be a regular curve with unit tangent vector ##T=\dfrac{d}{dt} \gamma\,.## An (orthonormal) frame is a smooth (##C^\infty##) transformation ##F\, : \,I \longrightarrow \operatorname{SO}(n)## with ##F(t)e_1=T(t)## where ##\{\,e_i\,\}## is the standard basis of ##\mathbb{R}^n\,.## The pair ##(\gamma,F)## is called a framed curve, and the matrix ##A## given by ##\dfrac{d}{dt}F=F'=FA## is called the derivation matrix of ##F##.

Let ##F_0\, : \,\mathbb{R}\longrightarrow \operatorname{SO}(n)## be a frame of a regular curve ##\gamma\, : \,\mathbb{R}\longrightarrow \mathbb{R}^n##. Show that

(a) If ##F\, : \,\mathbb{R}\longrightarrow \operatorname{SO}(n)## is another frame of ##\gamma##, then there exists a transformation ##\Phi\, : \,\mathbb{R}\longrightarrow \operatorname{SO}(n)## with ##\Phi(t)e_1=e_1## for all ##t\in \mathbb{R}## and ##F=F_0\Phi\,.##

(b) If on the other hand ##\Phi\, : \,\mathbb{R}\longrightarrow \operatorname{SO}(n)## is a smooth transformation with ##\Phi(t)e_1=e_1\,,## then ##F:=F_0\cdot\Phi## defines a new frame of ##\gamma##.

(c) If ##A_0## is the derivation matrix of ##F_0##, and ##A## the derivation matrix of the transformed frame ##F:=F_0\Phi## with ##\Phi## as above, then

$$

A=\Phi^{-1}A_0\Phi +\Phi^{-1}\Phi'

$$

11. (solved by @Not anonymous ) Show that the number of ways to express a positive integer ##n## as the sum of consecutive positive integers is equal to the number of odd factors of ##n##.

12. (solved by @Not anonymous ) How many solutions in non-negative integers are there to the equation:

$$

x_1+x_2+x_3+x_4+x_5+x_6=32

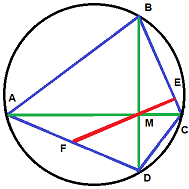

$$13. (solved by @etotheipi ) Let ##A, B, C## and ##D## be four points on a circle such that the lines ##AC## and ##BD## are perpendicular. Denote the intersection of ##AC## and ##BD## by ##M##. Drop the perpendicular from ##M## to the line ##BC##, calling the intersection ##E##. Let ##F## be the intersection of the line ##EM## and the edge ##AD##. Then ##F## is the midpoint of ##AD##.
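The construction in this question can be checked numerically before attempting a proof. The sketch below makes simplifying assumptions that are not part of the problem: the circle is the unit circle, ##AC## is the horizontal chord and ##BD## the vertical chord through ##M##, and ##M## lies strictly inside the circle. The function name `midpoint_check` is hypothetical.

```python
import math


def midpoint_check(mx, my):
    """Return (F, midpoint of AD) for the assumed configuration:
    unit circle, AC horizontal and BD vertical through M = (mx, my),
    with M strictly inside the circle.
    """
    ax, ay = -math.sqrt(1 - my * my), my
    cx, cy = math.sqrt(1 - my * my), my
    bx, by = mx, math.sqrt(1 - mx * mx)
    dx, dy = mx, -math.sqrt(1 - mx * mx)

    # E is the foot of the perpendicular from M onto the line BC.
    ux, uy = cx - bx, cy - by
    t = ((mx - bx) * ux + (my - by) * uy) / (ux * ux + uy * uy)
    ex, ey = bx + t * ux, by + t * uy

    # Intersect the line through E and M with the line through A and D:
    # solve M + s * p = A + u * q for (s, u) by Cramer's rule.
    px, py = mx - ex, my - ey      # direction of line EM
    qx, qy = dx - ax, dy - ay      # direction of line AD
    wx, wy = ax - mx, ay - my
    det = -px * qy + qx * py
    u = (px * wy - py * wx) / det

    f = (ax + u * qx, ay + u * qy)
    mid = ((ax + dx) / 2.0, (ay + dy) / 2.0)
    return f, mid


if __name__ == "__main__":
    for m in [(0.0, 0.0), (0.6, 0.0), (0.3, -0.2), (-0.5, 0.6)]:
        f, mid = midpoint_check(*m)
        print(m, f, mid)
```

Agreement of the two printed points for a handful of positions of ##M## is of course only evidence, not a proof.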

14. (solved by @timetraveller123 ) Prove that every non-negative integer ##n\in \mathbb{N}_0## can be written as

$$

n=\dfrac{(x+y)^2+3x+y}{2}

$$

with uniquely determined non-negative integers ##x,y\in \mathbb{N}_0\,.##

15. (solved by @odd_even ) Calculate

$$

S = \int_{\frac{1}{2}}^{3} \dfrac{1}{\sqrt{x^2+1}}\,\dfrac{\log(x)}{\sqrt{x}}\,dx \, + \, \int_{\frac{1}{3}}^{2} \dfrac{1}{\sqrt{x^2+1}}\,\dfrac{\log(x)}{\sqrt{x}}\,dx

$$
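A closed-form answer for ##S## can be sanity-checked by numerical integration. The sketch below uses a plain composite Simpson rule in pure Python; the helper names `integrand` and `simpson` and the number of subintervals are choices made for this illustration, and the printed value is only an approximation.

```python
import math


def integrand(x):
    """log(x) / (sqrt(x) * sqrt(x^2 + 1)), the common integrand of S."""
    return math.log(x) / (math.sqrt(x) * math.sqrt(x * x + 1.0))


def simpson(f, lo, hi, n=2000):
    """Composite Simpson rule on [lo, hi] with an even number n of subintervals."""
    if n % 2:
        n += 1
    h = (hi - lo) / n
    total = f(lo) + f(hi)
    for i in range(1, n):
        # Interior nodes alternate between weight 4 (odd i) and 2 (even i).
        total += (4 if i % 2 else 2) * f(lo + i * h)
    return total * h / 3.0


S = simpson(integrand, 0.5, 3.0) + simpson(integrand, 1.0 / 3.0, 2.0)
print("S is approximately", S)
```

Comparing the two summands separately can also hint at which substitution relates the two integrals.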
