Discussion Overview

The discussion revolves around the modeling of two-phase flow in a packed bed using conservation equations, specifically focusing on the derivation of mass, momentum, and energy equations that account for phase changes in the fluid. Participants explore the complexities involved in simulating this system, including the need to track temperature and phase fronts over time.
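
One common starting point for equations of this kind, offered here as a hedged sketch and not as a formulation proposed in the thread, is a one-dimensional homogeneous equilibrium model written in enthalpy form. It rests on several assumptions:

- Liquid and vapor share one velocity and one temperature.
- The fluid and the static packing are in local thermal equilibrium.
- Pressure work and kinetic energy are neglected.
- The single-phase Ergun coefficients are reused with mixture properties. This is a rough assumption, because two-phase pressure drop normally needs dedicated correlations.

The symbols are defined as follows: $\varepsilon$ is the void fraction, $u$ the superficial velocity, $\rho$, $\mu$, $h$ the mixture density, viscosity and specific enthalpy, $\rho_s c_s$ the volumetric heat capacity of the packing, $d_p$ the particle diameter, and $k_{\mathrm{eff}}$ an effective axial conductivity that lumps in dispersion.

```latex
\begin{aligned}
&\text{Mass:} && \varepsilon \frac{\partial \rho}{\partial t} + \frac{\partial (\rho u)}{\partial z} = 0 \\
&\text{Momentum (Ergun-type closure):} && -\frac{\partial p}{\partial z} = \frac{150\,\mu (1-\varepsilon)^2}{\varepsilon^3 d_p^2}\,u + \frac{1.75\,\rho (1-\varepsilon)}{\varepsilon^3 d_p}\,|u|\,u \\
&\text{Energy:} && \frac{\partial}{\partial t}\left[\varepsilon \rho h + (1-\varepsilon)\rho_s c_s T\right] + \frac{\partial (\rho u h)}{\partial z} = \frac{\partial}{\partial z}\left(k_{\mathrm{eff}} \frac{\partial T}{\partial z}\right) \\
&\text{Closure:} && T = \begin{cases} T_{\mathrm{sat}}(p), \quad x = \dfrac{h - h_f(p)}{h_g(p) - h_f(p)}, & h_f(p) \le h \le h_g(p) \\ T(h, p) \text{ from single-phase properties}, & \text{otherwise} \end{cases}
\end{aligned}
```

Because enthalpy, rather than temperature, is the transported variable, the phase front does not need explicit tracking. It sits wherever $h$ crosses $h_f$ or $h_g$ along the bed.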

Discussion Character

- Exploratory

- Technical explanation

- Conceptual clarification

- Debate/contested

- Mathematical reasoning

Main Points Raised

- One participant seeks to derive mass, momentum, and energy equations for two-phase flow, noting that existing equations for single-phase flow are insufficient.

- Another participant suggests starting with simpler models to understand the system before increasing complexity, emphasizing the importance of preliminary calculations.

- Participants discuss the need to consider system parameters such as diameter, packing type, void fraction, and flow direction in the modeling process.

- There is a proposal to model the isothermal limiting cases (all liquid vs. all gas) to estimate residence time and pressure variation.

- One participant acknowledges the need to account for pressure drop and residence time in their models and asks how best to calculate them.

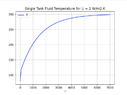

- The discussion touches on lumped parameter models, including how temperature and other properties behave when axial dispersion is high.

- Participants express intentions to formulate simplified models and share their approaches to the conservation equations.
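
The isothermal all-liquid and all-gas limits suggested above can be estimated with a few lines of arithmetic. This is a minimal sketch, not a method taken from the thread. It makes the following assumptions:

- The pressure drop is given by the Ergun equation for flow through a packed bed of spheres.
- The residence time is the void volume divided by the volumetric flow, which equals εL/u for superficial velocity u.
- The gas is treated as incompressible, which is only reasonable while the computed pressure drop is small compared with the absolute pressure.

All bed dimensions and the mass flow are hypothetical illustration values. The fluid properties are approximate values for saturated water and steam near 1 atm.

```python
import math

# Hypothetical bed geometry and operating point (illustration only).
BED_LENGTH = 1.0           # m
BED_DIAMETER = 0.1         # m
PARTICLE_DIAMETER = 0.005  # m
VOID_FRACTION = 0.4        # dimensionless
MASS_FLOW = 0.01           # kg/s

# Approximate saturated properties near 1 atm (assumed, for illustration).
LIQUID = {"rho": 958.0, "mu": 2.8e-4}  # water: kg/m^3, Pa*s
GAS = {"rho": 0.6, "mu": 1.2e-5}       # steam: kg/m^3, Pa*s


def superficial_velocity(mass_flow, rho, diameter):
    """Velocity the fluid would have in the empty column (m/s)."""
    area = math.pi * diameter**2 / 4.0
    return mass_flow / (rho * area)


def residence_time(length, u_sup, eps):
    """Mean residence time: interstitial velocity is u_sup/eps, so tau = eps*L/u_sup (s)."""
    return eps * length / u_sup


def ergun_pressure_drop(length, u_sup, eps, d_p, rho, mu):
    """Ergun pressure drop over a bed of the given length (Pa)."""
    viscous = 150.0 * mu * (1.0 - eps) ** 2 * u_sup / (eps**3 * d_p**2)
    inertial = 1.75 * rho * (1.0 - eps) * u_sup**2 / (eps**3 * d_p)
    return (viscous + inertial) * length


for name, props in (("all liquid", LIQUID), ("all gas", GAS)):
    u = superficial_velocity(MASS_FLOW, props["rho"], BED_DIAMETER)
    tau = residence_time(BED_LENGTH, u, VOID_FRACTION)
    dp = ergun_pressure_drop(BED_LENGTH, u, VOID_FRACTION, PARTICLE_DIAMETER,
                             props["rho"], props["mu"])
    print(f"{name}: u = {u:.3g} m/s, tau = {tau:.3g} s, dP = {dp:.3g} Pa")
```

At a fixed mass flow, u scales as 1/ρ, so the two residence times differ by exactly the liquid-to-gas density ratio. This bracketing is one way to see how strongly the two-phase case depends on where along the bed the fluid boils.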

Areas of Agreement / Disagreement

Participants generally agree on the need to start with simpler models before tackling the complexities of two-phase flow. However, there are multiple competing views on the best approach to modeling and the specific parameters to consider, leaving the discussion unresolved.

Contextual Notes

Participants mention various assumptions and conditions, such as the static nature of the packed bed and the potential significance of pressure drop, which may affect the modeling outcomes. The discussion also highlights the need for further exploration of lumped parameter models and their implications.
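
In the limit of very high axial dispersion, the bed behaves like a single well-mixed tank. In that case the outlet temperature equals the bed temperature, and a lumped energy balance applies:

(M c)_total dT/dt = ṁ c_f (T_in − T)

Its solution decays exponentially toward the inlet temperature with time constant (M c)_total / (ṁ c_f). The sketch below is one illustration of that idea under stated assumptions: single-phase fluid, no heat loss, and fluid and packing at the same temperature. It compares the analytic solution with explicit Euler integration. The steel-like packing properties and the other numbers are hypothetical. A lumped model of this kind yields only a bed-average temperature, so it cannot resolve a moving phase front.

```python
import math


def lumped_time_constant(eps, volume, rho_f, c_f, rho_s, c_s, mass_flow):
    """Thermal time constant (s) of a well-mixed bed: stored heat capacity / (m_dot * c_f)."""
    heat_capacity = (eps * rho_f * c_f + (1.0 - eps) * rho_s * c_s) * volume  # J/K
    return heat_capacity / (mass_flow * c_f)


def analytic_temperature(t, T0, T_in, tau_th):
    """Exact solution of dT/dt = (T_in - T) / tau_th with T(0) = T0."""
    return T_in + (T0 - T_in) * math.exp(-t / tau_th)


def euler_temperature(t_end, T0, T_in, tau_th, n_steps=10_000):
    """Explicit Euler integration of the same lumped energy balance."""
    dt = t_end / n_steps
    T = T0
    for _ in range(n_steps):
        T += dt * (T_in - T) / tau_th
    return T


if __name__ == "__main__":
    # Hypothetical bed: 0.1 m diameter, 1 m long, water-filled steel packing.
    volume = math.pi * 0.1**2 / 4.0 * 1.0
    tau_th = lumped_time_constant(eps=0.4, volume=volume, rho_f=958.0, c_f=4200.0,
                                  rho_s=7800.0, c_s=500.0, mass_flow=0.01)
    for t in (0.0, tau_th, 3.0 * tau_th):
        print(f"t = {t:7.1f} s: analytic {analytic_temperature(t, 20.0, 70.0, tau_th):.2f} C, "
              f"Euler {euler_temperature(t, 20.0, 70.0, tau_th):.2f} C")
```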

Who May Find This Useful

This discussion may be useful for researchers and practitioners interested in fluid dynamics, thermal management in packed beds, and the modeling of phase changes in porous media.