Questions

1. (solved by @nuuskur ) Let ##U\subseteq X## be a dense subset of a normed vector space, ##Y## a Banach space and ##A\in L(U,Y)## a linear, bounded operator. Show that there is a unique continuation ##\tilde{A}\in L(X,Y)## with ##\left.\tilde{A}\right|_U = A## and ##\|\tilde{A}\|=\|A\|\,.## (FR)

2. Let ##X\sim \mathcal{N}(\mu, \sigma^2)## and ##Y\sim \mathcal{N}(\lambda,\sigma^2)## be normally distributed random variables on ##\mathbb{R}## with expectation values ##\mu,\lambda \in \mathbb{R}## and standard deviation ##\sigma##. We want to test the hypothesis that ##\mu =\lambda## with ##n## independent measurements ##X_1,\ldots,X_n## and ##Y_1,\ldots, Y_n\,.## We choose the mean distance

$$

T_n(X_1,Y_1;X_2,Y_2;\ldots;X_n,Y_n) :=\dfrac{1}{n}\sum_{k=1}^n\left(X_k-Y_k\right)

$$

as an estimator for the difference ## \nu=\mu-\lambda\,.##

a) Does the estimator ##T_n## have a bias and is it consistent?

b) Let ##n=100## and ##\sigma^2=0.5\,.## We use the hypotheses ##H_0\, : \,\mu=\lambda## and ##H_1\, : \,\mu\neq\lambda\,.## Determine a reasonable deterministic test ##\varphi## for the error level ##\alpha=0.05\,.## (FR)

3. (solved by @Antarres )

a) Solve ##y'' x^2-12y=0,\,y(0)=0,\,y(1)=16## and calculate

$$

\sum_{n=1}^\infty \dfrac{1}{t_n},\;t_n:=y(n)-\dfrac{1}{2}y'(n)+\dfrac{1}{8}y''(n)-\dfrac{1}{48}y'''(n)+\dfrac{1}{384}y^{(4)}(n).

$$

b) What do we get for the initial values ##y(1)=1,\,y(-1)=-1## and ##\displaystyle{\sum_{n=1}^\infty \left(y'(n)+y'''(n)\right)}?## (FR)

4. (solved by @Fred Wright ) Solve the initial value problem ##y'(x)=y(x)^2-(2x+1)y(x)+1+x+x^2## for ##y(0)\in \{\,0,1,2\,\}\,.## (FR)

5. (solved by @Lament ) For coprime natural numbers ##n,m## show that

$$

m^{\varphi(n)}+n^{\varphi(m)} \equiv 1 \pmod{nm}

$$

(FR)

6. (solved by @Not anonymous ) If ##a_1,a_2 > 0## and ##m_1,m_2 \geq 0## with ##m_1 + m_2 = 1## show that ##a_1^{m_1}a_2^{m_2} \leq m_1 a_1 + m_2 a_2,## with strict inequality whenever ##a_1 \neq a_2## and ##m_1,m_2 > 0.## (QQ)

7. (solved by @Adesh ) Let ##f## be a three times differentiable function with ##f^{'''}## continuous at ##x = a## and ##f^{'''}(a) \neq 0##. Now, let's take the expression ##f(a + h) = f(a) + hf'(a) + \frac{h^2}{2!}f''(a + \theta h)## with ##0 < \theta < 1## which holds true for ##f##. Show that ##\theta## tends to ##\frac{1}{3}## as ##h \rightarrow 0.## (QQ)

8. (solved by @archaic ) How many real zeros does the polynomial ##p_n(x) = 1+x+x^2/2 + \ldots + x^n/n! ## have? (IR)

9. (solved by @Lament , @Adesh ) Let ##f:[0,1]\to [0,1]## be a continuous function such that ##f(f(f(x)))=x## for all ##x\in [0,1]##. Show that ##f## must be the identity function ##f(x)=x.## (IR)

10. (solved by @Not anonymous , @wrobel ) Let ##f:[0,1]\to\mathbb{R}## be a continuous function, differentiable on ##(0,1)## that satisfies ##f(0)=f(1)=0.## Prove that ##f(x)=f'(x)## for some ##x\in (0,1)##. Note that ##f'## is not assumed to be continuous. (IR)

High Schoolers only


11. (solved by @archaic ) Let ##P(x)=x^n+a_{n-1}x^{n-1}+\ldots +a_1x+a_0## be a monic, real polynomial of degree ##n##, whose zeros are all negative.

Show that ##\displaystyle{\int_1^\infty}\dfrac{dx}{P(x)}## converges absolutely if and only if ##n\geq 2. ## (FR)

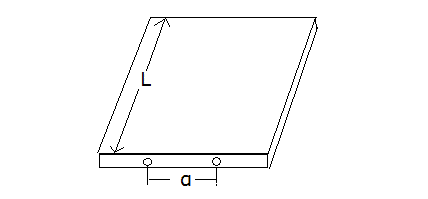

12. A table has an internal movable surface used for slicing bread. So that it can be pulled out, there are two small handles on its front face, a distance ##a## apart and placed symmetrically with respect to the middle of the surface. The length of the surface is ##L## (##Fig.1##). Find the minimum friction coefficient ##K## between the sides of the movable surface and the internal surface of the respective sides of the table, so that we can pull the movable surface using only one of the handles, whatever the magnitude of the force (i.e. however large) we exert. (QQ)

##Fig.1##

13. a.) (solved by @Adesh ) Using only trigonometric substitutions, determine whether ##\lim_{x\to\frac{\pi}{2}} \frac{x - \frac{\pi}{2}}{\sqrt{1 - \sin x}}## exists.

b.) (solved by @archaic ) Find ##\lim_{x\to\infty} [\ln(e^x + e^{-x}) - x]## (QQ)

14. (solved by @Not anonymous ) Let ##p## be a prime number all of whose digits are ##1##. Show that ##p## must have a prime number of digits. (IR)

15. (solved by @Not anonymous ) How many ways can you tile a ##2\times n## rectangle with ##1\times 2## and ##2\times 1## rectangles? (IR)
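For anyone who wants to sanity-check a conjecture before writing up a proof, here is a minimal Python sketch for two of the questions above. It is not a solution: it only tests the congruence in question 5 on small coprime pairs and counts the tilings in question 15 by brute force for small ##n##. The function names `phi`, `congruence_holds` and `count_tilings` are made up for this illustration.

```python
from math import gcd


def phi(n):
    """Euler's totient by direct counting (only sensible for small n)."""
    return sum(1 for k in range(1, n + 1) if gcd(k, n) == 1)


def congruence_holds(n, m):
    """Check m^phi(n) + n^phi(m) = 1 (mod n*m) for a single pair (n, m)."""
    nm = n * m
    lhs = (pow(m, phi(n), nm) + pow(n, phi(m), nm)) % nm
    return lhs == 1 % nm  # 1 % nm handles the trivial case n = m = 1


def count_tilings(n):
    """Count domino tilings of a 2 x n board by exhaustive backtracking."""
    filled = [[False] * n for _ in range(2)]

    def place():
        # Find the first empty cell, scanning column by column.
        for c in range(n):
            for r in range(2):
                if filled[r][c]:
                    continue
                total = 0
                # Domino covering both rows of column c.
                if r == 0 and not filled[1][c]:
                    filled[0][c] = filled[1][c] = True
                    total += place()
                    filled[0][c] = filled[1][c] = False
                # Domino covering columns c and c + 1 in row r.
                if c + 1 < n and not filled[r][c + 1]:
                    filled[r][c] = filled[r][c + 1] = True
                    total += place()
                    filled[r][c] = filled[r][c + 1] = False
                return total
        return 1  # no empty cell left: one complete tiling

    return place()


if __name__ == "__main__":
    pairs = [(n, m) for n in range(1, 30) for m in range(1, 30) if gcd(n, m) == 1]
    print(all(congruence_holds(n, m) for n, m in pairs))
    print([count_tilings(n) for n in range(1, 9)])
```

The brute force is exponential in ##n##, so it is only meant for spotting a pattern in the first few values, which then still has to be proved.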
