Understand the Acoustic Modulation vs. Beating Confusion

A long time ago I read a paper in the IEEE Proceedings recounting the history of the superheterodyne receiver. Overall it was a very interesting and informative article, with one exception: in it the author remarked that the modulation (or mixing) principle was really nothing new, being already known to piano tuners who traditionally used a tuning fork to beat against the piano string’s vibrations. I did a double-take on this assertion and wrote the IEEE a letter, which was published, explaining why this interpretation was fallacious. Most unexpectedly, the author replied by contesting my explanation, maintaining that the beat frequency was indeed equivalent to the intermediate frequency (IF) formed when the radio frequency (RF) and local oscillator (LO) signals are mixed to form a difference-frequency signal. With no further letters supporting (or opposing) my viewpoint, it was left to the Proceedings readers to decide who was right. (I did get one private letter of support.)

I thought maybe it was time to exhume the argument and so include those in the PF community who might have an interest in and/or opinion on the subject.

Let me start by stating that modulation (a.k.a. mixing) is a nonlinear process while beating is a linear process. In physics, these are distinctly different processes.

The term “mixing” has a very specific meaning in radio parlance. Mixing two signals of differing frequencies f1 and f2 results in sidebands (f1 + f2) and |f1 – f2|. These are new signals at new frequencies. Depending on the mixing circuit there can be many higher sidebands as well but let’s assume a simple multiplier as the mixer:

Mixed signal = sin(ω1t)·sin(ω2t) = ½ cos[(ω1 – ω2)t] – ½ cos[(ω1 + ω2)t], where ω = 2πf.

Note that two new frequencies are produced. (The original frequencies are lost in this case but that does not always obtain; cf. below). Now consider two signals beating against each other. Let the tuning fork be at f1 and the piano string at f2; then:

Beat signal = sin(ω1t) + sin(ω2t). This can be rewritten as

Beat signal = 2 sin[(ω1 + ω2)t/2] cos[(ω1 – ω2)t/2].

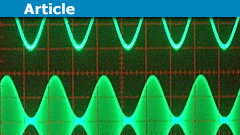

Now, this may look like the same two new signals generated by mixing. But that would be wrong. There are no new signals of frequencies (ω1+ω2) and |ω1 – ω2| generated. A look at a spectrum analyzer would quickly confirm this. (I am aware that the human ear does produce some distortion-generated higher harmonics, but these are small in a normal ear and certainly not what the piano tuner is listening to).
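The spectrum-analyzer check can be imitated numerically. The following is only a minimal sketch: the 440 Hz and 444 Hz tones, the 8 kHz sample rate, and the helper name `spectral_peaks` are illustrative assumptions, not values from the original discussion. It multiplies and then adds the same two sinusoids and lists which frequencies actually carry energy in each case.

```python
import numpy as np

def spectral_peaks(signal, sample_rate, threshold=0.1):
    """Return the frequencies (Hz) whose FFT amplitude exceeds `threshold`."""
    n = len(signal)
    # Scale so that a unit-amplitude sinusoid gives a peak of height 1.
    amplitude = np.abs(np.fft.rfft(signal)) * 2.0 / n
    freqs = np.fft.rfftfreq(n, d=1.0 / sample_rate)
    return [round(float(f), 3) for f in freqs[amplitude > threshold]]

sample_rate = 8000                         # samples per second (assumed)
t = np.arange(sample_rate) / sample_rate   # exactly 1 s, so FFT bins are 1 Hz wide
f1, f2 = 440.0, 444.0                      # hypothetical tuning fork and string

# Nonlinear operation: an ideal multiplier used as the mixer.
mixed = np.sin(2 * np.pi * f1 * t) * np.sin(2 * np.pi * f2 * t)
# Linear operation: plain superposition of the two tones.
beat = np.sin(2 * np.pi * f1 * t) + np.sin(2 * np.pi * f2 * t)

print("mixer output peaks:", spectral_peaks(mixed, sample_rate))
print("beat signal peaks: ", spectral_peaks(beat, sample_rate))
```

With these assumed values the multiplier output shows energy only at 4 Hz and 884 Hz (difference and sum), while the summed signal shows energy only at the original 440 Hz and 444 Hz.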

The beat signal is just the superposition of two signals of close-together frequencies. Assuming |f1 – f2| << f1, f2, the “carrier” frequency of the beat signal is at (f1 + f2)/2 and so approaches f1 = f2 when the piano string is perfectly tuned, and the beat signal amplitude varies with a frequency of |f1 – f2|. |f1 – f2| can be extremely small before the beat essentially disappears altogether to the piano tuner, certainly on the order of a fraction of 1 Hz. It should be obvious that no human ear could detect a sound at that low a frequency (the typical lower cutoff frequency of the human ear is around 15-20 Hz).

This confusion is not helped by other authors of some repute. For example, my Resnick & Halliday introductory physics textbook describes the beating process as follows:

“This phenomenon is a form of amplitude modulation which has a counterpart (sidebands) in AM radio receivers”.

Most inapposite in a physics text! Beating produces no sidebands. A 550-1600 kHz AM signal is amplitude-modulated and of the form

[1 + a sin(ωmt)]sin(ωct)

which produces sidebands at (fc + fm) and (fc – fm) in addition to retaining the carrier signal at fc. Here fc is the carrier frequency (say 1 MHz “in the middle of your dial”), ωm = 2πfm is the modulating frequency, and a is the modulation index, |a| < 1. Of course, in a radio signal, a sin(ωmt) is really a linear superposition of sinusoids, typically music and speech, in the range 50 – 2000 Hz.
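As a rough numerical illustration of the AM expression above, the sketch below builds [1 + a sin(ωmt)]sin(ωct) and lists its spectral lines. The values are assumptions chosen only to keep the arrays small (a 1 kHz "carrier", a 50 Hz modulating tone, a = 0.5); a real broadcast carrier would be near 1 MHz.

```python
import numpy as np

sample_rate = 8000                          # Hz, assumed for this sketch
t = np.arange(sample_rate) / sample_rate    # 1 s of samples, so bin k is k Hz
fc, fm, a = 1000.0, 50.0, 0.5               # scaled-down carrier, modulation, index

# Amplitude-modulated signal: carrier multiplied by (1 + modulating tone).
am = (1 + a * np.sin(2 * np.pi * fm * t)) * np.sin(2 * np.pi * fc * t)

# Normalise so a unit-amplitude sinusoid shows up as a peak of height 1.
amplitude = np.abs(np.fft.rfft(am)) * 2.0 / len(am)

# Collect every spectral line that stands clearly above numerical noise.
peaks = {int(k): round(float(amplitude[k]), 3)
         for k in np.flatnonzero(amplitude > 0.01)}
print(peaks)
```

Under these assumptions the carrier survives at 1000 Hz with amplitude 1, and two sidebands appear at 950 Hz and 1050 Hz, each with amplitude a/2 = 0.25.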


Comments welcome!

AB Engineering and Applied Physics

MSEE

Aerospace electronics career

Used to hike; classical music, esp. contemporary; Agatha Christie mysteries.

[QUOTE=”Averagesupernova, post: 5238614, member: 7949″]I read the whole article and I have come to the exact opposite conclusion you have. Granted when we speak of intermod we generally are referring to UNWANTED signals being generated. But I think that should be obvious in this discussion.[/QUOTE]

Granted on [I]Distortion [/I]being unimportant.

To me it’s obvious the diode rectifies an AC signal creating a pulsed DC signal that’s filtered (to remove the RF frequencies) to smooth the final resulting audio signal.

I read the whole article and I have come to the exact opposite conclusion you have. Granted when we speak of intermod we generally are referring to UNWANTED signals being generated. But I think that should be obvious in this discussion.

[QUOTE=”Averagesupernova, post: 5238334, member: 7949″]Not sure what your last post is getting at nsaspook. I find you contradict yourself.[/QUOTE]

Sorry if that’s happening.

I’m saying, just like the entry on Wiki, that the intermodulation distortion generated by the diode envelope detector is not the same as heterodyne mixing.

Not sure what your last post is getting at nsaspook. I find you contradict yourself.

[QUOTE=”Averagesupernova, post: 5238277, member: 7949″]Yes it is. You cannot demodulate without a carrier. Envelope detector is a bit of a misnomer. The audio signal follows exactly what the envelope is but the carrier is required. Think of it this way. A detector used in this manner is a device that intentionally generates intermodulation distortion. Several signals come into the detector (sidebands and carrier) and they intermodulate to form the audio.[/QUOTE]

An envelope detector will generate audio from the RF envelope of a voice SSB signal; it just won’t be intelligible as human speech until a carrier is added to modify the envelope.

“Intermodulation distortion” Yes it does but that’s not the same as saying it’s a mixer.

[URL]https://en.wikipedia.org/wiki/Intermodulation[/URL]

[quote]

IMD is also distinct from intentional modulation (such as a [URL=’https://en.wikipedia.org/wiki/Frequency_mixer’]frequency mixer[/URL] in [URL=’https://en.wikipedia.org/wiki/Superheterodyne_receiver’]superheterodyne receivers[/URL]) where signals to be modulated are presented to an intentional nonlinear element ([URL=’https://en.wikipedia.org/wiki/Analog_multiplier’]multiplied[/URL]). See [URL=’https://en.wikipedia.org/wiki/Non-linear’]non-linear[/URL][URL=’https://en.wikipedia.org/wiki/Electronic_mixer#Product_mixers’]mixers[/URL] such as mixer [URL=’https://en.wikipedia.org/wiki/Diode’]diodes[/URL] and even single-[URL=’https://en.wikipedia.org/wiki/Transistor’]transistor[/URL] oscillator-mixer circuits. However, while the intermodulation products of the received signal with the local oscillator signal are intended, superheterodyne mixers can, at the same time, also produce unwanted intermodulation effects from strong signals near in frequency to the desired signal that fall within the passband of the receiver.[/quote]

[QUOTE=”nsaspook, post: 5238236, member: 351035″]

Is it (the simple diode detector circuit) also a non-linear mixer when it demodulates the exact same signal (as on a spectrum analyzer) that’s received as modulated AM when it’s normally called and explained as an [URL=’https://en.wikipedia.org/wiki/Envelope_detector’]Envelope detector[/URL]?[/QUOTE]

Yes it is. You cannot demodulate without a carrier. Envelope detector is a bit of a misnomer. The audio signal follows exactly what the envelope is but the carrier is required. Think of it this way. A detector used in this manner is a device that intentionally generates intermodulation distortion. Several signals come into the detector (sidebands and carrier) and they intermodulate to form the audio.

[QUOTE=”Baluncore, post: 5237926, member: 447632″]The BFO generates a replacement for a suppressed or missing carrier. The frequency of the BFO is on one shoulder of the IF channel. The IF signal is usually multiplied by the BFO, but in a simple system the BFO is linearly added to the IF signal prior to a diode detector. A capacitor to ground forms the LPF needed to remove the IFs from the audio difference frequency before the audio amplifier input. The diode in that situation is not just an envelope detector, it is also a non-linear mixer.

[/QUOTE]

I think you have an important point on what functions are called vs what they do.

Is it (the simple diode detector circuit) also a non-linear mixer when it demodulates the exact same signal (as on a spectrum analyzer) that’s received as modulated AM when it’s normally called and explained as an [URL=’https://en.wikipedia.org/wiki/Envelope_detector’]Envelope detector[/URL]?

[QUOTE=”Baluncore, post: 5237926, member: 447632″]The BFO generates a replacement for a suppressed or missing carrier. The frequency of the BFO is on one shoulder of the IF channel. The IF signal is usually multiplied by the BFO, but in a simple system the BFO is linearly added to the IF signal prior to a diode detector. A capacitor to ground forms the LPF needed to remove the IFs from the audio difference frequency before the audio amplifier input. [I][B]The diode in that situation is not just an envelope detector, it is also a non-linear mixer.[/B][/I]

A similar technique was/is used to transmit a 1 kHz master reference signal over frequency multiplexed telephone lines. The 1 kHz was not transmitted directly but as the sum of two harmonics, 2 kHz and 3 kHz tones, on one telephone line. At the receiver, the one signal with both harmonics was fed through a diode into a 1 kHz filter. The output difference frequency of 1 kHz, generated by the non-linear diode, was independent of the phone line frequency multiplexers.[/QUOTE]

Concerning the bold and italicized, this is what I have been referring to in the case of the BFO. The oscillator signal and IF signal may be added together (summed) slightly up-stage of where they are actually heterodyned together but ultimately they need to be non-linearly mixed to obtain the audio. Thank you Baluncore. Also, a higher quality SSB or CW receiver is going to use more than a simple diode detector and the IF and the BFO will remain separate up until said detector.

The BFO generates a replacement for a suppressed or missing carrier. The frequency of the BFO is on one shoulder of the IF channel. The IF signal is usually multiplied by the BFO, but in a simple system the BFO is linearly added to the IF signal prior to a diode detector. A capacitor to ground forms the LPF needed to remove the IFs from the audio difference frequency before the audio amplifier input. The diode in that situation is not just an envelope detector, it is also a non-linear mixer.

A similar technique was/is used to transmit a 1 kHz master reference signal over frequency multiplexed telephone lines. The 1 kHz was not transmitted directly but as the sum of two harmonics, 2 kHz and 3 kHz tones, on one telephone line. At the receiver, the one signal with both harmonics was fed through a diode into a 1 kHz filter. The output difference frequency of 1 kHz, generated by the non-linear diode, was independent of the phone line frequency multiplexers.
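The 2 kHz + 3 kHz reference scheme can be sketched numerically. This is a simplified model under stated assumptions, not the actual telephone hardware: the diode is idealised as a half-wave rectifier, both tones have unit amplitude, the sample rate is 48 kHz, and `amplitude_at` is a hypothetical helper that stands in for the 1 kHz filter.

```python
import numpy as np

sample_rate = 48000                         # Hz, assumed
t = np.arange(sample_rate) / sample_rate    # 1 s of samples, so bin k is k Hz

# Linear sum of the two tones sent down the line: no 1 kHz content yet.
line_signal = np.sin(2 * np.pi * 2000 * t) + np.sin(2 * np.pi * 3000 * t)

# Idealised diode: passes positive half-cycles, blocks negative ones.
rectified = np.maximum(line_signal, 0.0)

def amplitude_at(signal, freq_hz):
    """Amplitude of the spectral line at an integer frequency (1 Hz bins)."""
    spectrum = np.abs(np.fft.rfft(signal)) * 2.0 / len(signal)
    return float(spectrum[int(freq_hz)])

print("1 kHz before diode:", amplitude_at(line_signal, 1000))
print("1 kHz after diode: ", amplitude_at(rectified, 1000))
```

In this model the summed line signal has essentially no energy at 1 kHz, while the rectified signal has a clear 1 kHz line. The new frequency appears only after the non-linear element, which is the distinction the thread keeps returning to.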

[QUOTE=”meBigGuy, post: 5237777, member: 391788″]Do most receivers do this at IF just before the detector? That makes the oscillator pretty simple. I agree that it is linear. You used to have to use them to insert a carrier for SSB (like the SX-43 I used to have).[/QUOTE]

A conversion set will usually insert the BFO signal after the last IF stage and just before the detector so the RF agc is not affected. In general for receive CW tone generation you don’t want non-linear ‘mixing’ or audio signal generation as that will tend to generate audio [I]harmonics[/I] that sound harsh instead of a mellow 700-1000Hz pure sinewave.

[QUOTE=”nsaspook, post: 5237428, member: 351035″]Maybe I’m wrong here but in the BFO example I used no new RF frequencies are generated, only an audio envelope caused by the summing of the two RF frequencies. The detector diode functions as an envelope detector in this circuit. With the RF L1 there is no path for an audio frequency signal to exist or to induce voltage into the diode to audio transformer path.[/QUOTE]

Do most receivers do this at IF just before the detector? That makes the oscillator pretty simple. I agree that it is linear. You used to have to use them to insert a carrier for SSB (like the SX-43 I used to have).

[QUOTE=”rude man, post: 5237336, member: 350494″]Yes, that seems to be the problem here. I thought I made it pretty clear what kind of “beats” I was referring to, but I also acknowledge that the term “beat” can include mixing. A clear example was already mentioned, to wit, the BFO, which of course is a mixing operation.

I do disagree totally with whoever thinks mixing is done in the ear to any audible extent. The lowest audible sound would have to be at the sum frequency, i.e. at twice the t-f frequency, which it clearly isn’t; or it would have to be a very high harmonic of the difference frequency, which still would be at a very low frequency, near the lower end of audibility, which again is not at all what the tuner hears. So please, folks, forget about nonlinear ear response! :smile:[/QUOTE]

Maybe I’m wrong here but in the BFO example I used no new RF frequencies are generated, only an audio envelope caused by the summing of the two RF frequencies. The detector diode functions as an envelope detector in this circuit. With the RF L1 there is no path for an audio frequency signal to exist or to induce voltage into the diode to audio transformer path.

But, I don’t see how the ear bears on this. A linear ear would still hear the amplitude changes caused by piano tuning. They would probably actually hear them better.

Psycho-acoustic and physical ear phenomena extend way past any simple non-linear ear characteristics. [B]Some people can’t hear the beating because they focus on the tones and don’t listen “past them” until trained. [/B] The filtering and processing of acoustic data by the brain is astounding. I could go into dozens of phenomena. From simply stated (like directional filtering or noise suppression) to complex (like sub-harmonic generation). Masking is a hugely significant ear characteristic (basis of mp3 compression). There is a huge difference between the raw acoustic data and the sounds we perceive. But, again, it is not pertinent to this thread. (but I love talking about it)

Well to a point yes we are probably talking past each other. I am pretty sure I have gotten you to believe that I understand all about AM, sidebands, mixing, heterodyning, beats (whether the word is used correctly or incorrectly), etc. and how those contrast with summing. Concerning that, you are preaching to the choir. Where we differ is when the signal(s) get past the ear. I cannot prove my view either until someone has linear hearing. However, I will hold my ground about how things would sound a lot different if our hearing was linear.

Maybe we are talking past each other. I maintain that non linearity is not required to hear the beating of two linearly summed sine waves, and that said beating has no more to do with modulation than whistling a single tone has to do with SSB.

To try and say that I can’t prove that the effect is audible with linear hearing because no one has linear hearing is totally fallacious.

You can see the 1 second 0 to 2A amplitude variation on an oscilloscope, so of course you can hear them.

Whether hearing is linear or non linear is off-topic, and not related to the linear effects we are talking about. It is a completely different subject and way way more complex than we want to get into. We can even hear frequencies that don’t exist (missing fundamental), but that has nothing to do with this issue.

[QUOTE=”meBigGuy, post: 5233330, member: 391788″]Forget the meaning of beat. I agree that it gets thrown around in a way that confuses the issues. The question is whether the wah-wah effect of two sine waves is in any way related to heterodyning. [/QUOTE]

Again, you can’t say that until someone has linear hearing. Good luck with that.

[QUOTE=”meBigGuy, post: 5233325, member: 391788″][B]There is nothing non linear happening in the ear that is required to hear beating.[/B] If you used a perfectly linear microphone you would see the same thing. The envelope varies between 0 and 2A. That is what you hear. You can see it in a scope.[/quote]

You can’t actually say that since no one I know of has ears that hear in a linear manner. I am not saying a person would not hear any effect at all if hearing was linear but it likely would not be perceived as the same thing it is now.

[quote]

If you look at a spectrum, there are no new frequencies created by the summation. The two sine waves are the frequencies created by the modulation of a (x+y)/2 carrier. When you look at them in the time domain they look exactly like a modulated (x+y)/2 carrier. Those two frequencies are ALL THAT EXISTS. There is no energy at any other frequency, [B]and the envelope effect is detectable with a linear microphone.[/B]

[/quote]

Where have I said that if you look at the spectrum we would see more than 2 signals after said 2 signals have been summed?

[quote]

Just the fact that you sum the two frequencies creates the appearance of the results of modulation of a (x+y)/2 carrier. But, there is no spectral energy at (x+y)/2 (unless you want to venture into instantaneous frequency land)

In a room, when you move a microphone through it (forget the ear), you see peaks and valleys caused by summing of different phases. If you move through those at some rate v, then Doppler creates the equivalent of two tones (since there are different relative velocities to the reflective sources). Now, don’t tell me Doppler is modulation, because it creates the appearance of 2 tones in a microphone, and they also appear in the spectrum analysis of the microphone output.

You are not going to like this next paragraph at first. Your comments about a volume control are an interesting phenomenon. That is 1 frequency varying in amplitude. What does it look like in a spectrum analyzer? It appears as two sine waves (sidebands of the modulation). The original sine wave is the equivalent of the (x+y)/2 carrier in the original example, and the volume control is the (x-y)/2 modulating signal.

[/quote]

Why would I not like that paragraph? That is exactly what I would expect.

[quote]

This is really simple if you abandon preconceptions. Look at the trig identity and think about what it means:

[IMG]http://www.mathwords.com/s/s_assets/sum%20to%20product%20identities%20sin%20plus%20sin.gif[/IMG]

The left side is 2 sine waves summed, which are EXACTLY IDENTICAL to the right side product, which represents an (x+y)/2 carrier modulated by an (x-y)/2 signal. You can think of your volume control being varied at an (x-y)/2 rate as the modulator (which it actually is). The hard part is “what happened to the (x+y)/2 carrier when I modulated it”

[URL]http://hyperphysics.phy-astr.gsu.edu/hbase/sound/beat.html[/URL] (replace the ear with a linear microphone and the effect is the same)[/QUOTE]

Forget the meaning of beat. I agree that it gets thrown around in a way that confuses the issues. The question is whether the wah-wah effect of two sine waves is in any way related to heterodyning. It is absolutely not related. Two sine waves happen to also be the output of a certain modulation function. But they are not created by, nor do they represent, modulation.

[QUOTE=”Averagesupernova, post: 5233237, member: 7949″]You can claim to be rapidly changing the volume of a tone with a volume control but this is not all that is happening. You ARE generating new frequencies at the rate you are moving the volume control. The same thing when you walk through the room in your example. But in the walk through example it is happening in the ear.[/QUOTE]

[B]There is nothing non linear happening in the ear that is required to hear beating.[/B] If you used a perfectly linear microphone you would see the same thing. The envelope varies between 0 and 2A. That is what you hear. You can see it in a scope.

If you look at a spectrum, there are no new frequencies created by the summation. The two sine waves are the frequencies created by the modulation of a (x+y)/2 carrier. When you look at them in the time domain they look exactly like a modulated (x+y)/2 carrier. Those two frequencies are ALL THAT EXISTS. There is no energy at any other frequency, [B]and the envelope effect is detectable with a linear microphone.[/B]

Just the fact that you sum the two frequencies creates the appearance of the results of modulation of a (x+y)/2 carrier. But, there is no spectral energy at (x+y)/2 (unless you want to venture into instantaneous frequency land)

In a room, when you move a microphone through it (forget the ear), you see peaks and valleys caused by summing of different phases. If you move through those at some rate v, then Doppler creates the equivalent of two tones (since there are different relative velocities to the reflective sources). Now, don’t tell me Doppler is modulation, because it creates the appearance of 2 tones in a microphone, and they also appear in the spectrum analysis of the microphone output.

You are not going to like this next paragraph at first. Your comments about a volume control are an interesting phenomenon. That is 1 frequency varying in amplitude. What does it look like in a spectrum analyzer? It appears as two sine waves (sidebands of the modulation). The original sine wave is the equivalent of the (x+y)/2 carrier in the original example, and the volume control is the (x-y)/2 modulating signal.

This is really simple if you abandon preconceptions. Look at the trig identity and think about what it means:

[IMG]http://www.mathwords.com/s/s_assets/sum%20to%20product%20identities%20sin%20plus%20sin.gif[/IMG]

The left side is 2 sine waves summed, which are EXACTLY IDENTICAL to the right side product, which represents an (x+y)/2 carrier modulated by an (x-y)/2 signal. You can think of your volume control being varied at an (x-y)/2 rate as the modulator (which it actually is). The hard part is “what happened to the (x+y)/2 carrier when I modulated it”

[URL]http://hyperphysics.phy-astr.gsu.edu/hbase/sound/beat.html[/URL] (replace the ear with a linear microphone and the effect is the same)

Had a look in a couple of textbooks after I got home. Malvino’s Electronic Principles 3rd Edition page 700 under a short paragraph about diode mixers states: “Incidentally, [I]heterodyning[/I] is another word for mix, and [I]beat frequency[/I] is synonymous with difference frequency. In Fig. 23-8 we are heterodyning two input signals to get a beat frequency of [I]Fx – Fy.[/I]” Fig. 23-8 shows a transistor mixer.

–

Bernard Grob’s Basic Television Principles and Servicing Fourth Edition page 287 talks about detecting the 4.5 MHz sound carrier. “The heterodyning action of the 45.75 MHz picture carrier beating with the 41.25 MHz center frequency of the sound signal results in the lower center frequency of the 4.5 MHz.” Right or wrong it is not uncommon to use the word BEAT when dealing with frequency mixing. Grob’s TV book also talks about interference on page 415: “As one example, the rf interference can beat with the local oscillator in the rf tuner to produce difference frequencies that are in the IF passband of the receiver.” There are other sections talking about various signals ‘beating’ together to form interference in the picture, such as co-channel picture and sound carriers that ‘beat’ with the LO to cause interference. The word is thrown around pretty loosely.

–

Those were the first two books I picked up out of the many I still have. Didn’t look at the amateur radio books I have, but I am sure it has been mentioned there as well.

[QUOTE=”meBigGuy, post: 5233068, member: 391788″]That ear thing may be a real phenomenon, but is not the cause of the beat we hear. The beat we hear is the same as what we hear when we walk through a reflective room with a 1 kHz tone playing. It is caused by actual increases and decreases in amplitude (wavelength of 1 kHz = 1 foot). THERE IS NO NEW FREQUENCY. (well, not exactly, because Doppler from moving effectively changes the single tone to 2 tones)[/quote]

You can claim to be rapidly changing the volume of a tone with a volume control but this is not all that is happening. You ARE generating new frequencies at the rate you are moving the volume control. The same thing when you walk through the room in your example. But in the walk through example it is happening in the ear.

[quote]

There is no non linearity of any kind involved (needed?) in the beating we hear when we sum two tones. PERIOD! It is detectable by a fully linear system.

[/quote]

Except the human ear, which is not linear.

[quote]

I repeat from my previous post: [B]You can create the two tones (x and y) by modulating an (x+y)/2 carrier with an (x-y)/2 signal. That action will produce two new tones, x, and y.[/B] If piano tuners were doing that then I would agree.

[/quote]

Are you claiming that I have said the following? Because I have not.

[quote]

[U][B]Saying two tones in ANY way represents a modulated signal is the same as saying 1 tone represents an SSB signal[/B][/U]. Is whistling a precursor to ssb modulation? After all, the signals [I]happen[/I] to look the same, just as in the piano tuner case.[/QUOTE]

They are not modulated for the same reason the carrier and the audio are not modulated until after the modulator/mixer stage. When the ear is involved, that is the modulator/mixer stage.

–

The problem with this is that you are making a comparison between a system where all the signals are measurable such as a mixer or modulator stage in radio equipment and a system where the products (new frequencies) are not measurable because they are generated in the ear. Yes there are similarities but I would have hoped I had made it clear what happens where. Maybe I have failed in that.

[QUOTE=”Averagesupernova, post: 5232707, member: 7949″]When was it determined that it is the non-linearity of our ears that create the perception of a new signal with signals that are simply summed together and listened to?[/QUOTE]

That ear thing may be a real phenomenon, but is not the cause of the beat we hear. The beat we hear is the same as what we hear when we walk through a reflective room with a 1 kHz tone playing. It is caused by actual increases and decreases in amplitude (wavelength of 1 kHz = 1 foot). THERE IS NO NEW FREQUENCY. (well, not exactly, because Doppler from moving effectively changes the single tone to 2 tones)

There is no non linearity of any kind involved (needed?) in the beating we hear when we sum two tones. PERIOD! It is detectable by a fully linear system.

I repeat from my previous post: [B]You can create the two tones (x and y) by modulating an (x+y)/2 carrier with an (x-y)/2 signal. That action will produce two new tones, x, and y.[/B] If piano tuners were doing that then I would agree.

[U][B]Saying two tones in ANY way represents a modulated signal is the same as saying 1 tone represents an SSB signal[/B][/U]. Is whistling a precursor to ssb modulation? After all, the signals [I]happen[/I] to look the same, just as in the piano tuner case.

A slightly irreverent demonstration of beats.

[MEDIA=youtube]CuGEPkI117s[/MEDIA]

[URL]http://arxiv.org/pdf/1409.2384v1.pdf[/URL]

It IS in fact about semantics. You can define the word beat to mean whatever you want. The perception of the difference signal commonly referred to as a beat means that it actually is *there*. Now the nitpicking can start concerning where the *there* actually is. In the case of a couple of notes played on a synthesizer keyboard or piano, the new note is created in our ears due to the nature of our hearing being logarithmic. I am sure I have seen in textbooks that frequency mixing and beating are the same thing. I am not really one to pick sides on semantics so I won’t make an argument either way. My nitpicking is, as I previously stated in this thread as well as other threads here on PF, about frequency mixing and AM modulation being the same thing. If no new frequencies are created then it is neither.

–

Incidentally, the quote: [quote]in it the author remarked that the modulation (or mixing) principle was really nothing new, being already known to piano tuners who traditionally used a tuning fork to beat against the piano string’s vibrations. [/quote]

may have a little more validity than would appear. When was it determined that it is the non-linearity of our ears that create the perception of a new signal with signals that are simply summed together and listened to? Was this knowledge responsible for the idea of superhet? Who was the first person to understand that non-linearity is required?

[QUOTE=”meBigGuy, post: 5232384, member: 391788″]I disagree. I am in total support of rude man. Everything I am saying is in his paper.

When you linearly add two sine waves, as described in the OP paper, no new frequencies are created, so there is no way to create the equivalent of an IF frequency. The beats experienced during the tuning of a piano in no way represent a prior art with regard to a superhet architecture.

[B]

The summed signals only have the [I][U]appearance[/U][/I] of a modulated signal.[/B] [B]They were not created by modulation[/B]. They could be created by a true modulator that started with an (x+y)/2 carrier, but in that case the x and y frequencies would be newly created by the modulator.

Remember, the disagreement here is with regard to this sentence in an article discussing the[B] history of the superheterodyne receiver[/B]:

[B]”in it the author remarked that the modulation (or mixing) principle was really nothing new, being already known to piano tuners who traditionally used a tuning fork to beat against the piano string’s vibrations.”[/B]

The summed[B] time domain waveform ONLY APPEARS as a modulated signal[/B]. Its [B]method of creation[/B] is of no value in a superhet architecture since [B]no new frequencies are created in the frequency domain. [/B]That is a key point, and cannot be ignored. [B] In any truly modulated or mixed signal, new frequencies are actually created.[/B]

The beating of linearly summed sinewaves is in no way (either practically or mathematically) similar in principle to mixing or modulating to produce true new frequencies.

Feel free to write up the terms as you want, such that one could consider piano tuning beating in any way similar to superhet mixing or true modulation.

[B]J[COLOR=#b30000]ust because the beating signal looks like a signal created by modulation does not mean the process to create it in any way involved modulation.[/COLOR][/B][/QUOTE]

I agree with the idea. But I could understand someone including beating in their definition of modulation, basically using modulation as a catch all term for any signal “mixing”.

I don’t think that’s the way the definition should go. Modulation should not include beating, IMO. Words have meanings and meanings are particularly important in technical fields. But words are also defined by use, and I don’t hold myself up as an arbiter of use.

I do agree beating is not an example of prior art for non-linear mixing.

I disagree. I am in total support of rude man. Everything I am saying is in his paper.

When you linearly add two sine waves, as described in the OP paper, no new frequencies are created, so there is no way to create the equivalent of an IF frequency. The beats experienced during the tuning of a piano in no way represent a prior art with regard to a superhet architecture.

[B]The summed signals only have the [I][U]appearance[/U][/I] of a modulated signal.[/B] [B]They were not created by modulation[/B]. They could be created by a true modulator that started with an (x+y)/2 carrier, but in that case the x and y frequencies would be newly created by the modulator.

Remember, the disagreement here is with regard to this sentence in an article discussing the [B]history of the superheterodyne receiver[/B]:

[B]“in it the author remarked that the modulation (or mixing) principle was really nothing new, being already known to piano tuners who traditionally used a tuning fork to beat against the piano string’s vibrations.”[/B]

The summed [B]time domain waveform ONLY APPEARS as a modulated signal[/B]. Its [B]method of creation[/B] is of no value in a superhet architecture since [B]no new frequencies are created in the frequency domain.[/B] That is a key point, and cannot be ignored. [B]In any truly modulated or mixed signal, new frequencies are actually created.[/B]

The beating of linearly summed sinewaves is in no way (either practically or mathematically) similar in principle to mixing or modulating to produce true new frequencies.

Feel free to write up the terms as you want, such that one could consider piano tuning beating in any way similar to superhet mixing or true modulation.

[B][COLOR=#b30000]Just because the beating signal looks like a signal created by modulation does not mean the process to create it in any way involved modulation.[/COLOR][/B]

This seems to be a semantic argument. It has far more to do with how we define terms than any actual disagreement.

Let’s talk about beating from the frequency domain perspective. If beating is like modulation, then there must be a carrier (real or suppressed) and sidebands (and it turns out there are, in a crazy sort of way).

[IMG]http://www.mathwords.com/s/s_assets/sum%20to%20product%20identities%20sin%20plus%20sin.gif[/IMG]

If you look at the trig identity for adding two sinewaves (of equal amplitude), the right side represents a carrier of frequency (x+y)/2 being multiplied by a modulation function at (x-y)/2.

If you look at the summed signals (x and y) in the frequency domain, there have to be those two signals at x and y, and nothing else (because I am just linearly adding two sine waves). So, where are the carrier and sidebands?

Well, it turns out the two signals, x and y, ARE the sidebands, and the suppressed carrier, (x+y)/2, is halfway between them. It’s strange to think about it that way, but it is an accurate portrayal of what is actually happening.
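In case the image above does not load, here is the same sum-to-product identity written out, followed by the sideband bookkeeping. This is only a restatement of the argument; treating x and y as angular frequencies and the labels ω_c (carrier) and ω_m (modulation) are my own notation.

```latex
\sin(xt) + \sin(yt)
  = 2\,\sin\!\left(\frac{x+y}{2}\,t\right)\cos\!\left(\frac{x-y}{2}\,t\right)

% Read the right-hand side as a suppressed-carrier product:
\omega_c = \frac{x+y}{2}, \qquad \omega_m = \frac{x-y}{2}
\quad\Longrightarrow\quad
\omega_c + \omega_m = x, \qquad \omega_c - \omega_m = y
```

So the "sidebands" at ω_c ± ω_m are exactly the two tones that were added in the first place, and nothing appears at ω_c or ω_m themselves.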

That illustrates that beating causes no new frequencies, and is therefore totally useless as (and is totally distinct from) a mixing function. It does cause modulation. Beating is analogous to what happens when you create standing waves. When the waves are 180° out of phase, they cancel, but no new frequencies are created. Think of what is happening as you walk through a room with a tone playing (and think Doppler).

Therefore, any conclusion that beating caused by linearly adding two sinewaves is the same as, or even similar to, heterodyning is totally incorrect.

[QUOTE=”Averagesupernova, post: 5231775, member: 7949″]

–

I think that the word beat was originally used interchangeably with mixing. A BFO used in an SSB or CW (Morse) receiver is in fact mixed with the IF in order to generate an audio signal. It is NOT linear. The ear is in fact non-linear but we don’t beat a couple of MHz signals together in our ear to get an audible signal. The non-linear process has to occur in the radio, not the ear.[/QUOTE]

The AM demodulation process (the envelope-detector diode in this circuit) is non-linear, but the actual BFO injection is usually a simple linear addition (here the BFO is added to the antenna signal), as in this simple crystal radio circuit.

[IMG]http://crystalradio.net/crystalsets/bfo/images/image005.gif[/IMG]
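To put rough numbers on that, here is a small sketch with assumptions labeled: the two tones are scaled down to 5000 Hz and 5800 Hz so they fit a 48 kHz sample rate, the diode is idealized as a half-wave rectifier, and `amplitude_at` is just a helper name I made up. It measures the 800 Hz difference component before and after the detector.

```python
import numpy as np

sample_rate = 48000                          # Hz (assumed)
t = np.arange(sample_rate) / sample_rate     # exactly 1 s, so FFT bins are 1 Hz apart
f_sig, f_bfo = 5000.0, 5800.0                # hypothetical signal and BFO frequencies

# Linear BFO injection: the two signals are simply added.
injected = np.sin(2 * np.pi * f_sig * t) + np.sin(2 * np.pi * f_bfo * t)

# Idealized envelope-detector diode: half-wave rectification (non-linear).
detected = np.maximum(injected, 0.0)

def amplitude_at(signal, freq_hz):
    """Amplitude of the FFT bin at freq_hz (valid here because bins are 1 Hz wide)."""
    spectrum = np.abs(np.fft.rfft(signal)) / (len(signal) / 2)
    return float(spectrum[int(round(freq_hz))])

beat_before = amplitude_at(injected, f_bfo - f_sig)  # after linear addition only
beat_after = amplitude_at(detected, f_bfo - f_sig)   # after the non-linear diode

print("800 Hz component before diode:", beat_before)
print("800 Hz component after diode: ", beat_after)
```

With these assumed values, the difference frequency is essentially absent after the linear addition and only shows up once the summed signal has gone through the non-linear element.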

This subject has been beaten to death with disagreement in each related thread here on PF. meBigGuy pretty much hit the nail on the head. Mixing and modulation are both multiplication. Depending on who you ask they are linear or non-linear. A true linear amplifier can have many signals applied to its input and will not generate new frequencies. So this implies to me that mixing and modulation are non-linear processes.

–

I think that the word beat was originally used interchangeably with mixing. A BFO used in an SSB or CW (Morse) receiver is in fact mixed with the IF in order to generate an audio signal. It is NOT linear. The ear is in fact non-linear but we don’t beat a couple of MHz signals together in our ear to get an audible signal. The non-linear process has to occur in the radio, not the ear.

Look at the trig identities. Mixing is multiplication, beating is addition.

[IMG]http://www.mathwords.com/s/s_assets/sum%20to%20product%20identities%20sin%20plus%20sin.gif[/IMG]

[IMG]http://www.mathwords.com/p/p_assets/product%20to%20sum%20identities%20cos%20cos.gif[/IMG]

The heterodyne process is non-linear (mixing) but modulation can be either.

[URL]http://www.comlab.hut.fi/opetus/333/2004_2005_slides/modulation_methods.pdf[/URL]

Non-linear ring modulator.

[IMG]https://upload.wikimedia.org/wikipedia/commons/thumb/c/cd/Ring_Modulator.PNG/300px-Ring_Modulator.PNG[/IMG]

or linear, as with an AM modulator, where the output amplitude follows the modulating signal and obeys the principle of superposition with respect to that signal.

Yes, I agree that an audio ‘beat’ like when using a BFO on a Morse code receiver is not the same as a ‘mixed’ signal but ‘modulation’ in general is not restricted to superposition (or the lack of superposition) of signals.

Maybe we need to stop using the term “mixer”. To an audio engineer, mixing involves adding signals in a linear device, so as to prevent energy appearing at new frequencies. To a radio engineer, mixing involves multiplying signals in a non-linear device, so as to cause energy to appear at new frequencies.

Yes, that seems to be the problem here. I thought I made it pretty clear what kind of "beats" I was referring to, but I also acknowledge that the term "beat" can include mixing. A clear example was already mentioned, to wit, the BFO, which of course is a mixing operation. I do disagree totally with whoever thinks mixing is done in the ear to any audible extent. The lowest audible sound would have to be at the sum frequency, i.e. at twice the tuning-fork frequency, which it clearly isn't; or it would have to be a very high harmonic of the difference frequency, which still would be at a very low frequency, near the lower end of audibility, which again is not at all what the tuner hears. So please, folks, forget about nonlinear ear response! :smile:
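The arithmetic behind that argument, as a minimal sketch: the 440 Hz fork, the 442 Hz string, and the nominal 20 Hz to 20 kHz audible range are assumed example values, and the function names are made up for illustration.

```python
AUDIBLE_RANGE_HZ = (20.0, 20000.0)   # nominal hearing range (assumed)

def second_order_products(f1_hz, f2_hz):
    """Frequencies an ideal multiplier (mixer) would produce from f1 and f2."""
    return {"difference": abs(f1_hz - f2_hz), "sum": f1_hz + f2_hz}

def is_audible(freq_hz):
    """True if freq_hz lies inside the assumed audible range."""
    low, high = AUDIBLE_RANGE_HZ
    return low <= freq_hz <= high

fork_hz, string_hz = 440.0, 442.0    # assumed tuning fork and slightly sharp string
products = second_order_products(fork_hz, string_hz)

for name, freq in products.items():
    print(f"{name}: {freq} Hz, audible as a tone: {is_audible(freq)}")
```

With these values a mixer would give a 2 Hz difference tone, far below audibility, and an 882 Hz sum tone at roughly twice the fork pitch. Neither matches what the tuner reports, which is a tone at about the fork pitch getting louder and softer a couple of times per second.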

The ear responds in a non-linear way, so that's where modulation must occur. I am familiar with both superhets and piano tuning. See also http://www.indiana.edu/~audres/Publications/humes/papers/18_Humes.pdf

My understanding is that you are correct. Beating is linear, mixing is non-linear.