Find Out the Results Shown at 2017 CERN EPS Conference

The EPS conference was a week ago, and many new results and future plans were shown. A good general overview has been collected by Paris Sphicas in his Summary slides.

I had a look at some presentations and collected things I personally found interesting. Warning: The selection is heavily biased, I cannot cover everything, and I probably missed many interesting things.

Detector development

4D tracking

Currently ATLAS and CMS have about 40 collisions per bunch crossing, and collect the signal from all these collisions together. They are spread out over 4-5 cm, but that still gives about 1 collision per millimeter. Figuring out which track belongs to which collision point is challenging, and assigning tracks to the wrong point is an important issue in many analyses. With the planned HL-LHC upgrade, the experiments will get about 150 collisions per bunch crossing. The detectors will be upgraded, but the conditions will get much more challenging. Currently the tracking detectors only record where a particle has been in space (“3D”). The different collisions are distributed over something like 150 picoseconds. If the detectors could also record the time (“4D”), it would be much easier to assign these hits to the correct collision point. That would need new detectors. It is unlikely that they will be ready for the HL-LHC upgrade, but future detectors could use them. CMS is studying whether it can get more precise timing information from existing detector elements.
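To make the benefit of the time coordinate concrete, here is a minimal toy sketch, not the experiments' reconstruction. It assumes Gaussian spreads of 45 mm along the beam and 150 ps in time, and hypothetical resolution windows of 1 mm and 30 ps; `merged_fraction` and all its parameters are made up for illustration. It counts how often a collision has a neighbour too close to separate, with and without timing.

```python
import random

def merged_fraction(n_collisions, z_window_mm, t_window_ps=None,
                    sigma_z_mm=45.0, sigma_t_ps=150.0,
                    n_crossings=50, seed=1):
    """Fraction of collisions that have a neighbour too close to tell apart.

    With t_window_ps=None only the position along the beam is used ("3D");
    otherwise two collisions must also be close in time to be confused ("4D").
    All widths and windows are illustrative assumptions.
    """
    rng = random.Random(seed)  # same seed -> same toy collisions for 3D and 4D
    merged = 0
    for _ in range(n_crossings):
        collisions = [(rng.gauss(0.0, sigma_z_mm), rng.gauss(0.0, sigma_t_ps))
                      for _ in range(n_collisions)]
        for i, (z_i, t_i) in enumerate(collisions):
            for j, (z_j, t_j) in enumerate(collisions):
                if i == j:
                    continue
                close_in_z = abs(z_i - z_j) < z_window_mm
                close_in_t = t_window_ps is None or abs(t_i - t_j) < t_window_ps
                if close_in_z and close_in_t:
                    merged += 1
                    break  # one confusable neighbour is enough
    return merged / (n_crossings * n_collisions)

if __name__ == "__main__":
    for n in (40, 150):
        only_space = merged_fraction(n, z_window_mm=1.0)
        with_time = merged_fraction(n, z_window_mm=1.0, t_window_ps=30.0)
        print(f"{n} collisions: 3D {only_space:.2f}, 4D {with_time:.2f}")
```

Because both calls use the same seed, they see the same toy collisions, so the 4D fraction can never be larger than the 3D one. How much smaller it is depends entirely on the assumed windows.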

The LHCb trigger upgrade

The LHC has bunch crossings with a rate of 40 MHz. Storing the full detector information of every collision is impossible, so multiple trigger stages reduce the data rate. The detectors cannot even be read out fully at this rate. Initially, only parts of the detector are read out and analyzed in specialized hardware that mainly looks for high-energy particles. If such a particle is present, the whole event is read out and studied on computers, where the event can be reconstructed in detail. This second step reduces the rate to something that can be analyzed by the scientists. ATLAS and CMS reduce the event rate to ~100 kHz in the first step and ~1 kHz in the second step. LHCb reduces it to 1 MHz and then to 12 kHz.
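The rates quoted above translate into rejection factors per trigger stage. The following small sketch just does that arithmetic; the function and dictionary names are made up for illustration, and the rates are the approximate values from the text.

```python
BUNCH_CROSSING_RATE_HZ = 40e6  # LHC bunch crossing rate from the text

# Approximate output rate of each trigger stage, in Hz (values from the text)
TRIGGER_CHAINS = {
    "ATLAS/CMS": [100e3, 1e3],
    "LHCb": [1e6, 12e3],
}

def rejection_factors(input_rate_hz, stage_output_rates_hz):
    """Return the factor by which each stage reduces its input rate."""
    factors = []
    rate = input_rate_hz
    for output_rate in stage_output_rates_hz:
        factors.append(rate / output_rate)
        rate = output_rate
    return factors

if __name__ == "__main__":
    for name, stages in TRIGGER_CHAINS.items():
        factors = rejection_factors(BUNCH_CROSSING_RATE_HZ, stages)
        overall = BUNCH_CROSSING_RATE_HZ / stages[-1]
        print(name, [round(f, 1) for f in factors], f"overall {overall:.0f}")
```

With these numbers, the first stage does most of the rejection in ATLAS and CMS, while LHCb's first stage only removes a factor of 40.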

LHCb focuses on hadrons with a b or c quark. They are produced at a rate of ~2 MHz. The main result of the first trigger stage is “there is probably a meson with b or c”, but you cannot even read out all the events that could be interesting! The traditional approach would be to increase the readout a bit so the first stage can accept more events. LHCb will do something much more radical: Get rid of the first stage. Upgrade the detector (2019-2020) to read out everything, and feed everything into computers analyzing every event. To fully use this, increase the luminosity by a factor of 5 with respect to the current rate.

That removes the first bottleneck. But there is still the second one: The rate written to disk for further analysis. It will be increased for the upgrade (planned: 40-100 kHz, or 2-5 GB/s), but ideally you would like to store everything, and you cannot – not even for the most interesting decays of these particles. Here the second change helps. Currently triggers are binary: Each trigger algorithm decides “this event we keep” or “this we don’t keep”, and you keep the event if at least one algorithm decided to keep it. LHCb will probably change this: The algorithms in the trigger will get upgraded to match the quality of the current algorithms the physics analyses use. Each event gets a weight of how interesting it is for further analysis, and then the trigger picks the most interesting ones. Ideally every analysis gets a dataset of the best events for their analysis.
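The difference between the two trigger philosophies can be sketched in a few lines. This is only a toy illustration, not LHCb's software: the event fields (`pt`, `displaced`), the scoring function and the fixed `budget` are hypothetical stand-ins for real trigger quantities and bandwidth limits.

```python
import heapq

def binary_trigger(events, algorithms):
    """Current scheme: keep an event if at least one algorithm accepts it."""
    return [event for event in events
            if any(accept(event) for accept in algorithms)]

def ranked_trigger(events, score, budget):
    """Proposed scheme (sketch): keep the `budget` highest-scoring events."""
    return heapq.nlargest(budget, events, key=score)

# Toy events with made-up properties
events = [
    {"id": 1, "pt": 2.0, "displaced": True},
    {"id": 2, "pt": 0.5, "displaced": False},
    {"id": 3, "pt": 5.0, "displaced": False},
    {"id": 4, "pt": 1.0, "displaced": True},
]

# Two hypothetical yes/no trigger algorithms
algorithms = [
    lambda event: event["pt"] > 4.0,
    lambda event: event["displaced"],
]

def interest_score(event):
    """Hypothetical weight: momentum plus a bonus for a displaced decay."""
    return event["pt"] + (2.5 if event["displaced"] else 0.0)

kept_binary = [e["id"] for e in binary_trigger(events, algorithms)]
kept_ranked = [e["id"] for e in ranked_trigger(events, interest_score, budget=2)]
print("binary OR:", kept_binary)
print("ranked, budget 2:", kept_ranked)
```

In the binary scheme the output rate is whatever the algorithms happen to accept. In the ranked scheme the rate is fixed by the budget and the weights decide which events fill it.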

Apart from the cost, the first change has only advantages – a computer analyzing the full event will do a much better job than some chips studying some small parts of the event data. The second (proposed) change is tricky, however. It applies a lot of the event selection directly in the trigger – which means the rejected events are gone, you cannot change the selection afterwards. If you realize some part of the detector calibration was not perfect in the past: Too bad, the events are gone. If you realize some selection should have been looser: the events are gone. If you need some special samples to study the background in your analysis: Better hope you planned for that in advance. I think it is a good step, as it will increase the sample size for many analyses a lot – but it will be a lot of work to do it properly.

Dark matter

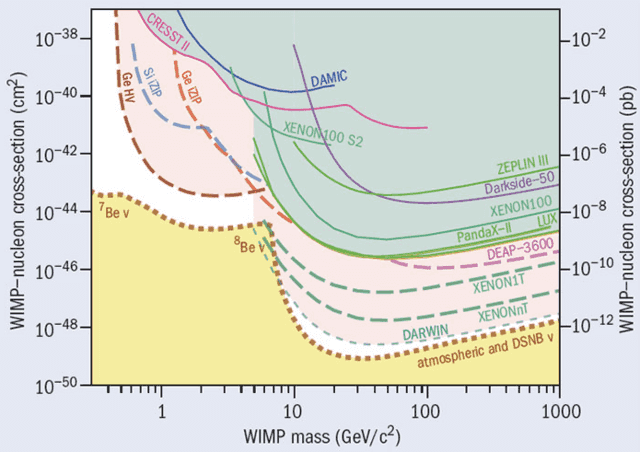

A crowded plot from a CERN Courier article showing various exclusion limits for dark matter from different experiments. Everything above the curves is excluded, so lower is better. Dotted lines are future measurements. The yellow area at the bottom is the neutrino background discussed in the text. Note how DARWIN will reach it or get close to it, depending on the mass.

XENON1T results

With just a month of data-taking, the exclusion limits are already better than those of previous experiments. The experiments are in a fast race towards the discovery or the exclusion of all the interesting cross section values. While XENON1T is taking more data, preparations for the next iteration, XENONnT, are already underway. 9 years ago XENON10 (10 kg) had an exclusion limit a factor of 1000 worse, and 5 years ago XENON100 had an exclusion limit a factor of 25 worse. A few other experiments published similar limits at similar times. Roughly every year the sensitivity doubles. It is reminiscent of Moore’s law, just faster.

DARWIN plans to continue this trend, with a planned sensitivity a factor of 500 better than the current limit from XENON1T. With the scaling from above, we would expect that for 2026. Quite close: Data-taking is expected to start in 2023, and reaching the full sensitivity will need about 7 years.
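The scaling argument in this section is simple exponential arithmetic and can be checked in a few lines. The helper names below are made up for illustration; the input numbers are the ones quoted in the text.

```python
import math

def doubling_time_years(improvement_factor, years):
    """Doubling time implied by an improvement of `improvement_factor` in `years`."""
    return years / math.log2(improvement_factor)

def years_to_gain(improvement_factor, doubling_time=1.0):
    """Years needed to improve by `improvement_factor` at a given doubling time."""
    return doubling_time * math.log2(improvement_factor)

if __name__ == "__main__":
    print(f"XENON10 -> XENON1T:  {doubling_time_years(1000, 9):.2f} years per doubling")
    print(f"XENON100 -> XENON1T: {doubling_time_years(25, 5):.2f} years per doubling")
    print(f"Factor 500 at one doubling per year: {years_to_gain(500):.1f} years")
```

Both historical comparisons give a doubling time of about 0.9 to 1.1 years, and a factor of 500 at one doubling per year takes about 9 years, which is where the 2026 estimate comes from.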

The presentation calls it “the ultimate dark matter detector” for a good reason: It will be the last detector following this rapid trend. Currently the detectors improve their sensitivity by increasing the detector mass (more targets for dark matter to scatter off) and reducing the background, which means filtering out radioactive nuclei even better than before. But there is a background you cannot reduce: neutrinos interacting with the detector. DARWIN will be the first detector to have this as a relevant background. Instead of “if we see events we have dark matter” you get “we see events, and we don’t know precisely how many of them are from neutrinos”. That will slow down future progress a lot. On the positive side, DARWIN can contribute to neutrino physics.

Collider physics

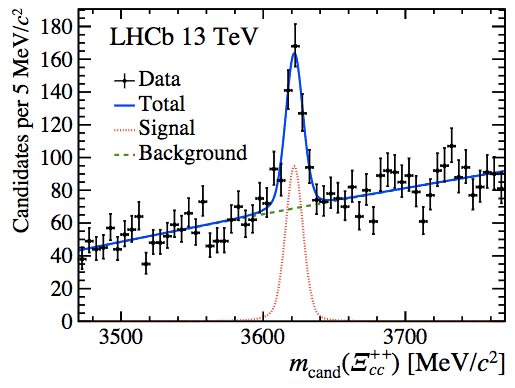

Invariant mass spectrum of Ξcc++ candidates from the LHCb publication. The peak coming from the new particle is clearly visible.

Flavor physics

LHCb found Ξcc++, the first baryon with two heavy quarks (2 charm plus 1 up). It was expected to exist, but the production of two charm quarks together (not charm+anticharm!) is very rare.

There were a few talks about the deviations seen in b→sμμ decays, see this separate thread.

Higgs

We got more precise mass estimates, see this thread.

ATLAS sees H→bb decays (3.6 sigma), while CMS updated its H→ττ measurement: Now 4.9 sigma with Run 2. The summary slides claim 5.9 sigma if we combine Run 1 and Run 2 data.
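For readers who want to translate these numbers: by convention, 3 sigma is called “evidence” and 5 sigma an “observation”. A significance in sigma corresponds to a one-sided Gaussian tail probability, which can be computed as below. This is the standard conversion, shown as a minimal sketch; the function name is made up.

```python
import math

def p_value_from_sigma(n_sigma):
    """One-sided Gaussian tail probability for a significance of n_sigma."""
    return 0.5 * math.erfc(n_sigma / math.sqrt(2.0))

if __name__ == "__main__":
    for n_sigma in (3.0, 3.6, 4.9, 5.0, 5.9):
        print(f"{n_sigma} sigma -> p = {p_value_from_sigma(n_sigma):.2e}")
```

The probability falls very quickly: 3 sigma corresponds to about 1.3 in a thousand, 5 sigma to about 3 in ten million.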

Various other Higgs measurements, reducing the uncertainty of existing measurements.

All in agreement with expectations, nothing unusual here.

Other Standard Model measurements

They rarely get attention, but without them nothing would work: you have to understand the Standard Model to search for deviations from it. And I think the set of measurements here is quite remarkable. To get an idea of the number of measurements, see this summary plot from ATLAS or the similar plot from CMS.

Other searches for new physics

SUSY and more exotic models. Nothing remarkable here, just better exclusion limits in various measurements. A summary can be found in this talk.

Things not covered

To measure gravitational waves, the two LIGO detectors will continue to increase their precision, while VIRGO has already joined for a first short test run and plans to join regular data-taking soon. More detectors will join in the future. LISA was selected as a mission by ESA, with a timescale of 2034. There were also various heavy ion and neutrino measurements, but as far as I can see they were all slight improvements over previous measurements or future plans. Future experiments will be able to determine the neutrino mass ordering (2 light and 1 heavy or 1 light and 2 heavy?) and ultimately measure absolute neutrino masses, but this is many years away. There is some weak evidence that CP violation for neutrinos is very large, but larger datasets are needed to confirm this.

Working on a PhD for one of the LHC experiments. Particle physics is great, but I am interested in other parts of physics as well.

European Physical Society. The first sentence has a link already where you can find this. It doesn’t really matter because it is an international conference anyway.

Interesting reading. Thanks @mfb. But I never did learn what EPS stands for. Perhaps you could add it to the opening sentence.

Thanks for the great summary of the EPS conference!

Well, independent of the exact combinations we have available: ##H \to \tau\tau## has been observed clearly. ##H \to bb## has some notable evidence already, and it will probably follow as soon as CMS gives an update with 2016 data.

Then we will see the Higgs decays in more production modes and more accurate measurements, but no new decay channels until the HL-LHC runs.

The decay to gluons is completely indistinguishable from background. The decay to charm is impossible to see as well – a factor 10 smaller branching fraction and a factor 10 higher background than bottom.

##H \to Z\gamma## will go from upper limits to measured branching fractions after Run 2 (2018), but with an expected significance of something like 1.3 sigma. Here is an ATLAS study, predicting 2.3 sigma with 300/fb (2023) and 3.9 with 3000/fb (~2035).

##H \to \mu\mu## looks a bit better: with the HL-LHC dataset, both ATLAS and CMS are expected to get a bit over 5 sigma.

There are also more exotic decays to specific hadrons, but they are too rare to be found even with the HL-LHC (assuming Standard Model branching fractions).

Oh, I hadn't checked the first link… As far as I understood in slide 7 they mention the combined ATLAS+CMS significance (5.5)? Although I might be wrong right now (tired and the slides are not very clear).

For me, an interesting approach is how they estimated the Ztautau background (by replacing the muons of Zmumu with taus)… Similar approaches I've seen by SUSY guys when they want to estimate the Znunu+jets background (by adding the muons' pT of a Zmumu region to missing momentum)

4.9 with Run 2 alone.

The linked summary slides claim 5.9 sigma in a combination of Run 1 and Run 2 (slide 7), but I don't see that combination in a CMS talk.

“while CMS updated its H→ττ measurement: Now 5.9 sigma.”

Just a typo correction: 4.9 sigma… not that it makes much difference but I was like "so it was 'discovered' just by CMS?!".